Spotify Data Engineer Interview Guide: Process, Tips & Sample Questions (2026)

Introduction

Spotify is one of the world’s most data-driven consumer platforms, powering personalized listening for more than 600 million users across music, podcasts, and emerging AI-driven experiences like the AI DJ. Behind features such as Discover Weekly, Wrapped, and real-time recommendations are Spotify’s data engineers, who design the pipelines, data models, and distributed systems that transform billions of daily events into meaningful insights for creators and listeners.

If you’re preparing for the Spotify data engineer interview, this guide will walk you through exactly what to expect: the full process, the most common SQL, system design, and behavioral questions, how to prepare for multi-round interview loops, and how to stand out by demonstrating both technical depth and value alignment. By the end, you’ll understand what Spotify looks for—and how to show that your work can help shape the next generation of personalized listening.

What does a data engineer do at Spotify?

Data engineers at Spotify build and maintain the large-scale data infrastructure that powers personalization, creator tools, advertising intelligence, financial reporting, and AI-driven user experiences such as Discover Weekly, Blend, Wrapped, and the newly upgraded AI DJ. As Spotify expands its investment in machine-learning–powered features, including major improvements to its DJ voice and recommendation models, data engineers play a critical role in enabling real-time personalization at massive scale.

On a typical team, a Spotify data engineer:

- Designs and operates distributed data pipelines using tools such as Flink, Dataflow, Scio, BigQuery, GCS, and Flyte, processing billions of events daily across mobile, web, and connected devices.

- Models complex datasets covering listening telemetry, session behavior, subscription lifecycle, catalog metadata, and creator analytics to support ML teams, product managers, and data scientists.

- Collaborates across squads and tribes, partnering with backend, ML, research, and product teams to ship data-powered features, especially those that rely on real-time recommendations and AI-generated experiences.

- Ensures data reliability and governance, architecting for schema evolution, GDPR compliance, lineage tracking, reproducibility, and incident recovery.

- Supports ML experimentation and productionization, enabling fast, accurate feature pipelines for training, evaluation, and serving systems that power personalization features like the AI DJ.

At Spotify, the strongest data engineers think beyond pipelines. They think about how dependable, well-designed data unlocks creativity for both listeners and artists.

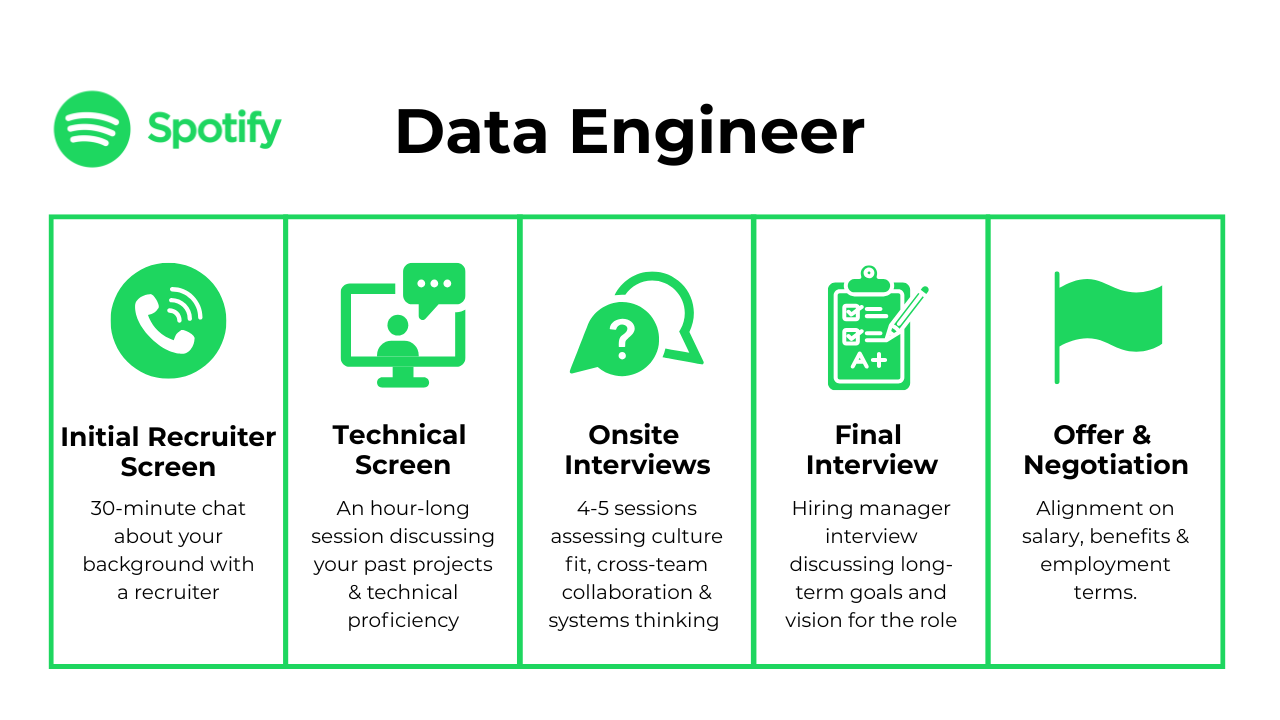

Spotify data engineer interview process: step-by-step

Spotify’s interview process blends technical rigor with cultural alignment. The company calls its hiring journey “Making things official,” a reflection of its people-first approach. Expect a multi-stage process that evaluates your technical expertise, problem-solving skills, and how well you embody Spotify’s five values: innovative, sincere, passionate, collaborative, and playful.

1. Recruiter screen

Your first conversation is with a Spotify recruiter who will review your experience and motivation for applying. You’ll discuss your background in data engineering, familiarity with technologies like Scala, Java, Python, Spark, or Google Cloud Platform, and how your skills align with Spotify’s mission. The recruiter also confirms logistics such as work location, compensation expectations, and timeline.

Tip: Be ready to explain why Spotify’s mission to unlock human creativity genuinely resonates with you. Recruiters want to see that you’re excited not only about data engineering, but also about how your work supports artists, creators, and listeners around the world.

2. Technical screen

If you pass the recruiter screen, you’ll move to a technical interview. This session typically takes place via CoderPad or a shared online editor and lasts around 60 minutes. Expect a mix of SQL and coding questions, pipeline design exercises, and system-thinking problems that test your ability to manage real-world data flow. You might also face short behavioral prompts related to collaboration or adaptability.

Common focus areas include:

- Writing SQL queries to aggregate streaming data efficiently

- Implementing transformations in Python or Scala

- Discussing data modeling choices and trade-offs for large-scale systems

Tip: Communicate your reasoning out loud. Spotify interviewers care just as much about your thought process and collaboration style as they do about the final solution.

3. Onsite (virtual final round)

The final round, often virtual, consists of four to five interviews with different “band members.” Each lasts about 45–60 minutes and covers technical, system-design, and behavioral topics.

Here’s what to expect:

- Coding & SQL: Hands-on problem solving in Python, Scala, or SQL.

- Data architecture/system design: Building scalable, fault-tolerant pipelines using Spotify-scale data.

- Case or scenario discussion: Diagnosing a real data issue or designing an end-to-end workflow.

- Values & culture interview: Demonstrating collaboration, transparency, and creativity in your responses.

Interviewers look for engineers who take ownership, handle ambiguity, and balance efficiency with innovation.

Tip: In the culture interview, highlight moments where you learned from failure or turned curiosity into impact. Spotify celebrates experimentation and sincerity.

4. Decision and offer

After interviews, Spotify gathers feedback from all interviewers. Both you and the company “rate one another” before a decision is made. If successful, you’ll receive an offer package that includes details on compensation, benefits, and Spotify’s Work From Anywhere program. This initiative allows you to work remotely within your region if collaboration remains feasible.

Tip: Before accepting, review data engineer compensation ranges on Levels.fyi at your level. Spotify’s pay varies by region, but competitive total packages include equity and global benefits.

Spotify’s process rewards curiosity, collaboration, and technical depth, which are qualities you can strengthen with the right practice. Before your next round, try your hand at some data engineer case study interview questions to build confidence in articulating more in-depth responses or practice with Interview Query’s AI interviewer.

Spotify data engineer interview questions

Spotify’s data engineer interviews combine deep technical problem solving with values-driven discussion. You will face questions that test your command of SQL, pipeline design, and large-scale systems, along with behavioral prompts that reveal how you collaborate and think under pressure. The examples below reflect real interview themes reported by candidates and align with Spotify’s mission and data platform, which supports both creators and listeners worldwide.

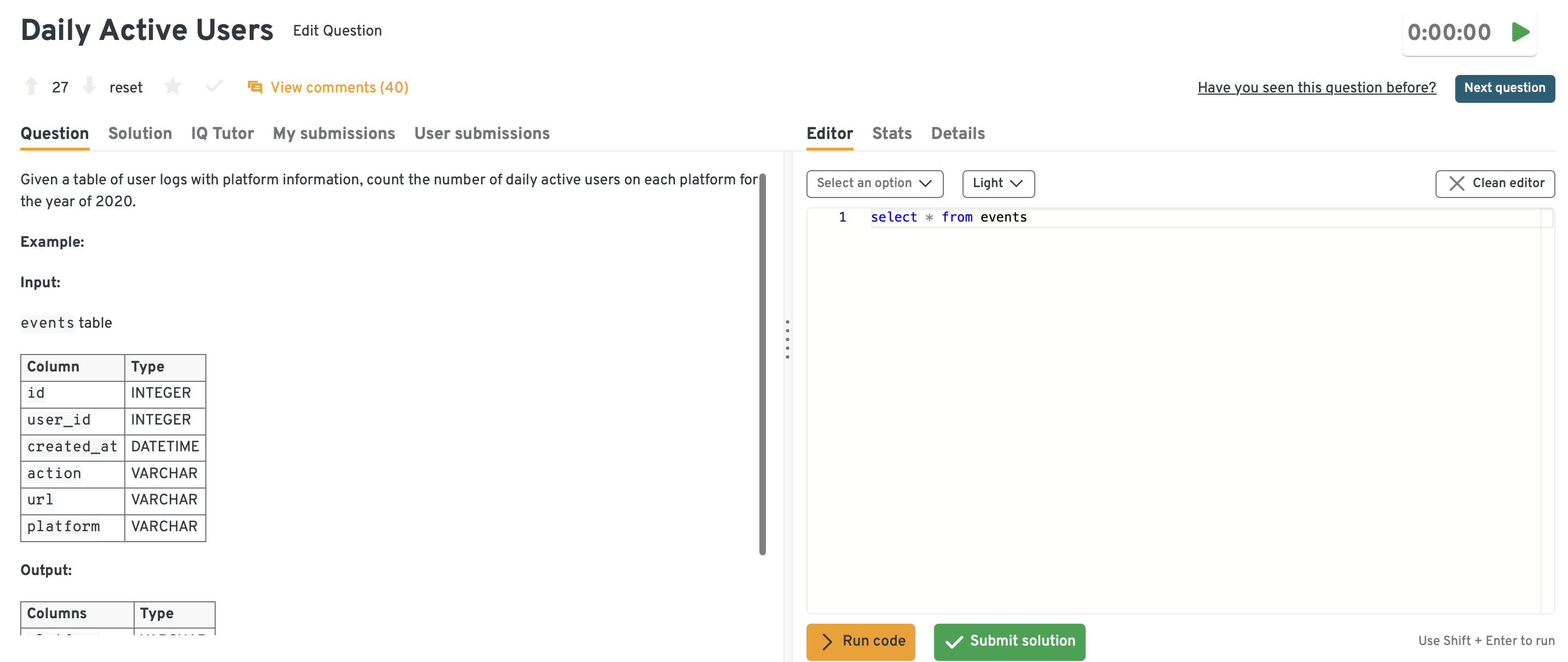

Core SQL and data architecture interview questions

Spotify’s data engineers work with billions of streaming events each day, so SQL fluency and data modeling are essential. In this section, you will find sample questions that test how you reason through queries, design reliable pipelines and model complex data for analytics and product insights. Strong answers show that you understand both the logic of the solution and the realities of running queries at Spotify scale.

1. How would you design a pipeline to track daily active users for Spotify’s mobile app?

A strong answer starts by clarifying what “active” means, for example at least one streaming event in a given day. From there, you would describe how you process events from multiple sources, aggregate by user and date in a batch or streaming job and write the output to a warehouse such as BigQuery. The best answers also cover monitoring and how to handle failures or data loss.

Tip: Think like an owner of a business-critical metric. Clarify the DAU definition, list the main data sources, then walk through how you handle late events, duplicates, missing partitions, schema changes, and GDPR-related deletions. Interviewers listen for guardrails such as backfill strategies and freshness checks, not only which tools you name.
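To make the DAU logic concrete, here is a minimal Python sketch of the aggregation step. The event fields (`event_id`, `user_id`, `event_type`, `ts`) and the rule "one stream event per UTC day" are assumptions for illustration, not Spotify's actual schema or definition.

```python
from collections import defaultdict
from datetime import datetime, timezone


def daily_active_users(events):
    """Count distinct users with at least one stream per UTC day.

    `events` is an iterable of dicts with hypothetical keys:
    event_id, user_id, event_type, and ts (ISO-8601 string with a UTC offset).
    """
    seen_event_ids = set()
    users_by_day = defaultdict(set)
    for e in events:
        if e["event_id"] in seen_event_ids:
            continue  # drop redelivered duplicates
        seen_event_ids.add(e["event_id"])
        if e["event_type"] != "stream":
            continue  # only streams count as "active" in this sketch
        # Bucket by event time (not arrival time) so late events land on the right day.
        day = datetime.fromisoformat(e["ts"]).astimezone(timezone.utc).date()
        users_by_day[day].add(e["user_id"])
    return {day.isoformat(): len(users) for day, users in users_by_day.items()}


events = [
    {"event_id": "e1", "user_id": "u1", "event_type": "stream", "ts": "2026-01-01T23:30:00+00:00"},
    {"event_id": "e1", "user_id": "u1", "event_type": "stream", "ts": "2026-01-01T23:30:00+00:00"},
    {"event_id": "e2", "user_id": "u2", "event_type": "stream", "ts": "2026-01-01T20:00:00-05:00"},
    {"event_id": "e3", "user_id": "u1", "event_type": "app_open", "ts": "2026-01-02T08:00:00+00:00"},
]
dau = daily_active_users(events)
```

In an interview, the part worth saying out loud is why the day is derived from event time and why deduplication happens before counting.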

Head to the Interview Query dashboard to practice Spotify-style data engineering, core SQL, and data architecture interview questions. With built-in Python execution, performance analytics, and AI-guided feedback, it’s one of the fastest ways to sharpen your skills for Spotify data engineering interviews.

2. Write a SQL query to find the top three most streamed songs per country in the past 7 days.

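    -- Count plays per country and track over the last 7 days, then keep the top 3 per country.
    -- QUALIFY is supported by BigQuery, Snowflake, and similar engines; date arithmetic syntax varies by dialect.
    SELECT
        country,
        track_id,
        COUNT(*) AS play_count
    FROM streams
    WHERE played_at >= CURRENT_DATE - INTERVAL '7' DAY
    GROUP BY country, track_id
    QUALIFY ROW_NUMBER() OVER (
        PARTITION BY country
        ORDER BY COUNT(*) DESC, track_id  -- track_id breaks ties deterministically
    ) <= 3;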

Tip: Do not stop at the correct query. Explain how you would make it efficient on a very large table, for example by partitioning on played_at and clustering by country and track_id in BigQuery. Call out practical details such as whether to include podcasts, how to treat very short plays or bots and how your choices affect reporting accuracy.
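Not every engine supports QUALIFY, so it helps to know the equivalent form with a ranked subquery. The sketch below runs that variant against an in-memory SQLite database (window functions need SQLite 3.25 or newer); the sample rows and the cutoff date are made up for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE streams (country TEXT, track_id TEXT, played_at TEXT)")
rows = [
    ("SE", "t1", "2026-01-03"),
    ("SE", "t1", "2026-01-04"),
    ("SE", "t2", "2026-01-03"),
    ("SE", "t4", "2026-01-05"),
    ("SE", "t3", "2025-12-01"),  # older than the cutoff, so excluded
    ("US", "t9", "2026-01-02"),
]
conn.executemany("INSERT INTO streams VALUES (?, ?, ?)", rows)

TOP_N_SQL = """
WITH counts AS (
    SELECT country, track_id, COUNT(*) AS play_count
    FROM streams
    WHERE played_at >= :cutoff
    GROUP BY country, track_id
),
ranked AS (
    SELECT *, ROW_NUMBER() OVER (
        PARTITION BY country
        ORDER BY play_count DESC, track_id  -- tie-breaker keeps results stable
    ) AS rn
    FROM counts
)
SELECT country, track_id, play_count
FROM ranked
WHERE rn <= :n
ORDER BY country, rn
"""

top = conn.execute(TOP_N_SQL, {"cutoff": "2026-01-01", "n": 2}).fetchall()
```

Passing the cutoff as a parameter rather than calling a date function inside the query also makes the result reproducible in tests and backfills.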

3. How do you ensure data quality and consistency across distributed pipelines?

You could mention schema enforcement, validation checks at ingestion and transformation stages, and automated anomaly detection on key metrics. Referring to technologies like Protobuf, Pub/Sub, or Flyte is good, but the focus should be on your overall strategy for catching and triaging issues.

Tip: Organize your answer around contracts, detection and response. Talk about data contracts and schema versioning, volume and cardinality checks, null and distribution monitoring, lineage and reproducibility. Then explain how you would debug issues and communicate impact to downstream analytics or ML teams.
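The detection part of that answer can be sketched as a small batch validator. This is an illustrative example only: the field names, the 1% null threshold, and the function name `validate_batch` are assumptions, and a production setup would usually rely on a dedicated data quality framework.

```python
def validate_batch(rows, required_fields, max_null_ratio=0.01, min_rows=1):
    """Return a list of human-readable issues; an empty list means the batch passed."""
    issues = []
    # Volume check: an empty or tiny batch often signals an upstream outage.
    if len(rows) < min_rows:
        issues.append(f"volume: expected at least {min_rows} rows, got {len(rows)}")
        return issues
    # Null-ratio check per required field.
    for field in required_fields:
        nulls = sum(1 for r in rows if r.get(field) is None)
        ratio = nulls / len(rows)
        if ratio > max_null_ratio:
            issues.append(f"nulls: {field} is null in {ratio:.0%} of rows")
    return issues


batch = [
    {"user_id": "u1", "track_id": "t1"},
    {"user_id": None, "track_id": "t2"},
]
problems = validate_batch(batch, ["user_id", "track_id"], max_null_ratio=0.1)
```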

4. What is your approach to handling late arriving events in a streaming data system?

A solid response covers windowing and watermarking concepts, how you define lateness and how you update aggregates when late events arrive. You might use tools like Flink or Beam, and you should explain what happens when events arrive too late to be processed in the original window.

Tip: Frame your answer around the trade-off between accuracy, latency, and cost. Describe how you set lateness thresholds, how you handle partial recomputation, and how you keep user-facing metrics, for example playlist statistics or Wrapped counts, correct enough without rebuilding everything from scratch.
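The windowing and lateness ideas can be illustrated with a toy tumbling-window counter. This is a deliberately simplified sketch: it treats the highest event time seen so far as the watermark, whereas engines such as Flink and Beam use more sophisticated watermark strategies. The class name and parameters are hypothetical.

```python
from collections import defaultdict


class TumblingWindowCounter:
    """Count events per fixed window, accepting late events within an allowed lateness."""

    def __init__(self, window_seconds, allowed_lateness_seconds):
        self.window = window_seconds
        self.lateness = allowed_lateness_seconds
        self.watermark = 0               # simplification: max event time seen so far
        self.counts = defaultdict(int)   # window start -> event count
        self.dropped = []                # events that arrived after their window closed

    def add(self, event_time):
        self.watermark = max(self.watermark, event_time)
        window_start = event_time - (event_time % self.window)
        window_end = window_start + self.window
        if window_end + self.lateness <= self.watermark:
            # Window is already final: keep the event aside for a later backfill.
            self.dropped.append(event_time)
            return False
        self.counts[window_start] += 1
        return True


counter = TumblingWindowCounter(window_seconds=60, allowed_lateness_seconds=30)
for t in [10, 70, 20, 100, 30]:
    counter.add(t)
```

Here the event at time 20 is late but still inside the allowed lateness, so it updates its window; the event at time 30 arrives after the window was finalized and is routed aside instead.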

5. How would you model a dataset to analyze user churn for Spotify Premium?

Begin with a clear definition of churn, then propose a schema that joins subscription events, billing states and user behavior. You might create a fact table keyed by user with features such as session frequency, skips and plan tenure, and update it daily to feed dashboards or models.

Tip: Show that you understand messy subscription logic. Mention trials, grace periods, failed payments, regional differences and slowly changing dimensions for user and plan attributes. Interviewers appreciate when you call out alignment with finance or analytics teams so churn means the same thing everywhere.
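A churn definition becomes easier to discuss once it is written down as a rule. The sketch below assumes one possible definition: a subscriber has churned when the paid period plus a grace window has passed without a renewal. The 7-day grace period and the function name are illustrative assumptions, not Spotify's actual policy.

```python
from datetime import date, timedelta


def is_churned(period_end, renewed_on, as_of, grace_days=7):
    """Return True if the subscriber counts as churned on `as_of`.

    `renewed_on` is None when no renewal payment has succeeded.
    A renewal after the grace deadline is treated as churn followed by a
    resubscription, which is one of several defensible conventions.
    """
    deadline = period_end + timedelta(days=grace_days)
    if renewed_on is not None and renewed_on <= deadline:
        return False
    return as_of > deadline


in_grace = is_churned(date(2026, 1, 31), None, date(2026, 2, 5))
lapsed = is_churned(date(2026, 1, 31), None, date(2026, 2, 10))
renewed = is_churned(date(2026, 1, 31), date(2026, 2, 3), date(2026, 2, 10))
```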

Need more practice thinking through SQL and data modeling questions like these? Test your skills with a real-world data science challenge on Interview Query to get hands-on experience with the types of problems top tech companies use in interviews.

System design and cloud infrastructure interview questions

Scalability and reliability sit at the core of Spotify’s data platform. These questions explore your ability to design distributed systems, optimize ETL pipelines, and work within a cloud-first environment that uses tools such as Pub/Sub, Dataflow, BigQuery, GCS, Flink, Scio, and Flyte. Interviewers look for systems thinking, clear trade-offs, and awareness of what breaks at scale.

1. Design a scalable data pipeline to process real time streaming events from Spotify listeners.

A typical answer includes ingestion with Pub/Sub, stream processing with Dataflow or Flink, storage in BigQuery or a partitioned data lake on GCS and orchestration with a tool like Flyte. You should describe how you handle retries, idempotency and failure recovery, not just the happy path.

Tip: Go beyond drawing boxes. Explain how your design handles traffic spikes, stateful operations, backpressure and replay from durable storage. Interviewers often ask why you picked one framework over another, so be ready to justify your choices based on latency, cost and team expertise.
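Idempotency is easier to explain with a tiny example. The sketch below assumes each event carries a unique `event_id` (a hypothetical field) and models the sink as a keyed upsert, so replaying a batch after a failure does not double count.

```python
class IdempotentSink:
    """Upsert keyed by event_id so retries and replays do not create duplicates."""

    def __init__(self):
        self.rows = {}

    def write(self, event):
        # Same key overwrites the existing row instead of appending a new one.
        self.rows[event["event_id"]] = event


def replay(events, sink):
    """Write every event to the sink; safe to call repeatedly on the same batch."""
    for event in events:
        sink.write(event)


sink = IdempotentSink()
batch = [
    {"event_id": "a", "user_id": "u1"},
    {"event_id": "b", "user_id": "u2"},
]
replay(batch, sink)
replay(batch, sink)  # simulate a retry after a partial failure
```

The same idea applies to warehouse writes, for example by merging on a key or overwriting a whole partition rather than appending to it.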

2. How would you optimize a daily batch ETL job that is missing its SLA by several hours?

Good answers start with measurement. You would profile the pipeline, identify slow stages, look for skewed joins or heavy shuffles, then apply targeted fixes such as partition pruning, better join strategies, caching intermediates or increasing parallelism.

Tip: Describe your debugging process step by step. Mention common bottlenecks, for example uneven partitions by country or very large joins with track metadata, and how you would simplify or restructure the job. Spotify interviewers look for engineers who question the pipeline design, not only cluster sizes.
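One quick way to confirm a skew hypothesis is to measure how unevenly a join or partition key is distributed. The helper below is an illustrative sketch (the name `skew_report` is made up); on real data you would run the equivalent aggregation in the warehouse or on a sample.

```python
from collections import Counter


def skew_report(keys, top_n=3):
    """Return (key, row_count, ratio_to_mean) for the heaviest keys."""
    counts = Counter(keys)
    if not counts:
        return []
    mean = sum(counts.values()) / len(counts)
    return [(key, n, round(n / mean, 2)) for key, n in counts.most_common(top_n)]


# Hypothetical distribution of rows by country.
keys = ["US"] * 8 + ["SE"] + ["BR"]
heaviest = skew_report(keys, top_n=1)
```

A ratio well above 1 for a few keys suggests that those keys dominate a shuffle, which points toward fixes such as salting the hot keys or broadcasting the smaller side of the join.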

3. Explain how you would architect a data warehouse schema to support Spotify’s financial reporting.

You might propose a star schema with fact tables for revenue and subscription transactions and dimension tables for users, time, regions and plans. You should also mention partitioning and clustering strategies to keep queries predictable and auditable.

Tip: Emphasize correctness and auditability. Talk about how you handle refunds, regional taxes, currency conversion and backfills, and how you keep a consistent historical view with slowly changing dimensions. Performance matters, but accuracy and traceability are the priority in this domain.
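The slowly changing dimension idea comes down to an "as of" lookup: each row carries a validity interval, and a fact is joined to the row that was valid at transaction time. A minimal sketch, with made-up plan names and dates:

```python
from datetime import date

# (user_id, plan, valid_from, valid_to); valid_to is exclusive, None means current.
plan_history = [
    ("u1", "student", date(2025, 1, 1), date(2025, 9, 1)),
    ("u1", "premium", date(2025, 9, 1), None),
]


def plan_as_of(history, user_id, on_date):
    """Return the plan that was valid for `user_id` on `on_date`, or None."""
    for uid, plan, valid_from, valid_to in history:
        if uid != user_id:
            continue
        if valid_from <= on_date and (valid_to is None or on_date < valid_to):
            return plan
    return None


before_change = plan_as_of(plan_history, "u1", date(2025, 8, 31))
on_change_day = plan_as_of(plan_history, "u1", date(2025, 9, 1))
```

Using a half-open interval (inclusive start, exclusive end) avoids a transaction on the change date matching two rows.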

4. Describe how you would handle schema evolution in a production data pipeline.

Start with backward compatible changes, such as adding optional fields, and explain how you manage event versions through tools like schema registries or versioned Protobuf messages. Outline how you roll out changes without breaking downstream consumers.

Tip: Focus on rollout strategy. Mention dual writing to old and new schemas, feature flags, shadow pipelines and close monitoring during migration. Interviewers listen for evidence that you have managed real production changes and can protect other teams from surprises.
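A backward-compatible change usually means old events can still be read under the new schema by filling defaults. The sketch below assumes events carry a `schema_version` field and that version 2 added an optional `device_type`; both are hypothetical.

```python
# Defaults to apply when upgrading from each known version to the latest one.
DEFAULTS_BY_VERSION = {
    1: {"device_type": "unknown"},  # field added in v2 (hypothetical)
    2: {},
}
LATEST_VERSION = max(DEFAULTS_BY_VERSION)


def upgrade_event(event):
    """Normalize an event of any supported schema version to the latest shape."""
    version = event.get("schema_version", 1)
    if version not in DEFAULTS_BY_VERSION:
        raise ValueError(f"unsupported schema_version: {version}")
    # Defaults first, so values present on the event always win.
    upgraded = {**DEFAULTS_BY_VERSION[version], **event}
    upgraded["schema_version"] = LATEST_VERSION
    return upgraded


old_event = upgrade_event({"schema_version": 1, "user_id": "u1"})
new_event = upgrade_event({"schema_version": 2, "user_id": "u2", "device_type": "mobile"})
```

Failing loudly on an unknown version is a deliberate choice here: it surfaces a producer that shipped ahead of its consumers instead of silently dropping fields.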

5. What would you include in a monitoring and alerting setup for data reliability?

You could include pipeline metrics, for example latency and throughput, and data quality checks such as volume anomalies, null ratios and duplication rates. You should also describe how alerts reach the right people and how incidents are documented and resolved.

Tip: Connect monitoring to user and business impact. Explain which failures would affect recommendations, royalties, dashboards or ads and how your alerts would catch them early. Mention on call practices and how you would design alerts to be actionable rather than noisy.
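Two of the checks above, volume anomalies and freshness, fit in a few lines. The thresholds (a z-score of 3 and a 6-hour maximum age) are placeholder assumptions that a real team would tune per dataset.

```python
from datetime import datetime, timedelta, timezone
from statistics import mean, stdev


def volume_is_anomalous(history, today, z_threshold=3.0):
    """Flag today's row count if it deviates strongly from recent history.

    `history` needs at least two values for the standard deviation.
    """
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return today != mu
    return abs(today - mu) / sigma > z_threshold


def is_stale(last_loaded_at, now, max_age=timedelta(hours=6)):
    """Flag a table whose latest successful load is older than `max_age`."""
    return now - last_loaded_at > max_age


recent_counts = [100, 102, 98, 101, 99]
drop_detected = volume_is_anomalous(recent_counts, 60)
normal_day = volume_is_anomalous(recent_counts, 101)
stale = is_stale(
    datetime(2026, 1, 1, 0, 0, tzinfo=timezone.utc),
    datetime(2026, 1, 1, 7, 0, tzinfo=timezone.utc),
)
```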

For deeper technical preparation, explore Interview Query’s system design question bank to practice end to end data pipeline design questions based on real data engineering stacks.

Behavioral and values alignment interview questions

Spotify’s behavioral interviews explore how you collaborate, handle ambiguity, learn from failure, and embody Spotify’s values of innovative, sincere, passionate, collaborative, and playful. These conversations are deeply scenario-based and assess how you operate in Spotify’s squad model, where cross-functional teamwork, humility, and curiosity are essential. Strong answers follow a structured storytelling approach (e.g., STAR) and highlight measurable outcomes or clear learning.

1. Tell me about a time you took a smart risk that didn’t work out.

What interviewers assess: Your ability to experiment responsibly, recover gracefully, and share learnings, reflecting Spotify’s culture of curiosity, iteration, and psychological safety. Interviewers want to see that you can take ownership without defensiveness.

Example answer:

“I tested a new streaming-based metric to reduce reporting lag. A pilot revealed late-event issues that caused temporary inconsistencies, so I rolled back quickly and documented the failure. I then added stricter validation and proposed a safer migration plan. It improved our process for future rollouts.”

Tip: Show that you experiment with guardrails, communicate transparently, and treat failures as learning inputs, all core expectations in Spotify’s fast-moving, experimentation-driven teams.

2. Describe a situation where you collaborated with cross-functional teams to deliver a data solution.

What interviewers assess: How you work within Spotify’s squad/tribe structure, influence without authority, and build alignment across PMs, ML engineers, designers, and data scientists. Collaboration depth matters more than the final output.

Example answer:

“I partnered with a PM and ML engineer to redesign an engagement metric. I facilitated a brief alignment doc, clarified definitions, and proposed trade-offs. Weekly async updates kept the squad synced, and we shipped a metric that improved experiment reliability.”

Tip: Spotify values clarity and co-creation. Demonstrate how you translate technical concepts, write alignment docs, navigate trade-offs, and build shared understanding, not just how you coded something.

3. How do you handle ambiguity when a project’s goals or metrics are unclear?

What interviewers assess: Comfort with evolving direction, ability to create clarity, and how you guide teams forward without overcommitting. Spotify often tests whether you can self-unblock and provide structure.

Example answer:

“For a new content feature, ‘quality’ wasn’t defined. I gathered input from PM and research, drafted proxy metrics with pros and cons, and aligned the team on one starting metric. I kept the pipeline modular so we could refine as we learned more.”

Tip: Highlight how you gather context, propose options, document assumptions, and communicate early. Spotify prizes people who create momentum in uncertainty, not those who wait for perfect guidance.

4. Share an example of when you improved a process or workflow within your team.

What interviewers assess: Bias toward continuous improvement, operational maturity, and your ability to raise engineering quality without big rewrites, which are key in Spotify’s large, distributed data ecosystem.

Example answer:

“I noticed our backfill process caused frequent clashes and slowdowns. I created a shared config and small monitoring dashboard, plus a short runbook. Backfill time and on-call load dropped noticeably within a month.”

Tip: Emphasize practical, high-leverage improvements. Spotify values engineers who elevate reliability and team experience through small, thoughtful optimizations rather than heroic rebuilds.

5. What motivates you about Spotify’s mission to unlock the potential of human creativity?

What interviewers assess: Mission alignment, sincerity, and your understanding of how data engineering impacts creators and listeners, which is a major cultural theme at Spotify.

Example answer:

“I’m motivated by building systems that help artists reach fans and get paid fairly. Reliable data powers royalties, insights, and recommendations, so I see a direct connection between my engineering work and creative outcomes.”

Tip: Connect your motivations to real Spotify impact areas such as recommendations, royalties, creator insights, or fairness. Interviewers look for genuine enthusiasm backed by concrete product understanding, not generic mission statements.

For realistic behavioral preparation, try Interview Query’s coaching program to get one-on-one feedback on your stories and how you present them in Spotify style interviews.

Advanced data engineering and ML integration interview questions

Spotify’s data engineers work closely with machine learning engineers and data scientists to power features like Discover Weekly and Spotify Wrapped. These questions explore how you design data flows that support ML, maintain reproducibility, and think about fairness and ethics in recommendations.

1. How would you design a feature store to support Spotify’s recommendation models?

You could describe a pipeline that builds user and item features from streaming events, stores them in a warehouse for batch use, and exposes them through a serving layer with low-latency lookups. You should explain how you keep features consistent between training and serving paths.

Tip: Talk about training-serving skew, freshness guarantees, and versioning. Interviewers look for plans to keep fast-moving features, such as skip rate, up to date and traceable across batch and streaming pipelines.
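One common source of training-serving skew is leaking future feature values into training rows. A point-in-time lookup avoids this by returning the latest value computed at or before the label timestamp. The sketch below uses integer timestamps and a made-up skip-rate series.

```python
from bisect import bisect_right


def feature_as_of(snapshots, ts):
    """Return the latest feature value computed at or before `ts`.

    `snapshots` is a list of (computed_at, value) tuples sorted by computed_at.
    Returns None if no value existed yet at `ts`.
    """
    times = [computed_at for computed_at, _ in snapshots]
    i = bisect_right(times, ts)
    return snapshots[i - 1][1] if i else None


# Hypothetical skip-rate snapshots for one user.
skip_rate = [(10, 0.2), (20, 0.5), (30, 0.9)]
value_at_25 = feature_as_of(skip_rate, 25)
value_at_5 = feature_as_of(skip_rate, 5)
```

Serving reads the newest value, so training must read the value that would have been newest at the time of each example; the lookup above encodes that rule.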

2. What is your approach to maintaining data versioning for machine learning experiments?

Your answer should cover tracking dataset versions, transformation code and model artifacts. You might mention using a metadata store to tie models back to the exact data and logic that produced them.

Tip: Frame your answer around reproducibility under audit. Explain how you would ensure that a model trained months ago can be reproduced, including the input data snapshot, feature definitions, and configuration.
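A lightweight way to tie a model back to its inputs is to record content hashes alongside the code version. The sketch below is an assumption-laden illustration (function names, manifest fields, and the version string are hypothetical); at scale you would hash file or partition contents rather than in-memory rows.

```python
import hashlib
import json


def fingerprint(obj):
    """Stable SHA-256 of a JSON-serializable object (key order does not matter)."""
    payload = json.dumps(obj, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def build_manifest(dataset_rows, feature_config, code_version):
    """Record what a training run consumed so it can be reproduced later."""
    return {
        "data_sha256": fingerprint(dataset_rows),
        "config_sha256": fingerprint(feature_config),
        "code_version": code_version,
    }


rows = [{"user_id": "u1", "skips": 3}, {"user_id": "u2", "skips": 0}]
manifest_a = build_manifest(rows, {"window_days": 7, "min_plays": 5}, "abc123")
manifest_b = build_manifest(rows, {"min_plays": 5, "window_days": 7}, "abc123")
```

Storing this manifest next to the model artifact lets you answer "which data and configuration produced this model?" without relying on memory or naming conventions.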

3. How would you optimize a data pipeline used for model training when latency and cost are both critical?

You could discuss column pruning, sampling, incremental updates of historical features and smarter storage formats. Then you should outline the risks of each optimization and how you would monitor for drift or degraded performance.

Tip: Interviewers expect you to talk about trade-offs. Make it clear where you accept some loss of completeness or recall, how you watch for negative effects, and when you would choose to pay more for accuracy.

4. Explain how you would enable near real time data access for machine learning inference.

A typical design involves combining batch-computed features with streaming updates, a serving store for low-latency lookups, and clear rules for cache invalidation and time to live settings. You should also mention how you would recover from outages.

Tip: Tie your design back to user experience. Explain how freshness, latency, and resilience affect recommendation quality and how you would monitor those properties with metrics and alerts.
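The combination of batch features, streaming updates, and time-to-live rules can be sketched as a single lookup function. The 300-second TTL and the function name are illustrative assumptions.

```python
def serve_feature(batch_value, stream_entry, now, ttl_seconds=300):
    """Prefer the streaming value while it is fresh; otherwise fall back to batch.

    `stream_entry` is a (value, updated_at) tuple, or None if nothing has
    arrived from the streaming path. Returns (value, source).
    """
    if stream_entry is not None:
        value, updated_at = stream_entry
        if now - updated_at <= ttl_seconds:
            return value, "stream"
    # Stale or missing streaming data: degrade gracefully to the batch value.
    return batch_value, "batch"


fresh = serve_feature(0.3, (0.7, 1000), now=1200)
expired = serve_feature(0.3, (0.7, 1000), now=1400)
missing = serve_feature(0.3, None, now=1400)
```

Returning the source alongside the value makes it straightforward to emit a metric for how often inference falls back to batch, which is a useful freshness signal during a streaming outage.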

5. How do you ensure ethical data use and bias mitigation in Spotify’s recommendation pipelines?

You might mention bias metrics across user groups, safeguards around sensitive attributes, and evaluation processes that include engineering, product, legal, and policy partners. The focus is on workflow, not only algorithms.

Tip: Show that you treat fairness and privacy as ongoing practices rather than one time checks. Interviewers respond well to candidates who can explain how they would design processes, dashboards and reviews that keep ethics in view for the whole team.

For advanced preparation on these types of questions, use Interview Query’s AI interviewer to simulate end to end system and ML integration scenarios and receive instant feedback on your answers.

How to prepare for a data engineer interview at Spotify

Spotify’s data engineer interviews reward strong fundamentals, clear reasoning, and curiosity about how data powers the listening experience. Use these strategies to prepare confidently for both technical and behavioral rounds.

1. Build intuition for Spotify’s data ecosystem

You don’t need to know every tool Spotify uses, but you do need to understand how modern data platforms operate at scale. Focus on how data moves from ingestion → validation → storage → consumption across batch and streaming systems.

Try this exercise:

Take a public event dataset such as the NYC Taxi Trips dataset and outline a simple pipeline:

- Batch or streaming ingestion?

- Where do validations occur?

- How do you structure analytics tables?

- What freshness or anomaly checks do you add?

Spotify interviewers want to hear your reasoning, not tool memorization.

2. Strengthen SQL and Python/Scala fundamentals

Expect SQL questions involving time windows, deduping, retention, null handling, and partition-aware querying—mirroring Spotify-scale data. For code, expect lightweight transformations in Python or Scala that reflect everyday ETL work.

Practice using a streaming-style dataset:

- Compute DAUs

- Identify top tracks per region

- Deduplicate replay events

- Join multiple large tables safely

- Explain your logic out loud

For targeted practice, explore Interview Query’s SQL challenges and Python exercises.

3. Practice modeling and pipeline design with a product mindset

Spotify favors engineers who design for long-term usability—not just functionality. When asked to model churn, subscriptions, or listening events, think about definitions, update cadence, downstream consumers, and schema evolution.

Prep exercise: Model “Premium churn” by defining:

- What churn means

- What signals matter

- Which tables you’d build

- How often they update

- Who uses them (ML, analytics, finance)

Interviewers look for product-aware design and reasoning through ambiguity.

4. Deepen practical distributed systems reasoning

Spotify will test how you think about real issues like late events, corrupted partitions, retries, scaling bottlenecks, and incremental backfills. You don’t need Paxos—you need practical intuition.

Questions to practice:

- What if events arrive out of order?

- What if a partition is corrupted?

- How do you backfill a week of missing data?

- When is a watermark too tight?

Expect live twists during the interview, so practice staying calm and adjusting your design.

5. Prepare behavioral stories aligned with Spotify’s values

Spotify values curiosity, collaboration, humility, and openness. Behavioral interviews assess how you learn, partner with others, handle ambiguity, and turn setbacks into learning.

Prepare 6–8 STAR stories that show:

- Navigating ambiguity

- Improving a workflow or process

- Collaborating across engineering, ML, and product

- Owning mistakes and iterating

- Making thoughtful trade-offs

For support structuring your stories, try Interview Query’s Behavioral Coaching.

6. Build awareness of Spotify’s business and user experience

Understanding the product shows maturity and mission alignment. Explore how data empowers recommendations, fraud detection, creator insights, ads, and subscription analytics.

Read 3–4 posts from the Spotify Engineering blog:

- Discover Weekly

- Data platform architecture

- Flyte migrations

- Podcast insights

Tie your technical reasoning to listener and creator outcomes.

7. Practice adaptive, end-to-end interview scenarios

Spotify interviews are evolving conversations. You’ll design a pipeline—then adapt it as new constraints surface (e.g., lateness, schema changes, new SLAs, cost limits).

Try this:

Start with: “Design a DAU pipeline.”

Then add constraints one at a time:

- Data arrives late

- Schema evolves mid-year

- Finance needs hourly DAU

- Cost doubles—optimize it

To simulate realistic practice, try an Interview Query Mock Interview for live feedback.

8. Communicate clearly and think out loud

Spotify’s engineering culture is deeply collaborative. Interviewers evaluate how clearly you explain trade-offs and translate complex ideas.

Prep exercise:

Record yourself explaining:

- What a watermark is

- How you’d handle late data

- How you ensure data quality

If the explanation rambles, simplify and structure it. Clear communication is a strong hiring signal.

Average Spotify Data Engineer Salary

Spotify offers competitive compensation packages that reflect its emphasis on engineering innovation and global scale. According to Levels.fyi, U.S.-based data engineers at Spotify earn between $167K and $217K per year, with compensation scaling significantly at more senior levels. Spotify rewards impact, data craftsmanship, and cross-functional collaboration across its analytics, personalization, and infrastructure teams.

- Engineer I: $167K per year ($145.9K base + $21.1K stock)

- Engineer II: $208.2K per year ($176.6K base + $30.5K stock + $1.1K bonus)

- Senior Engineer: $217.3K per year ($202.5K base + $14.8K stock)

[Charts: Average Base Salary; Average Total Compensation]

Spotify’s compensation structure rewards engineers who improve data reliability and accelerate product decisions across areas like music recommendations, advertising analytics, and content delivery optimization. Compensation reflects both technical depth and the ability to shape Spotify’s global data strategy.

FAQs

1. How many rounds are there in the Spotify Data Engineer interview?

Most candidates go through three to four main stages: a recruiter screen, a technical interview (usually via CoderPad), and a final virtual onsite loop with multiple interviews covering coding, system design, and values. Depending on the team, there may be an additional hiring manager or project discussion before the offer stage.

2. What technical skills should I focus on for the Spotify interview?

Expect a deep evaluation of SQL, Python or Scala, and distributed systems fundamentals. Spotify engineers often work with Spark, Scio, Flyte, and Google Cloud Platform, so demonstrating hands-on familiarity with these tools helps. Strong knowledge of data modeling, ETL pipeline design, and performance optimization is essential.

3. How long does the Spotify interview process take?

The typical timeline runs one to three months from application to offer. Scheduling depends on candidate availability and team matching. Spotify aims to respond promptly after each stage, and feedback is often shared within a few days of final interviews.

4. How can I show alignment with Spotify’s values during interviews?

Spotify’s five values of innovative, sincere, passionate, collaborative, and playful are core to every hiring decision. You can show alignment by sharing stories that highlight curiosity, learning from failure, and teamwork. Be authentic and specific. Spotify values sincerity over polish, so focus on genuine reflection over scripted responses.

5. What are common Spotify data engineer interview questions?

Expect a mix of SQL queries, data pipeline design problems, and behavioral questions. Examples include:

- Write a query to find duplicate streams by user and track.

- Design a pipeline to process billions of event logs daily.

- How do you ensure data quality across multiple sources?

- Tell me about a time you collaborated to solve a production issue.

Tip: Practice with realistic prompts using Interview Query’s Mock Interviews to build confidence with Spotify-style scenarios.

6. What’s Spotify’s approach to remote work?

Spotify’s Work From Anywhere program lets employees choose their location as long as collaboration stays practical. The company emphasizes flexibility and global inclusivity, so expect questions about how you stay connected and communicate across time zones.

7. How should I prepare for the final interview loop?

Review system design fundamentals, practice explaining trade-offs, and prepare for values-driven conversations. Interviewers may test your ability to diagnose data flow issues or build a scalable pipeline. Focus on communicating your reasoning clearly.

Tip: Simulate the experience with Interview Query’s AI Interviewer to practice real Spotify-style questions.

8. What is the salary range for Spotify data engineers?

Compensation varies by level and region. Based on the Levels.fyi figures in the salary section of this guide, U.S. total compensation ranges from about $167K at Engineer I to about $217K at Senior Engineer, including base salary, stock, and bonus. Spotify’s global benefits include generous parental leave, flexible share incentives, and mental health programs.

Ready to hit play on your Spotify journey?

Becoming a Spotify data engineer means joining a company that treats data as both art and science. The interview process challenges you to think at scale, collaborate creatively, and align with Spotify’s values of innovation, sincerity, and playfulness. Each round is designed to uncover how you approach ambiguity, communicate your reasoning, and build systems that empower millions of creators and listeners.

Before your next round, sharpen your skills with the data engineering learning path, built by real data engineers who’ve been in the interview seat. Interview Query’s coaching program also offers tailored feedback and one-on-one guidance to help you perfect your responses to both technical and behavioral questions. With focused preparation and genuine curiosity, you’ll be ready to take center stage in Spotify’s data-driven band.