Deloitte Data Scientist Interview Guide: Process, Questions, and Prep

Introduction

A Deloitte data scientist works at the intersection of advanced analytics, machine learning, and client decision-making, helping organizations turn messy, high-volume data into deployable solutions. Deloitte employs more than 450,000 professionals globally and continues to expand its AI and analytics capabilities as clients invest heavily in automation, cloud migration, and data-driven transformation. Data scientists at Deloitte support projects across financial services, healthcare, public sector, and technology, where models are expected to move beyond experimentation and deliver measurable business impact.

This context shapes the Deloitte data scientist interview. The role goes beyond building models in isolation. Interviewers evaluate how candidates reason through ambiguous business problems, design scalable machine learning solutions, communicate trade-offs, and explain technical decisions to non-technical stakeholders. In this guide, we break down how the Deloitte data scientist interview process works, what each stage is designed to assess, and how candidates should prepare for the blend of coding depth, statistical reasoning, and client-ready communication Deloitte expects.

Deloitte Data Scientist Interview Process

The Deloitte data scientist interview process is designed to assess technical depth, structured problem-solving, and the ability to translate machine learning output into business decisions. Rather than testing algorithms in isolation, Deloitte evaluates how candidates work through real-world constraints such as imperfect data, unclear objectives, and stakeholder trade-offs. The exact structure varies by geography and service line, but most candidates progress through several rounds over a few weeks.

Many candidates prepare by practicing applied problems from Interview Query’s data scientist interview questions and pressure-testing their explanations through mock interviews, which mirror consulting-style follow-ups.

Interview Process Overview

Most candidates move through an initial screen, a technical assessment or take-home exercise, one or two technical interviews, and a final behavioral or case discussion with senior leaders. Compared with product-focused data science interviews, Deloitte places heavier emphasis on end-to-end thinking, from data sourcing and modeling through deployment and business impact.

Candidates often rehearse this progression using real-world challenges that require them to explain assumptions, modeling choices, and trade-offs under questioning.

Interview Process Summary

| Interview stage | What happens |

|---|---|

| Recruiter or HR screen | Background, motivation, and role alignment |

| Technical assessment or take-home | Coding, data manipulation, and modeling fundamentals |

| Technical interviews | Machine learning theory, Python/SQL, and applied problem-solving |

| Behavioral or business case | Communication, judgment, and client-facing reasoning |

| Partner or director round | Long-term fit, maturity, and impact orientation |

Recruiter Or Talent Acquisition Screen

The recruiter screen is usually a short conversation focused on confirming your background, interest in Deloitte’s data science work, and alignment with the role. Interviewers assess whether you understand the consulting context and can articulate why you want to apply data science in client-facing environments rather than purely internal product teams.

Many candidates refine this narrative through mock interviews to avoid vague or overly technical explanations.

Tip: Be prepared to explain why you are interested in applied, client-driven data science, not just model building.

Technical Assessment Or Take-Home Exercise

Some candidates complete an online assessment or take-home case early in the process. These exercises typically involve data cleaning, exploratory analysis, feature engineering, and a simple model or insight-driven recommendation. The goal is to evaluate how you structure analysis and communicate findings, not just raw accuracy.

Practicing similar workflows using Interview Query’s data science challenges helps candidates stay disciplined when working independently.

Tip: Clearly document assumptions and trade-offs. Deloitte values clarity of reasoning as much as technical correctness.

Technical Interviews

Technical interviews focus on machine learning fundamentals, statistics, and coding in Python or SQL. Interviewers often ask you to explain concepts such as bias–variance trade-offs, model evaluation, feature selection, and data leakage, then connect those ideas to real client scenarios. Coding questions tend to emphasize data manipulation and logic over obscure syntax.

Working through applied problems from the data scientist interview question bank helps candidates practice explaining their approach step by step.

Tip: When discussing models, explain why you chose an approach and when it would fail, not just how it works.

Behavioral And Business Case Rounds

Later rounds assess how you communicate insights, manage ambiguity, and influence decisions without direct authority. Business case discussions often resemble real Deloitte engagements, where you must explain a technical solution in plain language and tie it back to business outcomes.

Candidates often prepare for this stage by simulating stakeholder conversations in mock interviews, where interviewers challenge assumptions and push for clarity.

Tip: Anchor explanations around decisions and impact rather than technical implementation details.

Partner Or Director Round

The final round typically involves a conversation with a Partner or Director. This discussion focuses on judgment, communication maturity, and long-term fit within Deloitte’s analytics practice. Interviewers may revisit earlier projects and ask higher-level questions about risk, scalability, and client trust.

Tip: Show that you can think beyond individual models and understand how data science supports broader transformation efforts.

Deloitte Data Scientist Interview Questions

Deloitte data scientist interview questions assess how well you solve ambiguous client problems with data, communicate trade-offs clearly, and deliver analyses that can hold up in real delivery environments. Interviewers care less about “perfect” answers and more about whether you can structure the problem, make sensible assumptions, and explain your reasoning in a way a client can act on.

To prepare efficiently, many candidates practice on Interview Query’s data scientist interview questions and simulate follow-up pressure through mock interviews.

Technical And Machine Learning Fundamentals

These questions assess your ability to code, work with real datasets, and explain modeling decisions under realistic constraints.

How would you interpret coefficients of logistic regression for categorical and boolean variables?

Deloitte asks this to test whether you can translate a model into stakeholder-friendly implications, especially when features are encoded and interpretation can be misleading.

Tip: Explain the coefficient as a change in log-odds, then convert to an odds ratio, and explicitly call out how one-hot encoding changes the baseline.

-

This tests practical data wrangling and whether you can choose a defensible imputation approach without overcomplicating the solution.

Tip: Mention when median is preferable to mean (outliers), and call out that you would validate impact on downstream metrics or model performance.

Build a random forest model from scratch with specific conditions.

Deloitte may use questions like this to assess your comfort with core ML mechanics and your ability to reason about model structure, not just use libraries.

Tip: Focus on explaining bagging, feature subsampling, and majority voting, and discuss trade-offs like variance reduction and interpretability.

-

This evaluates algorithmic thinking and clean edge-case handling, which matters when building reliable pipelines and services.

Tip: Use binary search for the insertion point, then expand outward while guarding bounds.

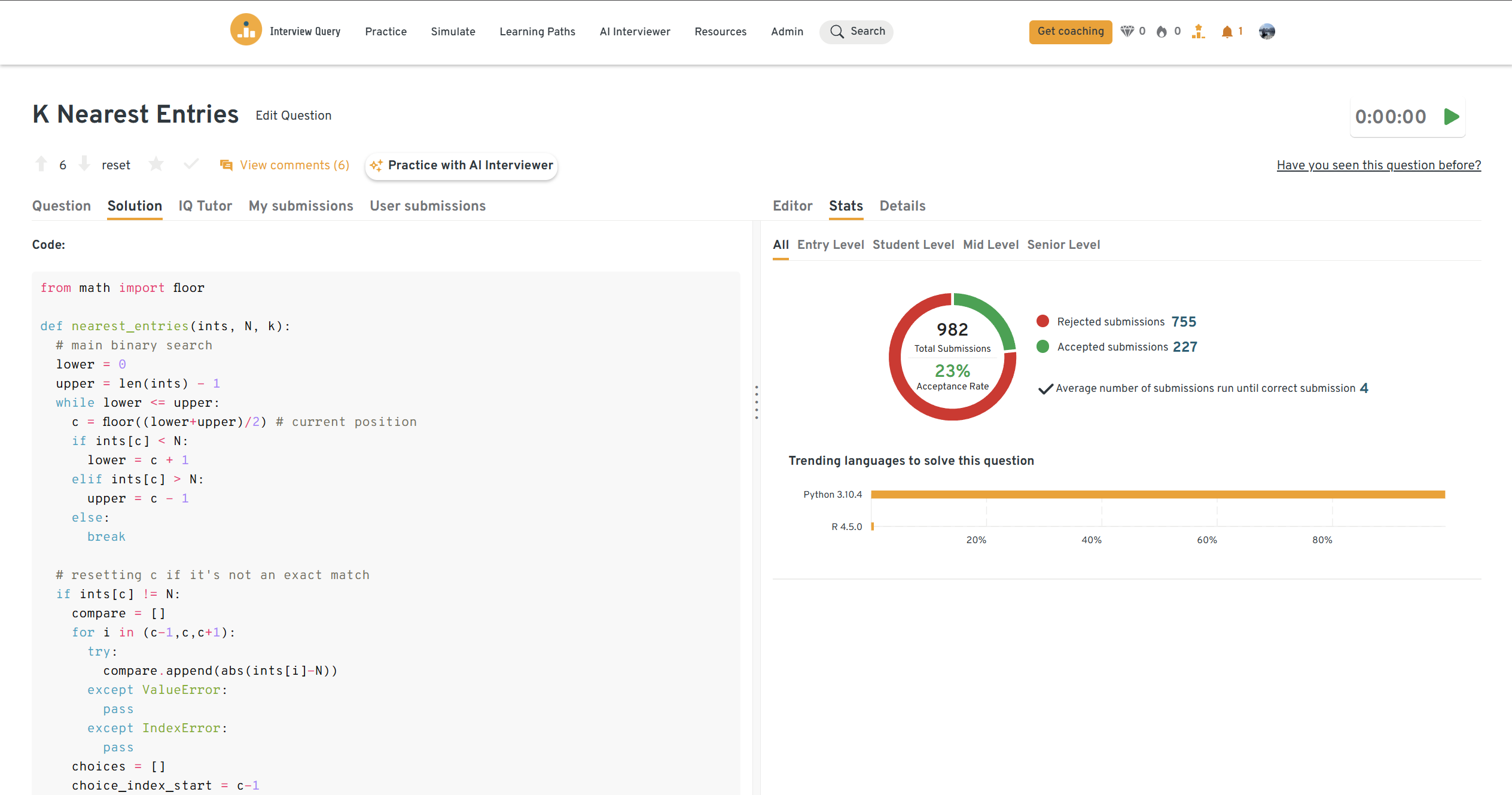

You can practice this exact problem on the Interview Query dashboard, shown below. The platform lets you write and test SQL queries, view accepted solutions, and compare your performance with thousands of other learners. Features like AI coaching, submission stats, and language breakdowns help you identify areas to improve and prepare more effectively for data interviews at scale.

Case Study And Business Insight Scenarios

These questions reflect Deloitte’s consulting context, where you are expected to frame the problem, define success metrics, and design an analysis that leads to a decision.

-

This tests experimentation fundamentals and whether you can design a clean measurement plan that stands up to scrutiny.

Tip: Start by defining the primary metric, guardrails, and minimum detectable effect before touching the statistics.

How would you assess the validity of the result in an A/B test with a .04 p-value?

Deloitte asks this to see if you understand how false positives happen in the real world and whether you can pressure-test findings before recommending action.

Tip: Ask about peeking, multiple comparisons, sample ratio mismatch, and whether the effect size is practically meaningful.

How would you approach designing a test for a price increase in a B2B SAAS company?

This evaluates how you balance statistical rigor with business risk, operational constraints, and stakeholder realities.

Tip: Discuss segmentation (new vs existing customers), revenue impact vs churn risk, and why you might prefer phased rollout or quasi-experimental designs.

-

This tests whether you can connect modeling to real revenue levers and explain what data you would need to operationalize pricing.

Tip: Tie your approach to elasticity, constraints, and experimentation, then outline the minimum dataset you’d request to proceed.

Behavioral And Client Communication

These questions assess how you operate in client-facing, cross-functional environments, where communication quality and judgment matter as much as technical skill.

Tell me about a project in which you had to clean and organize a large dataset.

Deloitte looks for evidence you can handle messy, high-stakes data and still deliver something decision-ready under time pressure.

Tip: Quantify impact (e.g., reduced missingness, improved model lift, faster reporting cycle) and name the checks you used to prevent silent errors.

Sample answer: In a prior analytics project, I inherited a dataset with inconsistent definitions across sources and frequent missing values. I profiled the data, standardized key fields with a data dictionary, and built validation checks to catch schema drift and outliers before modeling. After cleaning and documenting the pipeline, the team reduced reporting rework and improved model stability, which helped stakeholders trust the outputs enough to use them in weekly decision-making.

Describe a data project you worked on. What were some of the challenges you faced?

This tests whether you can explain a project end-to-end, including trade-offs, constraints, and how your work connected to outcomes.

Tip: Anchor your story around one hard decision you made (scope, metric definition, model choice) and the measurable result.

Sample answer: I worked on a churn prediction project where the initial goal was vague and the label definition was contested across teams. I aligned stakeholders on a single churn definition tied to business action, then built a baseline model and iterated feature engineering based on error analysis. The final deliverable included a prioritized driver analysis and a playbook for targeting, which led to a measurable improvement in retention for the highest-risk segment.

-

Deloitte evaluates whether you can adapt your communication style to executives, SMEs, and technical teams without losing accuracy.

Tip: Show how you reframed from “methods” to “decision, impact, and risk,” and how you validated understanding.

Sample answer: I once presented a model update using technical metrics, and the stakeholder was unconvinced because they could not connect it to business risk. I restructured the narrative around the decision at hand, translated performance into expected impact, and used a simple visual to show trade-offs between precision and coverage. After aligning on what “good” meant for the business, we agreed on a deployment threshold and moved forward with a rollout plan.

How do you handle shifting priorities when working on multiple projects?

This tests delivery judgment in a consulting environment where requests change quickly and timelines are non-negotiable.

Tip: Explain how you re-scope proactively and communicate trade-offs early, rather than trying to “do everything.”

Sample answer: When priorities shift, I first clarify what decision deadline is driving the change and what the minimum viable output is. I then re-scope deliverables into must-haves and nice-to-haves, communicate trade-offs to stakeholders, and set a revised timeline with checkpoints. This approach helped me keep multiple workstreams moving without sacrificing quality or surprising stakeholders late.

You might think that behavioral interview questions are the least important, but they can quietly cost you the entire interview. In this video, Interview Query co-founder Jay Feng breaks down the most common behavioral questions and offers a clean framework for answering them effectively.

~~~~

How To Prepare For A Deloitte Data Scientist Interview

Preparing for a Deloitte data scientist interview requires more than refreshing algorithms or memorizing definitions. Deloitte evaluates how you apply data science in messy, real client environments where objectives evolve, data is imperfect, and recommendations must stand up to executive scrutiny. Strong candidates prepare by combining technical depth with clear, decision-oriented communication.

Below are focused preparation strategies that reflect how Deloitte actually evaluates data scientists.

Build End-To-End Thinking, Not Just Models

Deloitte data scientists are expected to own problems from framing through recommendation. Interviewers often probe how you defined the problem, chose metrics, handled data limitations, and validated outcomes, not just which algorithm you used.

Practice walking through projects end to end using applied prompts from data scientist interview questions, making sure you can clearly explain assumptions, trade-offs, and next steps.

Tip: When practicing, always ask yourself, “What decision does this analysis enable?” and build your explanation around that.

Practice Explaining Technical Work to Non-Technical Stakeholders

Many interview questions require you to translate machine learning outputs into business language. Deloitte values candidates who can explain why a model matters, when it should be trusted, and what risks remain, without relying on jargon.

Refine this skill by pressure-testing explanations in mock interviews, where interviewers challenge clarity and push for decision-ready summaries.

Tip: Lead with the recommendation and impact, then explain the supporting analysis only as needed.

Sharpen Practical Coding and Data Wrangling Skills

Technical rounds emphasize Python, SQL, and real-world data manipulation rather than abstract puzzles. You should be comfortable cleaning datasets, handling edge cases, and writing readable, defensible code under time pressure.

Use Interview Query’s data science challenges to practice full workflows, from exploratory analysis to basic modeling and validation.

Tip: Prioritize correctness and clarity over clever shortcuts. Interviewers care about reliability and reasoning.

Strengthen Core Machine Learning and Statistical Foundations

Deloitte expects solid understanding of core ML concepts such as bias–variance trade-offs, model evaluation, feature engineering, and experiment design. Interviewers often test whether you can explain when a method works and when it fails.

Revisit these fundamentals through scenario-based prompts in the data scientist question bank, focusing on explanation quality rather than memorization.

Tip: Practice answering “why not” questions, such as why a simpler model might be preferable in a client context.

Prepare Behavioral Stories That Show Judgment and Impact

Behavioral interviews at Deloitte focus on ambiguity, stakeholder management, and delivery under constraints. Strong answers show how you influenced decisions, managed trade-offs, and delivered outcomes, not just tasks completed.

Rehearse behavioral scenarios using data science behavioral interview questions and refine them through live mock interviews.

Tip: Anchor each story around a decision you made and quantify the result whenever possible.

Simulate Real Interview Conditions Before Interview Day

The fastest improvement comes from realistic practice. Timed coding, case-style discussions, and live feedback reveal gaps that passive studying will not.

Combine applied case-style challenges with live mock interviews to mirror how Deloitte evaluates candidates across technical, business, and communication dimensions.

Tip: Practice thinking out loud. Deloitte interviewers evaluate how you reason, not just where you land.

Role Overview: Deloitte Data Scientist

A Deloitte data scientist works at the intersection of advanced analytics, machine learning, and client delivery. The role focuses on translating ambiguous business problems into deployable data science solutions that can scale across organizations. Unlike purely product-based data science roles, Deloitte data scientists operate in client-facing environments, where success depends as much on judgment and communication as on technical depth.

Day to day, Deloitte data scientists partner closely with consultants, engineers, and client stakeholders to define objectives, assess data readiness, build models, and translate results into decisions that guide transformation initiatives.

Core Responsibilities

- Problem framing and hypothesis development: Work with clients to clarify business questions, define success metrics, and translate high-level goals into analytical hypotheses.

- Data exploration and feature engineering: Clean, join, and explore structured and unstructured datasets, identify quality issues, and engineer features that reflect real business drivers.

- Model development and evaluation: Build and validate statistical and machine learning models, such as regression, tree-based methods, NLP, or time-series forecasting, with a focus on interpretability and robustness.

- Deployment and lifecycle support: Collaborate with engineers to operationalize models, support monitoring, and iterate based on performance and changing client needs.

- Insight communication: Present findings and recommendations to technical and non-technical stakeholders, explaining trade-offs, risks, and expected impact clearly.

- Delivery and collaboration: Contribute within agile or hybrid delivery teams, balancing analytical depth with timelines, governance, and client expectations.

Candidates preparing for this role benefit from practicing applied data science scenarios that emphasize ambiguity, trade-offs, and stakeholder alignment rather than isolated modeling exercises.

Culture And What Makes Deloitte Different

Deloitte’s data science culture is shaped by its consulting model. Data scientists are expected to be technically strong, but also adaptable, client-ready, and comfortable operating in ambiguous environments.

What Deloitte Interviewers Look For

- Structured thinkers: Candidates who can impose clarity on under-defined problems and explain their reasoning step by step.

- Client-ready communication: The ability to translate complex analysis into clear, decision-oriented narratives for non-technical audiences.

- Judgment under constraints: Comfort making trade-offs when data, time, or scope is limited, while managing risk responsibly.

- Collaboration and ownership: Willingness to work across disciplines and take responsibility for outcomes without formal authority.

- Learning orientation: Curiosity about new analytics techniques, tooling, and industries, paired with the ability to apply them pragmatically.

Deloitte data scientists often rotate across industries and problem types, which rewards adaptability and strong fundamentals over narrow specialization. Candidates who practice articulating business impact and stakeholder trade-offs through realistic data science interview questions and mock interviews are better positioned to demonstrate this fit clearly.

Average Deloitte Data Scientist Salary

Deloitte data scientist compensation varies by level and service line, with U.S.-based roles following a structured consulting ladder. The benchmarks below reflect annual total compensation reported on Levels.fyi and should be used as directional guidance when evaluating offers or preparing for leveling discussions.

Average Annual Compensation by Level (United States)

| Level | Title | Total (Annual) | Base (Annual) | Stock | Bonus (Annual) |

|---|---|---|---|---|---|

| L1 | Analyst | ~$88K | ~$86K | $0 | ~$1.1K |

| L2 | Consultant | ~$108K | ~$108K | $0 | ~$3.1K |

| L3 | Senior Consultant | ~$144K | ~$132K | $0 | ~$8.4K |

| L4 | Manager | ~$168K | ~$156K | $0 | ~$10.3K |

| L5 | Senior Manager | ~$252K | ~$228K | $0 | ~$24K |

| L6 | Director / Partner track | ~$132K | ~$120K | $0 | ~$6.5K |

What To Know About Deloitte Data Scientist Compensation

- Base-heavy structure: Deloitte data scientist roles are primarily base-salary driven, with little to no equity across most levels.

- Bonus varies meaningfully: Bonus amounts depend on performance ratings, utilization, and firm performance, which explains variation within the same level.

- Promotion matters more than tenure: The largest compensation step-ups typically occur at promotion points (e.g., Senior Consultant → Manager).

- Service line and geography matter: Compensation can differ depending on whether the role sits within Consulting, Strategy & Analytics, or Technology, as well as local market adjustments.

Average Base Salary

Average Total Compensation

If you are benchmarking an offer or preparing for leveling conversations, it helps to practice end-to-end data science interview questions and simulate evaluation scenarios through mock interviews, since leveling outcomes often influence where you land within these ranges.

FAQs

How technical is the Deloitte data scientist interview?

The interview is technically rigorous, but not in a “pure research” sense. You are expected to demonstrate solid foundations in Python, SQL, statistics, and machine learning, while also explaining why a model or approach makes sense in a business context. Many questions combine technical reasoning with judgment, which is why candidates practice both coding and explanation using data scientist interview questions and mock interviews.

What types of machine learning questions does Deloitte usually ask?

Most Deloitte data scientist interviews focus on core applied concepts rather than cutting-edge research. Common topics include bias–variance trade-offs, model evaluation, feature engineering, experiment design, and handling messy or incomplete data. Interviewers often probe when a simpler model might outperform a complex one, especially in client-facing environments.

Does Deloitte require strong business or consulting experience for data scientists?

Prior consulting experience is not required. Deloitte hires data scientists from industry, startups, and academic backgrounds. What matters most is your ability to translate technical work into decisions, manage ambiguity, and communicate clearly with stakeholders. Candidates without consulting backgrounds often perform well if they prepare scenario-based explanations and behavioral stories through mock interviews.

Are take-home assignments common in Deloitte data scientist interviews?

Some service lines include a take-home case or technical exercise early in the process. These typically test data cleaning, exploratory analysis, modeling choices, and how you communicate findings. Accuracy matters, but clarity of assumptions and recommendations matters more. Practicing full workflows through data science challenges helps simulate this experience.

What skills differentiate strong Deloitte data scientist candidates?

Strong candidates consistently anchor their answers around decisions and impact. Instead of focusing only on algorithms, they explain trade-offs, risks, and next steps in plain language. They also show comfort working with imperfect data and shifting requirements, which mirrors real Deloitte client engagements.

Final Thoughts: The Consulting Lens Behind Deloitte’s Data Scientist Interviews

Deloitte does not hire data scientists to build models in isolation. It hires people who can walk into an ambiguous client problem, impose structure, make defensible technical choices, and explain those choices clearly to decision-makers. The strongest candidates consistently show how their analysis leads to action, how they manage uncertainty, and how they balance rigor with delivery timelines. That mindset, more than any specific algorithm, is what Deloitte interviewers are testing for across technical, case, and behavioral rounds.

If you want to prepare the way Deloitte actually evaluates candidates, start with Deloitte-relevant data scientist interview questions that force you to explain trade-offs out loud. Reinforce that practice with real-world data science challenges that mirror client constraints like imperfect data and shifting goals. Finally, pressure-test your communication through mock data science interviews, where follow-up questions reflect the same consulting-style scrutiny used by Deloitte interviewers.