Anthropic Research Scientist Interview Guide (2025): Process, Questions & AI Safety

Introduction

If you’re preparing for an Anthropic research scientist interview, you’re stepping into one of the most competitive research roles in AI. Anthropic is growing quickly, with over $7 billion raised since its founding and a mission to make advanced AI systems safe, reliable, and interpretable. Research scientists here are not just running experiments. They are shaping how the next generation of AI models is designed, tested, and aligned with human values.

In this guide, you’ll learn exactly what to expect in the process. We’ll cover the different interview rounds, highlight examples of Anthropic research scientist interview questions, and explain how Anthropic evaluates technical depth and safety thinking. You’ll also see how values-driven assessments, including Anthropic AI safety interview questions, are built into the hiring process. By the end, you’ll know how to approach your preparation with both confidence and clarity.

Role overview & culture

As a research scientist at Anthropic, you’ll spend your days designing experiments, testing model architectures, and exploring how to make AI systems safer and more reliable. The role isn’t just about publishing papers. It’s about shaping how advanced AI is built and ensuring that safety keeps pace with capability. In practice, this means collaborating with engineers to train models, running interpretability studies, and presenting your findings to both internal teams and the broader research community.

Anthropic’s culture is built around rigorous, mission-driven science. The company has published more than 40 peer-reviewed papers and reports in the past few years, with work cited across the AI safety field. Teams are small, so every research scientist has a visible impact. You’ll work alongside experts in alignment, interpretability, and scaling laws, with the expectation that your research not only pushes the frontier but also adheres to the company’s safety-first principles.

Why this role at Anthropic?

Many researchers are drawn to Anthropic because the work is visible and influential. Publications from Anthropic are frequently discussed in academic conferences, cited in policy debates, and covered in industry media. As a research scientist, your contributions are not only shared internally but often shape how the broader AI community thinks about safety and alignment.

The role also offers a clear path for growth. Early-career scientists gain hands-on experience designing experiments and running large-scale training pipelines, while senior researchers often lead new safety initiatives or mentor cross-functional teams. The scale of the work is another advantage: Anthropic’s models, such as Claude, serve millions of users, meaning your research can be tested in both controlled and real-world environments. For many candidates, this combination of research depth, global reach, and mission-driven purpose is what makes preparing for Anthropic research scientist interview questions so important.

What is the interview process like for a research scientist role at Anthropic?

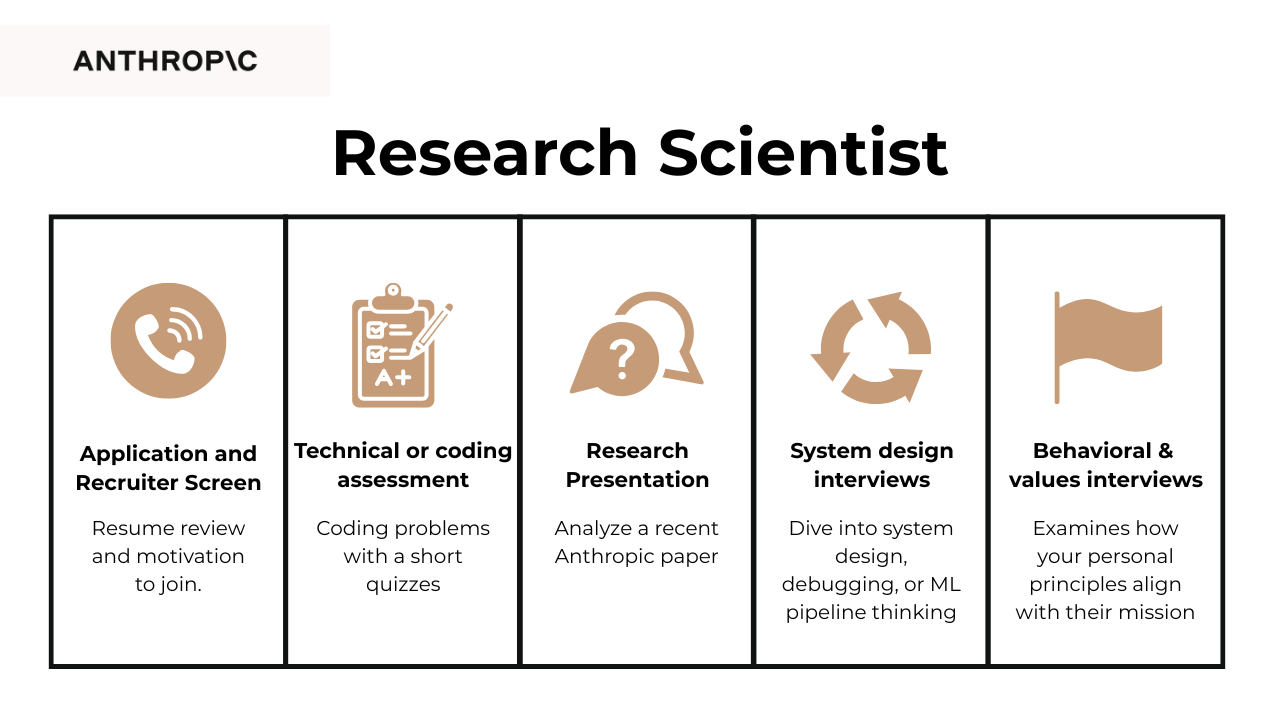

Anthropic’s interview process is structured, rigorous, and intentionally reflective of the company’s mission. Each stage tests a mix of research depth, technical ability, and values alignment. Expect questions that explore how you design experiments, reason about safety, and communicate complex ideas clearly. The process typically includes a recruiter screen, multiple technical interviews, a research presentation, system design and ML theory discussions, and a final mission alignment round.

Application & Recruiter Screen

This 30–45 minute call focuses on your background, research focus, and motivation for joining Anthropic. Recruiters want to understand whether your work fits naturally into Anthropic’s core areas (alignment, interpretability, and robustness) and whether your personal research philosophy aligns with the company’s long-term mission of building safe, reliable AI systems.

A strong candidate uses this conversation to show curiosity about Anthropic’s approach and articulate how their experience addresses a current research challenge. Clarity, humility, and enthusiasm for safety-driven research leave the best impression.

Tip: Prepare one concise story that connects your past research to Anthropic’s mission. For instance, explain how your experience with model interpretability or robustness relates to the company’s work on Constitutional AI or scalable oversight.

Technical & Coding Rounds

These interviews assess how you reason through algorithms, debug code, and translate abstract research concepts into working systems. You’ll likely solve problems in Python involving data structures, optimization, or applied ML logic, with an emphasis on writing clear, reproducible code.

Anthropic’s interviewers care as much about reasoning as correctness. They want to see how you identify assumptions, explain trade-offs, and collaborate during problem-solving. The best candidates treat these sessions as scientific discussions rather than pure coding tests, narrating their thought process throughout.

Tip: Practice timed problems, but focus on verbalizing each step. Use simple phrases like “I’ll test this edge case next” or “Here’s why I’m choosing this data structure.” This mirrors the collaborative research environment Anthropic expects you to thrive in.

Research Presentation/Case Study

In this one-hour session, you’ll either present your own research or analyze a recent Anthropic paper. The goal is to evaluate how you form hypotheses, design experiments, and connect findings to broader safety implications. Successful candidates avoid just reciting results; they demonstrate independent thinking, acknowledge limitations, and link their work to open questions in AI alignment.

Interviewers also observe how you communicate complex ideas to both technical and non-technical audiences. Your ability to make nuanced topics accessible is key, since Anthropic’s researchers often collaborate across engineering, policy, and ethics teams.

Tip: Structure your presentation around “Problem → Approach → Result → Implication.” Close with a short reflection on what you’d explore next if you had more time. This shows both scientific curiosity and forward-looking research thinking.

System Design & ML Theory Rounds

This round evaluates your understanding of large-scale model architectures and your ability to reason about trade-offs between safety, efficiency, and capability. You might be asked to design a system that detects adversarial inputs, outline how to test model robustness, or explain how scaling laws influence safety evaluation.

Anthropic uses this session to assess whether you can approach technical challenges holistically, balancing performance with safety principles and being explicit about the reasoning behind each decision. Clear, structured explanations matter as much as technical correctness.

Tip: Start by clarifying the goal and constraints before diving into solutions. Say, “Let’s define what ‘robustness’ means for this scenario,” or “If the system’s failure mode is X, we’ll need safeguards like Y.” This framing signals the disciplined reasoning Anthropic values in its research culture.

Values & Mission Alignment

This final round explores how you think about AI ethics, long-term risk, and the responsible scaling of advanced systems. Questions often touch on interpretability, alignment, and the social implications of AI deployment. The focus is not on being “right,” but on showing maturity of thought, self-awareness, and genuine alignment with Anthropic’s philosophy of safe progress.

Interviewers will want to understand how you reason about trade-offs, particularly how you reconcile pushing the frontier of AI capabilities with keeping safety and transparency intact. Ideal candidates express humility about what is knowable, a commitment to open inquiry, and an understanding that safety is a collaborative, evolving goal.

Tip: Reflect on your personal stance toward AI safety before this round. Read Anthropic’s Core Views on AI Safety and identify one section that resonates with your experience. Referencing it thoughtfully in your response shows sincerity and preparation.

Hiring Committee & Offer

Once all interviews are complete, written feedback from every interviewer is compiled and reviewed by a cross-functional hiring committee. The committee assesses your performance holistically, including technical ability, research creativity, communication, and values alignment, and determines the final decision and level calibration.

Anthropic’s offers typically include base salary, equity, and research stipends for conference participation or independent exploration. Candidates who reach this stage should expect a transparent discussion around compensation and scope.

Negotiation Tip: Frame your negotiation around long-term impact, not just numbers. For instance, express how you plan to contribute to the research roadmap or lead cross-lab collaborations. Anthropic tends to respond well to candidates who align requests with mutual growth. You can also inquire about equity refresh cycles and funding support for publications or conferences, which can meaningfully enhance the total package.

What questions are asked in an Anthropic research scientist interview?

When you reach the interview rounds, the types of questions you face are designed to test not only your technical expertise but also how you think about safety and collaboration. Many candidates look up Anthropic research scientist interview questions to understand the mix of coding, research, and values-based discussions that come up. These categories give you a good idea of where to focus your preparation.

Coding and technical questions

These questions check your ability to translate theory into working code. You may be asked to work through data preprocessing tasks, optimize algorithms, or debug machine learning pipelines in real time. The goal is not only to test correctness but also to see how clearly you communicate your reasoning under time pressure. Interviewers want to understand how you approach problems step by step, since this mirrors the collaborative coding environment at Anthropic.

How would you write a query to find the top five pairs of products most frequently purchased together by the same user?

Anthropic looks for research scientists who can blend statistical intuition with engineering rigor, and this question tests that. You should treat this as a scalable co-occurrence problem: deduplicate user-product pairs, form combinations, and aggregate them efficiently. You should justify how using LEAST() and GREATEST() avoids duplicate counting, and discuss how to scale this logic to billions of rows. In an interview, connect your reasoning to recommendation systems, correlation discovery, or causality analysis, and show how your SQL logic reflects a data-driven mindset.
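As a rough illustration of the co-occurrence approach above, the sketch below runs the query against an in-memory SQLite database from Python. The purchases table, its columns, and the sample rows are assumptions made up for the example. SQLite has no LEAST() or GREATEST(), so the sketch fixes a canonical pair order with a.product_id < b.product_id in the join, which serves the same purpose of counting each pair only once.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE purchases (user_id INTEGER, product_id INTEGER);  -- hypothetical schema
INSERT INTO purchases VALUES
  (1, 10), (1, 20), (1, 20), (1, 30),   -- user 1 bought product 20 twice
  (2, 20), (2, 10),
  (3, 10), (3, 30);
""")

query = """
WITH user_products AS (
    -- Deduplicate so repeat purchases by one user count once.
    SELECT DISTINCT user_id, product_id FROM purchases
)
SELECT a.product_id AS p1,
       b.product_id AS p2,
       COUNT(*)     AS num_users
FROM user_products a
JOIN user_products b
  ON a.user_id = b.user_id
 AND a.product_id < b.product_id        -- canonical order: each pair appears once
GROUP BY a.product_id, b.product_id
ORDER BY num_users DESC, p1, p2
LIMIT 5;
"""
top_pairs = conn.execute(query).fetchall()
print(top_pairs)
```

In a dialect that does provide LEAST() and GREATEST(), the same normalization can be done in the SELECT list instead of the join condition; which one scales better would depend on the engine and data layout.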

Anthropic values precision in temporal reasoning and data validation. You should self-join the subscriptions table on user_id, ensuring both rows have completed (end_date not null) subscriptions. Then check for overlap using the condition a.start_date <= b.end_date AND b.start_date <= a.end_date, while excluding the same row with a primary key inequality. You should aggregate results to return one Boolean per user, marking whether overlap exists. Explain how this relates to detecting data integrity issues, double-billing, or overlapping experiment periods, which Anthropic engineers handle in production systems.
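The overlap check described above can be sketched as follows, again using Python's sqlite3 module. The id column, the ISO-formatted text dates, and the sample rows are assumptions for illustration. A LEFT JOIN is used here so that users with completed subscriptions but no overlap still get a row with a 0 flag.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE subscriptions (
    id INTEGER PRIMARY KEY, user_id INTEGER, start_date TEXT, end_date TEXT
);  -- hypothetical schema; dates stored as ISO strings so they compare correctly
INSERT INTO subscriptions VALUES
  (1, 1, '2024-01-01', '2024-01-31'),
  (2, 1, '2024-01-15', '2024-02-15'),   -- overlaps row 1
  (3, 2, '2024-01-01', '2024-01-31'),
  (4, 2, '2024-02-01', '2024-02-28'),   -- back to back, no overlap
  (5, 3, '2024-03-01', NULL);           -- not completed, ignored
""")

query = """
SELECT a.user_id,
       MAX(CASE WHEN b.id IS NOT NULL THEN 1 ELSE 0 END) AS has_overlap
FROM subscriptions a
LEFT JOIN subscriptions b
  ON a.user_id = b.user_id
 AND a.id <> b.id                       -- never compare a row with itself
 AND b.end_date IS NOT NULL
 AND a.start_date <= b.end_date
 AND b.start_date <= a.end_date
WHERE a.end_date IS NOT NULL
GROUP BY a.user_id
ORDER BY a.user_id;
"""
overlaps = conn.execute(query).fetchall()
print(overlaps)
```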

Anthropic values fairness and interpretability in data insights; this question tests both. You should join employees and departments, then group by department and use conditional aggregation: AVG(CASE WHEN salary > 100000 THEN 1 ELSE 0 END) to compute the high-earner ratio. You should use HAVING COUNT(*) >= 10 to filter departments and then rank them by that ratio in descending order. Talk about why proportion is a better fairness measure than raw counts, and how you’d scale or visualize such insights to audit bias in salary data.
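A minimal sketch of the conditional-aggregation query above, run on SQLite from Python. The schema, department names, and generated salaries are invented for the example. The CASE expression returns 1.0 and 0.0 rather than integers as a precaution, since some engines truncate the average of integer values.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE departments (id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE employees (
    id INTEGER PRIMARY KEY, department_id INTEGER, salary INTEGER
);
INSERT INTO departments VALUES (1, 'Research'), (2, 'Policy'), (3, 'Legal');
""")

# Hypothetical data: (department_id, headcount, number earning above 100k)
spec = [(1, 10, 3), (2, 12, 6), (3, 2, 2)]
rows = []
for dept_id, headcount, high in spec:
    for i in range(headcount):
        rows.append((dept_id, 150000 if i < high else 80000))
conn.executemany(
    "INSERT INTO employees (department_id, salary) VALUES (?, ?)", rows
)

query = """
SELECT d.name,
       AVG(CASE WHEN e.salary > 100000 THEN 1.0 ELSE 0.0 END) AS high_earner_ratio
FROM employees e
JOIN departments d ON d.id = e.department_id
GROUP BY d.id, d.name
HAVING COUNT(*) >= 10                   -- drops the 2-person department
ORDER BY high_earner_ratio DESC;
"""
ratios = conn.execute(query).fetchall()
print(ratios)
```

The two-person department has a 100 percent high-earner share but is filtered out, which is the point of the minimum-size threshold: ratios from very small groups are too noisy to rank.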

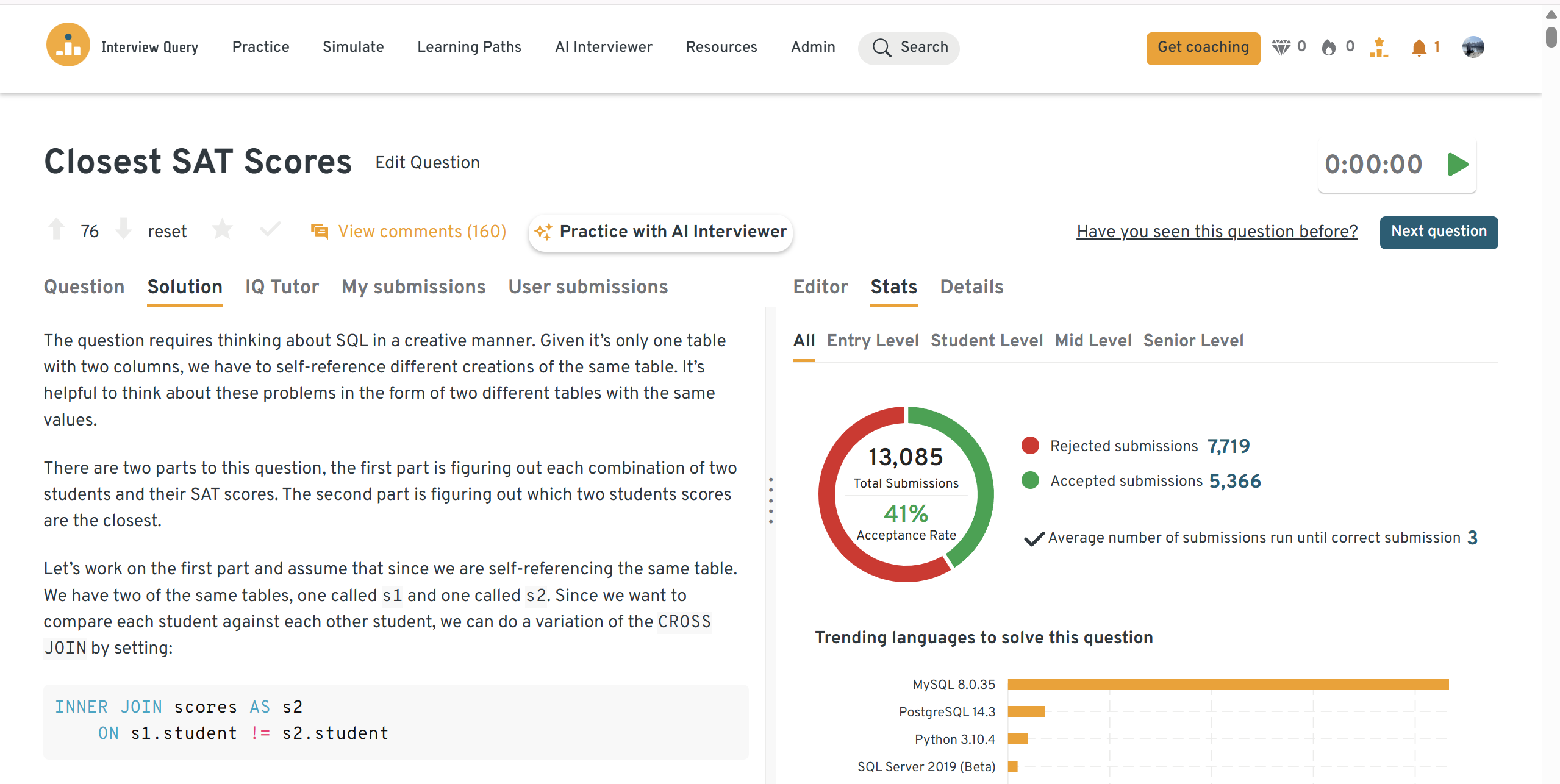

Anthropic seeks researchers who can reason through similarity and ranking with clarity. You should self-join the students table (with s1.id < s2.id) to compare every unique pair, calculate the absolute score difference, and order the results by that difference in ascending order. You should break ties by sorting names alphabetically and return only the top result. When explaining, connect this to nearest-neighbor problems, clustering, or distance-based reasoning, showing that you can bridge SQL logic with ML intuition.
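The closest-pair logic above might look like this in practice. The students schema and the four sample rows are assumptions; the data is chosen so that two pairs tie on score difference and the alphabetical tie-break decides the winner.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE students (id INTEGER PRIMARY KEY, name TEXT, score INTEGER);
INSERT INTO students VALUES
  (1, 'Ana', 90), (2, 'Ben', 72), (3, 'Cal', 88), (4, 'Dee', 70);
""")

query = """
SELECT s1.name AS student_a,
       s2.name AS student_b,
       ABS(s1.score - s2.score) AS score_diff
FROM students s1
JOIN students s2
  ON s1.id < s2.id                      -- each unordered pair exactly once
ORDER BY score_diff ASC, student_a ASC, student_b ASC
LIMIT 1;
"""
closest = conn.execute(query).fetchone()
print(closest)
```

The self-join compares every pair, so it grows quadratically with the number of students. For large tables, one alternative worth mentioning is sorting by score and comparing only adjacent rows with a window function such as LAG().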

Anthropic interviews often test how you handle messy data and design resilient systems. You should use ROW_NUMBER() OVER (PARTITION BY employee_id ORDER BY id DESC) to identify the most recent inserted row per employee and filter for row_number = 1. Explain why this works under an auto-incrementing id and how it recovers the true current salary. Discuss how you’d detect or prevent such ETL anomalies in a research environment where data lineage and reproducibility are key.
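Here is a small sketch of the ROW_NUMBER() pattern above. The salary_rows table name and its contents are hypothetical, and the window alias is shortened to rn. It assumes the SQLite build bundled with Python is version 3.25 or later, which is when window functions were added.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE salary_rows (
    id INTEGER PRIMARY KEY AUTOINCREMENT, employee_id INTEGER, salary INTEGER
);  -- hypothetical table where updates were wrongly written as new inserts
INSERT INTO salary_rows (employee_id, salary) VALUES
  (101, 50000), (102, 60000), (101, 55000), (101, 58000), (102, 65000);
""")

query = """
WITH ranked AS (
    SELECT employee_id,
           salary,
           ROW_NUMBER() OVER (
               PARTITION BY employee_id ORDER BY id DESC
           ) AS rn                      -- rn = 1 is the latest insert per employee
    FROM salary_rows
)
SELECT employee_id, salary
FROM ranked
WHERE rn = 1
ORDER BY employee_id;
"""
current_salaries = conn.execute(query).fetchall()
print(current_salaries)
```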

Anthropic values precision in hierarchical logic; this question tests how you think about ranking under ties. You should filter for Engineering, apply DENSE_RANK() over salary in descending order, and select rows where the rank equals 2. You should justify why DENSE_RANK() ensures correctness when multiple employees share the top salary. Relate your answer to order statistics, rank aggregation, and fairness in selection, all of which mirror the structured thinking Anthropic values in model evaluation.

How would you list all neighborhoods that have zero users associated with them?

Anthropic researchers are trained to spot data sparsity and bias early in their analysis, and this question captures that. You should use a LEFT JOIN from neighborhoods to users on the neighborhood key and filter rows where user_id IS NULL. You should note that NOT EXISTS could be more performant for larger datasets. When explaining, connect this logic to identifying missing data, sampling bias, or fairness issues, the kind of reasoning that underpins trustworthy AI systems.

How would you find users who placed fewer than three orders or spent under $500 in total?

Anthropic’s interviewers care about your ability to turn behavioral data into meaningful thresholds. You should group by user_id, compute COUNT(*) AS orders and SUM(amount) AS total_spent, then use HAVING COUNT(*) < 3 OR SUM(amount) < 500 to filter the result. You should talk about how these users might represent low-engagement segments and how you’d validate whether thresholds like “3 orders” or “$500” are statistically meaningful. This is your chance to show both technical accuracy and scientific curiosity, which Anthropic prizes in its research culture.
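The grouping and HAVING filter above can be sketched like this. The orders schema and amounts are assumptions, with one user placed exactly on the boundary of each threshold to show what is and is not included.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE orders (id INTEGER PRIMARY KEY, user_id INTEGER, amount INTEGER);
INSERT INTO orders (user_id, amount) VALUES
  (1, 200), (1, 200), (1, 200),             -- 3 orders, 600 total: excluded
  (2, 450), (2, 450),                       -- 2 orders: included by count
  (3, 100), (3, 100), (3, 100), (3, 100),   -- 400 total: included by spend
  (4, 50);                                  -- included by both conditions
""")

query = """
SELECT user_id,
       COUNT(*)    AS orders,
       SUM(amount) AS total_spent
FROM orders
GROUP BY user_id
HAVING COUNT(*) < 3 OR SUM(amount) < 500
ORDER BY user_id;
"""
low_engagement = conn.execute(query).fetchall()
print(low_engagement)
```

One caveat worth raising in an interview: users with no orders at all never appear in this result, because the query reads only from the orders table. If zero-order users should count as "fewer than three orders", the query would need to start from a users table and LEFT JOIN to orders.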

Tip: Narrate like a scientist. State the goal, list assumptions, choose the simplest data structure that works, and write small, testable helpers. Validate with a tiny example before scaling. Close by noting time and space complexity, plus one reliability improvement you would add in a real codebase.

Research and AI safety questions

This category goes deeper into your ability to design and evaluate research. Questions often explore areas like alignment, interpretability, and fairness, as well as your ability to critique existing approaches. Anthropic places special weight on Anthropic AI safety interview questions, since they reveal how you balance advancing capabilities with reducing risk. These questions are meant to surface whether your philosophy matches the company’s mission of building safe and reliable systems.

How would you design an experiment to evaluate whether a large language model is aligned with human values?

You should start by defining what “alignment” means in measurable terms, such as minimizing harmful outputs or maximizing truthful, helpful responses. You should propose an experimental setup with human evaluation loops, clear success metrics (e.g., agreement rate, refusal accuracy), and model interventions. In an Anthropic interview, discuss why alignment must be evaluated both empirically (via behavior) and philosophically (via normative grounding). You should also connect this to Anthropic’s work on Constitutional AI, showing you understand how principles can shape model behavior.
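To make the success metrics above concrete, here is a minimal sketch of how agreement rate and refusal accuracy could be computed. The definitions are assumptions made for the example, not Anthropic's: refusal accuracy is taken as the share of prompts where the model's refuse-or-comply decision matches a reference label, and agreement rate as the share of items on which two human raters give the same label.

```python
def match_rate(expected, observed):
    """Fraction of positions where two equal-length label lists agree."""
    if len(expected) != len(observed):
        raise ValueError("label lists must have the same length")
    if not expected:
        raise ValueError("label lists must not be empty")
    matches = sum(e == o for e, o in zip(expected, observed))
    return matches / len(expected)


# Hypothetical reference labels: should the model refuse this prompt?
should_refuse = [True, True, False, False, False]
# Hypothetical model behavior on the same prompts.
did_refuse = [True, False, False, False, True]
refusal_accuracy = match_rate(should_refuse, did_refuse)

# Hypothetical labels from two human raters on four model outputs.
rater_a = ["ok", "harmful", "ok", "ok"]
rater_b = ["ok", "harmful", "harmful", "ok"]
agreement_rate = match_rate(rater_a, rater_b)

print(refusal_accuracy, agreement_rate)
```

Raw agreement does not correct for agreement expected by chance, so a chance-corrected statistic such as Cohen's kappa is a natural follow-up to mention.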

How would you test whether a model’s reasoning process is interpretable to humans?

You should propose probing techniques like mechanistic interpretability, feature attribution, or intervention-based causal tracing. Describe how you’d design controlled tasks to isolate reasoning chains, and evaluate interpretability both qualitatively (via visualization) and quantitatively (via prediction robustness). Anthropic looks for candidates who not only apply methods but also critique their epistemic reliability, so explain how interpretability results can be misleading or incomplete. Highlight your awareness that Anthropic’s ultimate goal isn’t just interpretability; it’s controllability and transparency in decision-making systems.

What safeguards would you design to prevent unintended harmful behaviors from an advanced AI system?

You should think holistically; combine technical mitigations (robustness testing, red teaming, reward modeling) with institutional safeguards (deployment gates, auditing pipelines). Explain how you’d measure success not by absence of failure, but by continuous risk reduction and monitoring. At Anthropic, framing this as a socio-technical problem is key; discuss how governance, feedback loops, and external oversight work together. You should show that your mindset aligns with the company’s philosophy of “safety through understanding”, not merely compliance.

How would you measure fairness in a language model’s outputs?

You should begin by defining fairness for the task, for example as equalized performance across demographic or contextual groups, and select appropriate metrics (e.g., demographic parity, disparate impact ratio). You should design datasets that reflect realistic distributions, not just synthetic balance. Discuss tradeoffs between fairness, accuracy, and interpretability, and show how you’d validate improvements after mitigation. In an Anthropic interview, emphasize that fairness is a continuous diagnostic process, not a one-time fix, and link it to broader system reliability.
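As a rough sketch of the two metrics named above, the code below computes a demographic parity difference and a disparate impact ratio from per-group positive-outcome rates. The group names, the binary "acceptable output" label, and the counts are all hypothetical.

```python
from collections import defaultdict


def positive_rates(records):
    """records: iterable of (group, outcome) pairs with outcome in {0, 1}."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        positives[group] += outcome
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_difference(rates):
    """Gap between the highest and lowest group rate; 0 means parity."""
    return max(rates.values()) - min(rates.values())


def disparate_impact_ratio(rates):
    """Lowest group rate divided by the highest; 1 means parity."""
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("no group has any positive outcomes")
    return min(rates.values()) / highest


# Hypothetical evaluation: 1 means the output was judged acceptable.
records = (
    [("group_a", 1)] * 8 + [("group_a", 0)] * 2
    + [("group_b", 1)] * 6 + [("group_b", 0)] * 4
)
rates = positive_rates(records)
parity_gap = demographic_parity_difference(rates)
impact_ratio = disparate_impact_ratio(rates)
print(rates, parity_gap, impact_ratio)
```

With these made-up counts the rates are 0.8 and 0.6, so the parity gap is about 0.2 and the impact ratio about 0.75. Whether those values are acceptable depends on the task and on the sample sizes behind each rate.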

If a model appears safe on benchmarks but behaves unpredictably in the wild, how would you investigate the cause?

You should approach this scientifically: reproduce the failure under controlled conditions, analyze context sensitivity, and inspect latent activation patterns or prompt dependency. Propose building diagnostic datasets that stress-test generalization, and discuss potential causes like data drift, spurious correlations, or weak out-of-distribution calibration. Anthropic interviewers expect you to think like a researcher investigating alignment regressions, not just a debugger, so narrate how you’d reason about the gap between lab safety and real-world deployment.

How would you design an evaluation for long-term reliability in generative AI systems?

You should define reliability as consistent, intended behavior over extended temporal horizons, then propose tests involving multi-turn interactions or simulated deployments. You should consider compounding errors, prompt memory decay, and value drift over repeated queries. Explain how you’d track degradation using longitudinal metrics or automated monitors. In the Anthropic context, highlight how long-term reliability underpins the company’s vision for aligned, steerable systems that remain safe as they scale.
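One simple way to picture the longitudinal tracking described above is a windowed monitor that compares later stretches of an interaction against the first stretch. This is a sketch under stated assumptions: the per-turn scores, the window size, and the max_drop tolerance are hypothetical, and a real monitor would also need to account for noise in the scores.

```python
def windowed_means(scores, window):
    """Mean score for each consecutive, non-overlapping window of turns."""
    if window <= 0:
        raise ValueError("window must be positive")
    return [
        sum(scores[i:i + window]) / window
        for i in range(0, len(scores) - window + 1, window)
    ]


def first_degraded_window(scores, window, max_drop):
    """Index of the first window whose mean falls more than max_drop
    below the first (baseline) window, or None if there is none."""
    means = windowed_means(scores, window)
    if not means:
        return None
    baseline = means[0]
    for index, mean in enumerate(means[1:], start=1):
        if baseline - mean > max_drop:
            return index
    return None


# Hypothetical per-turn pass/fail scores from a 12-turn simulated session.
turn_scores = [1, 1, 1, 1, 1, 1, 0, 1, 1, 0, 0, 1]
means = windowed_means(turn_scores, window=4)
degraded_at = first_degraded_window(turn_scores, window=4, max_drop=0.3)
print(means, degraded_at)
```

Here the window means fall from 1.0 to 0.75 to 0.5, and the third window (index 2) is the first to breach the tolerance.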

How do you balance accelerating AI capabilities with ensuring alignment and safety?

You should acknowledge the tension between innovation and restraint; describe how capability gains must be paired with proportional safety advances. You should propose organizational and technical frameworks that synchronize these tracks (e.g., safety budgets, dual-track research, safety signoff reviews). At Anthropic, interviewers will be listening for your ethical reasoning as much as your technical skill. Show that you value interpretability, evaluation, and iterative oversight as essential to responsible frontier research, not as an afterthought.

Tip: Ground every answer in a measurable definition, a concrete evaluation plan, and a clear failure model. Describe the dataset, the protocol, primary and guardrail metrics, and how you would stress test for distribution shift and adversarial prompts. End with what you would change if the result conflicts with prior literature.

Behavioral and collaboration questions

In addition to technical skill, Anthropic wants to know if you can thrive in a cross-functional, research-driven culture. Behavioral questions test how you’ve worked with engineers, product managers, or fellow researchers in the past. They often focus on handling ambiguity, managing pressure to publish, or navigating differences in research direction. Clear and structured answers help show that you can collaborate effectively while staying aligned with the company’s values.

-

Choose a project centered on model interpretability, safety evaluation, or alignment research, something that showcases depth and reasoning beyond standard ML experiments. You should walk through your research question, hypothesis, and experimental design. Discuss key challenges such as reproducibility, data quality, ethical tradeoffs, or failure modes that emerged in evaluation. Explain how you iterated through these issues, perhaps refining dataset curation, formalizing metrics, or using simulation environments. At Anthropic, interviewers want to see how you apply scientific rigor to ambiguous problems while balancing safety and innovation.

Sample Answer: One of the most meaningful projects I led focused on reducing hallucinations in a large language model used for information retrieval. The goal was to make the model more truthful without degrading its helpfulness. I began by designing a dataset of high-risk prompts, cases where factual correctness was critical, and built a verifier that estimated the model’s uncertainty before generating responses. The biggest challenge was reproducibility because small changes in the fine-tuning data led to volatile performance shifts. To address this, I standardized our evaluation pipeline, version-controlled all data splits, and defined a consistent refusal accuracy metric. Eventually, we achieved a 40 percent reduction in unsafe outputs while maintaining overall response quality. This project taught me that progress in model reliability often comes less from algorithmic novelty and more from disciplined evaluation and data hygiene.

How would you make complex AI research findings more accessible to non-technical audiences?

Frame this around transparency and interpretability in AI communication. You should explain how you distill high-dimensional or abstract concepts, like policy gradients or model alignment, into frameworks that decision-makers, ethicists, or policymakers can understand. Discuss methods like interactive dashboards for interpretability, annotated examples for model explanations, and clear uncertainty communication in reports. You should emphasize visual intuition, reproducibility, and ethical clarity. Anthropic interviewers will look for your ability to bridge rigorous science with public trust, a key trait in research scientists working on frontier models.

Sample Answer: In my previous lab, I helped communicate our interpretability research to policymakers and media partners. Rather than overwhelming them with equations, I focused on storytelling by explaining the model as a system of interacting concept neurons and illustrating key findings through simple visualizations. For example, we replaced saliency maps with annotated examples that showed how attention weights changed across prompts. We also developed an internal dashboard where stakeholders could toggle model parameters and observe behavior changes in real time. This hands-on experience demystified the work and built trust. I believe accessibility in AI communication means meeting people where they are, translating abstract math into human consequences, being transparent about limitations, and clearly articulating uncertainty rather than hiding it.

-

You should focus on strengths that reflect Anthropic’s values, like systematic experimentation, strong literature grounding, and humility in model evaluation. Choose one weakness that shows intellectual honesty without undermining competence (e.g., overanalyzing before implementation or taking too long to validate edge cases). Then, describe how you’ve addressed it by introducing faster prototyping cycles or clearer milestone planning. Tie it back to outcomes such as increased research throughput and collaboration impact. Anthropic values researchers who show self-awareness and continuous scientific improvement.

Sample Answer: My current advisor would likely describe me as methodical, curious, and self-critical, a researcher who values clarity over speed. My main strengths are my ability to structure ambiguous problems, my deep grounding in literature, and my collaborative approach to experiments. However, a weakness I have been actively addressing is my tendency to overanalyze before starting implementation. Early in my PhD, I sometimes spent too long perfecting theoretical framing rather than testing minimal hypotheses. To improve, I started enforcing short “prototype-first” sprints, which led to faster feedback loops and a noticeable increase in publication throughput. I have learned that in research, progress is not about eliminating uncertainty but managing it through systematic iteration and reflection.

-

You should choose a cross-disciplinary project, perhaps where you had to align interpretability goals with engineering timelines or ethical review constraints. Describe how you reframed the discussion using shared objectives like model robustness or public accountability. Mention how you created structured documentation, reproducible experiments, and decision logs to build clarity. Then highlight the results: improved collaboration, a refined evaluation plan, or a better deployment decision. Anthropic wants to see that you can communicate across teams without losing scientific depth or ethical nuance.

Sample Answer: In one collaboration, my team’s interpretability study clashed with engineering priorities. While we wanted deeper analysis of internal representations, the deployment team was under pressure to meet a product deadline. The tension came from different definitions of “done.” I reframed the discussion by identifying a shared goal: improving model reliability metrics without blocking deployment. We agreed to release a smaller, lower-risk version first while continuing our interpretability probes in parallel. To keep alignment, I documented all assumptions and results in concise decision memos that both teams could reference. The compromise worked: we launched on schedule, and our follow-up studies uncovered a latent failure mode that informed the next release. That experience reinforced my belief that good communication is less about persuasion and more about translating goals into common measurable terms.

-

Anchor your answer on Anthropic’s mission of building safe, steerable, and interpretable AI systems. You should connect your experience, whether in ML safety, interpretability, NLP, or alignment, to specific research directions Anthropic pursues (e.g., Constitutional AI, scalable oversight, or mechanistic interpretability). Emphasize that you’re motivated by epistemic responsibility: understanding how and why models behave as they do. Show that your research philosophy aligns with Anthropic’s belief in responsible scaling, open inquiry, and cross-disciplinary collaboration between science, policy, and engineering.

Sample Answer: What draws me most to Anthropic is its philosophy that safety and capability must advance together. I have followed your work on Constitutional AI and mechanistic interpretability for some time, and what stands out is the methodological rigor. Experiments are not just performance-driven but epistemically grounded. My own research has focused on model reliability and evaluation design, and I find Anthropic’s approach deeply aligned with my belief that AI progress must remain transparent, auditable, and human-centered. I want to contribute to a culture that values humility about what we do not yet understand, and that builds tools to make AI systems not only more powerful but also more interpretable and controllable. Joining Anthropic would allow me to work at the frontier of that balance between innovation and responsibility.

Tell me about a time you had to balance research speed with ensuring ethical rigor or safety validation.

You should describe an instance where you had to trade off rapid progress with careful evaluation, perhaps delaying a model release for more red-teaming or interpretability analysis. Explain the guardrails you implemented: peer review, risk checklists, or bias audits. Then quantify both the learning benefit and the safety gain. Anthropic interviewers will be looking for your ability to self-regulate under pressure, showing that you prioritize truth and safety over velocity, a core principle of their research culture.

Sample Answer: While working on a generative text system for a partner organization, we faced pressure to deploy quickly after promising early results. However, our bias audits had not yet been completed, and we discovered that certain prompts produced skewed outputs against minority groups. Although the timeline was tight, I argued for delaying deployment until we could implement mitigation steps: curating adversarial test sets, introducing fairness constraints in the training data, and running red-teaming exercises. This decision postponed the launch by two weeks but led to a 25 percent improvement in fairness scores and greater stakeholder confidence. It taught me that ethical rigor is not a trade-off against innovation but part of producing credible science. A short delay to ensure safety ultimately preserved long-term trust and research integrity.

Describe how you diagnosed and resolved a plateau in model performance or unexpected behavior during training.

Outline your investigative process: hypothesis generation, ablation testing, hyperparameter tuning, and probing for failure modes or spurious correlations. Discuss how you tested alternative architectures, data augmentations, or loss functions, and what insights led to recovery. Quantify improvements where possible, but focus on your methodical reasoning process, not just the fix. Anthropic values researchers who are calm, empirical, and curious when systems deviate, those who treat debugging as scientific discovery, not just engineering.

Sample Answer: In one project on adversarial robustness, our model’s validation accuracy plateaued well below baseline despite multiple architecture tweaks. Instead of making further blind adjustments, I approached the issue like a scientific investigation. I first ran ablations on data augmentations, discovering that a mislabeled subset was skewing gradients. Then, by plotting loss landscapes and inspecting layer activations, I found that gradient saturation was preventing effective learning beyond a certain epoch. I introduced layer normalization adjustments and rebalanced the optimizer’s learning rate schedule, which restored performance and improved robustness by 10 percent. More importantly, I documented each diagnostic step, turning it into an internal failure triage guide for our lab. That experience reinforced my belief that debugging is an act of discovery and an opportunity to learn how models think, not just fix what is broken.

Tip: Tell tight STAR stories that end with a metric and a habit you adopted afterward. Show you can bridge research, engineering, and policy with a shared KPI and crisp docs. When describing conflict, name the decision rule you used and the follow-up that kept partners aligned.

How to prepare for a research scientist role at Anthropic

Preparing for the Anthropic research scientist interview requires balancing research excellence with values-driven reasoning. The best candidates show technical mastery, a deep understanding of AI safety, and an ability to explain their ideas with clarity and conviction. Here’s how to get ready effectively.

Study Anthropic’s Publications and Research Focus

Start by reviewing the Anthropic Research Hub. Read papers on alignment, interpretability, and robustness to understand the company’s scientific priorities and experimental methodology. Look at how Anthropic researchers structure their abstracts, define hypotheses, and communicate safety implications.

Tip: Select one Anthropic paper that aligns with your background, such as “Constitutional AI” or “Scaling Laws and Safety,” and prepare a brief critique or replication idea. This will help you sound precise and thoughtful in your research presentation round.

Strengthen Core Machine Learning and AI Fundamentals

Anthropic expects fluency in ML concepts like optimization, generalization, robustness, and representation learning. Brush up on loss landscapes, adversarial examples, and gradient-based interpretability. Use the Interview Query ML library for practical problem-solving and pair it with LeetCode for implementation drills in Python or PyTorch.

Tip: Don’t just memorize algorithms; explain them intuitively. In Anthropic’s interviews, you’ll often be asked why a method works or how you’d test its reliability under a distribution shift.
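One way to practice the "how would you test reliability under a distribution shift" question is to build a toy case you can reason about end to end. The sketch below is an illustration under stated assumptions, not an Anthropic interview task: the 1-D synthetic data, the midpoint-threshold classifier, and the shift of 1.0 are all hypothetical choices.

```python
import random


def make_data(rng, n_per_class, shift=0.0):
    """Sample 1-D points: class 0 around -1, class 1 around +1, plus an optional shift."""
    data = []
    for label, center in ((0, -1.0), (1, 1.0)):
        for _ in range(n_per_class):
            data.append((rng.gauss(center, 0.5) + shift, label))
    return data


def fit_threshold(train):
    """Place the decision threshold midway between the two class means."""
    means = []
    for label in (0, 1):
        xs = [x for x, y in train if y == label]
        means.append(sum(xs) / len(xs))
    return (means[0] + means[1]) / 2.0


def accuracy(threshold, data):
    """Fraction of points where the rule 'x > threshold' matches the label."""
    correct = sum(1 for x, y in data if int(x > threshold) == y)
    return correct / len(data)


rng = random.Random(0)  # fixed seed so the comparison is repeatable
threshold = fit_threshold(make_data(rng, 500))
in_dist_acc = accuracy(threshold, make_data(rng, 500))
shifted_acc = accuracy(threshold, make_data(rng, 500, shift=1.0))
print(f"in-distribution: {in_dist_acc:.3f}, shifted: {shifted_acc:.3f}")
```

Because the threshold is fit near 0 and the shifted class 0 is centered near 0, roughly half of that class lands on the wrong side. Being able to predict the size of the accuracy drop before running the code is the kind of intuitive explanation this tip is asking for.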

Practice Research Presentations and Communication

You’ll need to present technical material to both researchers and cross-disciplinary teams. Practice summarizing your past work in 10–12 minutes using the structure: Motivation → Approach → Findings → Implications → Future Work.

Tip: Record yourself explaining one experiment as if to a policy stakeholder, not another scientist. Anthropic’s researchers often communicate with ethics and governance teams, so clarity for mixed audiences is a strong differentiator.

Prepare for Coding and Debugging Challenges

Anthropic’s technical rounds are collaborative coding sessions designed to reveal how you reason, not just how you code. Expect to handle data manipulation, model evaluation, or numerical edge-case debugging in Python.

Tip: As you solve problems, verbalize your hypotheses: “This looks like a memory bottleneck issue, so I’ll print intermediate shapes to confirm.” Practicing this habit shows scientific reasoning in action, which is exactly what Anthropic values.
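As an example of the numerical edge-case debugging mentioned above, the sketch below states a hypothesis, confirms it, and then applies a fix. The softmax scenario and the function names are assumptions chosen for illustration; the actual interview problems are not described in this guide.

```python
import math


def naive_softmax(logits):
    """Direct translation of the formula; overflows for large logits."""
    exps = [math.exp(z) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def stable_softmax(logits):
    """Subtract the max logit first; the result is mathematically identical."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


logits = [1000.0, 1000.0]

# Hypothesis: exp() overflows before the division can normalize it.
try:
    naive_softmax(logits)
    overflowed = False
except OverflowError:
    overflowed = True

print(overflowed)              # True
print(stable_softmax(logits))  # [0.5, 0.5]
```

Subtracting the maximum works because softmax is invariant to adding a constant to every logit, so the largest exponent becomes exp(0) = 1 and nothing can overflow.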

Deepen Your Understanding of AI Safety and Responsible Scaling

Anthropic’s identity revolves around safe and interpretable AI. Review key writings such as their Core Views on AI Safety and Responsible Scaling Policy. Understand terms like alignment, scalable oversight, and model steerability, and be ready to articulate your own stance.

Tip: Prepare one personal reflection that links your prior research to Anthropic’s mission. For example: “In my last project on robustness, I realized how model reliability under distributional shift is essential for safety, an area I’d love to expand on at Anthropic.”

Simulate the End-to-End Interview Flow

Run through a mock Anthropic-style loop:

- 1 timed coding round (60 min)

- 1 research presentation (15 min summary + 10 min Q&A)

- 1 system design prompt focused on scaling or safety trade-offs

- 1 mission alignment discussion

Tip: Use Interview Query’s mock interview platform or the AI interview simulator to replicate pressure conditions. Treat each session as a scientific experiment. Afterward, note what you’d iterate on next.

Build Thoughtful STAR Stories for Behavioral Questions

Even research scientists are evaluated on teamwork and resilience. Prepare structured stories that demonstrate ownership, intellectual honesty, and your ability to handle uncertainty.

Tip: End every story with a learning takeaway (“This taught me to validate assumptions earlier” or “This experience made me document experiments more rigorously”). Anthropic interviewers pay close attention to growth mindset and self-reflection.

Ready to Start Your Anthropic Research Scientist Prep?

Succeeding at Anthropic means showing that your research not only advances AI, but does so safely, transparently, and with deep curiosity. To prepare with structure and confidence, start where other top candidates begin:

- Use the AI interview simulator to practice coding and safety reasoning under time constraints.

- Explore our ML and research learning paths to strengthen your fundamentals.

- Read success stories of Interview Query users.

Every Anthropic interview rewards rigor, humility, and clarity of thought. With focused preparation and practice, you’ll be ready to demonstrate not just how you build AI systems, but how you help make them safe for the world.