Anthropic Interview Questions 2025: Process, Values & Prep

Introduction

Over 50,000 professionals applied to Anthropic roles last year, but only a small fraction made it past the interview stage. If you’re preparing for an Anthropic interview, you’re competing with some of the sharpest minds in AI. The company’s reputation for cutting-edge research, ethical rigor, and technical excellence means every interview round counts.

In this guide, you’ll learn what the Anthropic interview process looks like, the most common Anthropic interview questions, and how to prepare for both the technical and values-driven stages. Whether you’re applying as a Software Engineer, ML Researcher, or Product Manager, this article will help you enter your interviews confident, informed, and aligned with Anthropic’s mission to make AI safer for everyone.

Why work at Anthropic?

If you are considering a role at Anthropic, you are looking at one of the few companies where technical excellence meets ethical responsibility. People join not only to work on advanced AI systems but also to contribute to the global effort of keeping artificial intelligence safe, transparent, and beneficial. Before diving into interview preparation, it is worth understanding what makes Anthropic such a distinct and inspiring place to work.

A mission that drives every line of code

Anthropic’s foundation rests on a clear idea: artificial intelligence should be safe, interpretable, and aligned with human values. Every model, experiment, and deployment decision reflects this principle. For engineers and researchers, that means writing code that has both precision and purpose, knowing their work contributes to one of the most important challenges in technology today.

A culture of collaboration and clarity

Inside Anthropic, collaboration is built into daily life. Teams discuss research findings openly, critique each other’s code with care, and approach every project with intellectual humility. The culture encourages learning through dialogue and mutual respect, creating an environment where innovation grows naturally from shared curiosity.

A place to learn from the best

Anthropic’s team includes experts from leading AI research organizations such as OpenAI, DeepMind, and Google Brain. Working here provides constant access to people who are shaping the direction of AI safety and interpretability research. It is a fast-paced but thoughtful environment where ideas move quickly and mentorship is always within reach.

Career growth with real impact

Anthropic offers a rare combination of technical depth and mission-driven growth. Employees develop skills that extend beyond coding or modeling, gaining insight into ethics, governance, and responsible deployment. Every contribution has a measurable effect on how AI evolves, allowing individuals to grow their careers while making a meaningful global impact.

What’s Anthropic’s interview process like?

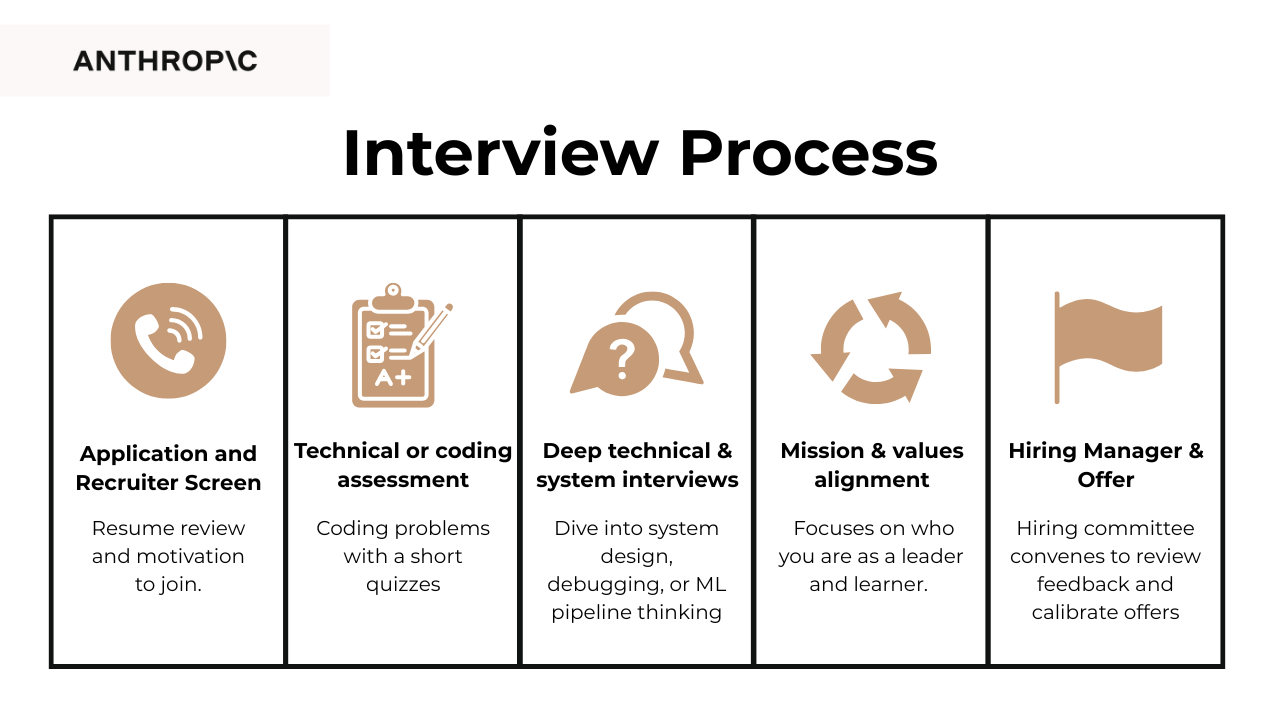

Anthropic’s hiring process balances technical depth with mission alignment. You move from a recruiter screen to a technical assessment, then deep technical and system interviews, followed by a values conversation and final matching. Across stages, interviewers evaluate clarity of reasoning, collaboration, and your ability to build safe, reliable systems at scale.

Recruiter and initial screening

This call establishes fit and context. The recruiter evaluates your background, your motivation for Anthropic, and whether your skills align with current teams. They look for a clear, authentic connection to AI safety, strong communication, and evidence that you thrive in collaborative, research-adjacent environments.

Tip: Prepare a concise “why Anthropic” answer that links your past impact to the company’s mission, and reference one specific Anthropic post or policy you have read.

Technical or coding assessment

This stage tests core problem solving under time constraints. Interviewers evaluate algorithmic reasoning, correctness, and how you structure clean, testable code. They look for engineers who explain trade-offs as they code and who verify edge cases without overcomplicating solutions.

Tip: Think aloud while you implement, write a quick sanity check on inputs and boundaries, and summarize time and space complexity before you submit.

Deep technical and system interviews

These sessions dive into system design, debugging, or ML pipeline thinking, depending on the role. Interviewers evaluate how you decompose complex problems, balance latency, cost, and reliability, and communicate design choices. They look for pragmatic builders who connect architecture to real production constraints and who factor in observability, safety, and reproducibility.

Tip: Structure your answer as requirements, constraints, high-level design, data flows, trade-offs, and failure modes, then propose a staged rollout with metrics.

Mission and values alignment

This conversation focuses on how you reason about responsibility in AI, teamwork, and decision-making under uncertainty. Interviewers evaluate ethical awareness, self-reflection, and how you balance speed with long-term safety. They look for curiosity, humility, and a habit of documenting decisions so teams can learn safely.

Tip: Prepare one story where you chose reliability or user trust over a faster launch and quantify the long-term benefit of that choice.

Final matching and references

This stage aligns you with a specific team and confirms working style through references. Interviewers evaluate mutual fit, collaboration style, and consistency between your interview stories and reference feedback. They look for steady execution, clear ownership, and the ability to partner across research and engineering.

Tip: Share a brief one-pager with recent projects, scope, metrics, and your preferred problem spaces so matching conversations stay focused and concrete.

Most common Anthropic interview questions

Anthropic’s interviews are designed to test more than technical skill. They explore how candidates think, communicate, and align with the company’s mission of building safe and interpretable AI. While the topics vary by role, most interviews follow a predictable pattern centered around three themes: values, technical depth, and system-level thinking. Understanding these categories helps you prepare with focus rather than memorization.

Mission and values alignment

Anthropic places heavy emphasis on alignment between a candidate’s personal motivations and the company’s mission. These interviews often feel like reflective conversations rather than traditional assessments. Expect to discuss how you make ethical decisions, navigate uncertainty, or handle responsibility in complex AI systems. Interviewers look for thoughtfulness, curiosity, and an ability to balance innovation with caution. Candidates who connect their technical work to human impact tend to make a strong impression here.

Describe a software project you worked on. What were some of the biggest challenges you faced?

Choose a project that shows how you think and build in line with Anthropic’s focus on reliability and scalability. Walk through your design choices and problem-solving process rather than just the outcome. Talk about the trade-offs you made under constraints like speed, accuracy, or safety, and explain how you tested for robustness. End by quantifying the improvement in performance or user experience your work created.

Sample answer: I led the redesign of a data ingestion service that processed 5M+ events daily. The main challenge was ensuring reliability during schema updates. I solved it by introducing versioned schemas, canary rollouts, and better observability. We reduced incident alerts by 40% and cut debugging time in half. The project taught me that reliability scales only when safety is built into each deployment step.

-

At Anthropic, you’ll collaborate with researchers, policy experts, and product teams who may not have an engineering background. Show that you know how to translate complexity into clarity. Explain how you document systems, visualize architecture, or use analogies to make your work accessible. Emphasize that good communication helps the entire organization build safer, more transparent AI.

Sample answer: I use clear visuals and short analogies. For example, I once explained our model retraining process as “teaching the system new habits” and mapped dependencies in a one-page flowchart. That helped policy and legal teams grasp how bias could propagate. Making the system transparent improved collaboration and accelerated our safety reviews.

-

Reflect on how others would describe your technical habits and approach to teamwork. Highlight strengths that matter in Anthropic’s environment, such as writing maintainable code, questioning assumptions, or balancing experimentation with caution. When you mention a weakness, focus on how you’ve built awareness and structure to address it. Anthropic values self-reflection, so be specific and honest about what you’ve learned.

Sample answer: My manager would describe me as methodical, collaborative, and cautious with production changes. My strengths are system reliability, structured communication, and mentorship. My growth area is delegation; I tend to over-own. I’ve been working on that by assigning clearer ownership and setting check-in points instead of doing the work myself.

-

Anthropic’s work often involves balancing competing priorities between safety, performance, and research speed. Describe a time when miscommunication created tension or slowed progress. Explain how you identified the misunderstanding, reframed the issue in measurable terms, and built alignment. The goal is to show that you can navigate differences with empathy while keeping the team focused on responsible outcomes.

Sample answer: In one project, data scientists wanted to push an untested model to production, while ops flagged latency risks. I scheduled a short sync with both teams, reframed concerns around measurable risks, and proposed a staged rollout with latency monitoring. The model went live safely, and both teams appreciated the shared framework for decision-making.

-

This is your chance to connect your personal motivation to Anthropic’s mission. Talk about how the company’s focus on safety, interpretability, or model reliability aligns with your values. Explain what kind of problems excite you and how your skills, whether in distributed systems, ML tooling, or backend design, can advance Anthropic’s long-term vision. Be specific about why this mission matters to you.

Sample answer: I applied because Anthropic’s focus on interpretability and responsible scaling matches how I view my work. I want to build systems that are fast, reliable, and ethically sound. My experience in distributed systems and data observability aligns with your need for safe, auditable infrastructure, and I value the company’s balance between innovation and responsibility.

Tell me about a time you balanced rapid feature development with maintaining reliability and managing technical debt.

Anthropic moves quickly but never at the expense of safety. Use an example where you had to deliver under pressure without compromising stability or ethics. Describe how you assessed risk, implemented guardrails such as testing or rollback plans, and communicated trade-offs to the team. Finish by explaining how your decision protected long-term reliability while still enabling innovation.

Sample answer: During a launch sprint, our team had to ship a new feature under tight deadlines. I proposed a dual-track plan: release a minimal, read-only version first, then add write operations after we verified load stability. It let us hit the date while protecting uptime. That decision became our standard pattern for future launches.

Tip: Answer with principle, situation, action, outcome. Tie your decision to user trust or system safety. End with a lesson you would apply at Anthropic.

Technical depth and analytical skill

Technical interviews at Anthropic go beyond standard coding challenges. They focus on how you reason through problems, structure solutions, and communicate trade-offs. For engineering or research roles, this might include analyzing algorithms, optimizing performance, or exploring data structures that scale to real-world applications. The key is to demonstrate depth of understanding rather than surface-level knowledge. Interviewers value clarity of reasoning and the ability to turn abstract concepts into practical, testable ideas.

How would you sample a random row from a 100M+ row table without throttling the database?

Avoid full-table scans with `ORDER BY RANDOM()`. Instead, use indexed ranges or `TABLESAMPLE` if supported by the engine. Explain how you’d mitigate load with read replicas or cached metadata. At Anthropic, connect this to reliability and safety: show that you think about database performance as part of designing fault-tolerant, non-disruptive systems.

How would you write a query to report total distance traveled by each user in descending order?

Use aggregation to sum ride distances per `user_id` and order by the result. In your explanation, mention how you’d exclude invalid or canceled rides, normalize units, and handle missing data. Anthropic engineers are expected to reason about data quality, so highlight how you’d ensure consistency for research models or usage metrics derived from this data.

-

Apply a window function like `ROW_NUMBER()` over daily partitions ordered by timestamp descending, then filter for the first row. Clarify your approach to time zones and boundaries; precision matters when models learn from temporal data. At Anthropic, these details show your commitment to reproducibility and data integrity.

-

Use a `GROUP BY` with a conditional average and a `HAVING` clause to filter departments. Discuss how you’d normalize currencies, remove duplicates, and apply access controls for sensitive pay data. At Anthropic, mention how you protect personally identifiable information and ensure that performance analytics remain privacy-safe.

-

Aggregate deposits by day, then use a rolling window with two preceding rows and the current one. Discuss how you handle missing days and late-arriving events. Relate this to Anthropic’s infrastructure by describing how you’d make data pipelines idempotent and traceable, ensuring model updates are consistent and auditable.
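The rolling-window approach described above can be sketched with Python's built-in `sqlite3` module. The `deposits` table, its column names, and the sample rows are hypothetical. The `ROWS` frame also assumes one row per calendar day, so a real pipeline would fill missing days first (for example, from a calendar table).

```python
import sqlite3

# Hypothetical schema: deposits(user_id, amount, created_at).
# Window functions need SQLite 3.25 or newer, which recent Python builds bundle.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE deposits (user_id INTEGER, amount REAL, created_at TEXT)")
conn.executemany(
    "INSERT INTO deposits VALUES (?, ?, ?)",
    [
        (1, 100.0, "2025-01-01 09:00:00"),
        (2, 50.0, "2025-01-01 17:30:00"),
        (1, 30.0, "2025-01-02 10:00:00"),
        (3, 90.0, "2025-01-03 08:15:00"),
        (2, 60.0, "2025-01-04 12:00:00"),
    ],
)

ROLLING_SQL = """
WITH daily AS (
    -- Step 1: collapse individual deposits into one total per day.
    SELECT DATE(created_at) AS day, SUM(amount) AS total
    FROM deposits
    GROUP BY DATE(created_at)
)
-- Step 2: average the current day with the two preceding rows.
SELECT day,
       AVG(total) OVER (
           ORDER BY day
           ROWS BETWEEN 2 PRECEDING AND CURRENT ROW
       ) AS rolling_avg
FROM daily
ORDER BY day
"""

rolling = conn.execute(ROLLING_SQL).fetchall()
for day, avg in rolling:
    print(day, avg)
```

The first two days average over fewer than three rows; whether to report or suppress those partial windows is worth stating out loud in an interview.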

-

Perform a self-join on user-level purchase data, enforce pair order with `LEAST`/`GREATEST`, and group by pair names. When explaining, talk about distributed joins, deduplication, and approximate counting. In Anthropic’s context, connect this to large-scale analytics that power user modeling or system interpretability while minimizing compute cost.

-

Join on `user_id` and use the standard overlap condition between start and end dates. Reduce to a boolean per user with `EXISTS` or `COUNT(*) > 0`. At Anthropic, show that you think about correctness in time-based logic, which matters when coordinating experiment versions or model deployments.
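As a rough illustration of the overlap condition, the sketch below uses Python's `sqlite3` module with a hypothetical `subscriptions` table. It assumes ISO-formatted date strings and treats both boundaries as inclusive; if an end date is exclusive in your data, the comparisons become strict.

```python
import sqlite3

# Hypothetical schema; ISO date strings compare correctly as text.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE subscriptions (id INTEGER PRIMARY KEY, user_id INTEGER, "
    "start_date TEXT, end_date TEXT)"
)
conn.executemany(
    "INSERT INTO subscriptions (user_id, start_date, end_date) VALUES (?, ?, ?)",
    [
        (1, "2025-01-01", "2025-01-31"),
        (1, "2025-01-15", "2025-02-15"),  # overlaps the row above
        (2, "2025-01-01", "2025-01-31"),
        (2, "2025-02-01", "2025-02-28"),  # starts after the previous one ends
        (3, "2025-03-01", "2025-03-31"),  # only one subscription
    ],
)

OVERLAP_SQL = """
SELECT u.user_id,
       EXISTS (
           SELECT 1
           FROM subscriptions a
           JOIN subscriptions b
             ON a.user_id = b.user_id
            AND a.id < b.id                    -- compare each pair once, never a row with itself
            AND a.start_date <= b.end_date     -- standard interval-overlap test
            AND b.start_date <= a.end_date
           WHERE a.user_id = u.user_id
       ) AS has_overlap
FROM (SELECT DISTINCT user_id FROM subscriptions) AS u
ORDER BY u.user_id
"""

overlaps = conn.execute(OVERLAP_SQL).fetchall()
print(overlaps)
```

Using `EXISTS` lets the engine stop at the first overlapping pair per user instead of counting all of them.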

Tip: Before optimizing, state the baseline approach and its bottleneck. When you improve it, explain the trade you are making and how you will validate correctness and performance.
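One way to apply this tip to the random-row sampling question earlier in this section: the baseline is `ORDER BY RANDOM() LIMIT 1`, whose bottleneck is sorting the whole table. The sketch below swaps in a random probe on an indexed key. The `events` table and `sample_row` helper are hypothetical, and the trade-off is a small bias toward rows that follow gaps in the id sequence.

```python
import random
import sqlite3

# Hypothetical table with an integer primary key and deliberate id gaps.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, payload TEXT)")
conn.executemany(
    "INSERT INTO events (id, payload) VALUES (?, ?)",
    [(i, f"event-{i}") for i in range(1, 1001) if i % 7 != 0],
)


def sample_row(conn, rng):
    """Pick one row via a random probe into the primary-key range."""
    # MIN/MAX on the primary key are cheap index lookups and could be cached.
    lo, hi = conn.execute("SELECT MIN(id), MAX(id) FROM events").fetchone()
    probe = rng.randint(lo, hi)
    # Index seek: take the first row at or after the probe, so landing
    # inside a gap still returns a row.
    return conn.execute(
        "SELECT id, payload FROM events WHERE id >= ? ORDER BY id LIMIT 1",
        (probe,),
    ).fetchone()


row = sample_row(conn, random.Random(0))
print(row)
```

To validate the change, you could compare the distribution of sampled ids against the baseline on a replica and confirm the query plan uses the primary-key index.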

System design and problem-solving

This category evaluates your ability to think holistically about complex systems. Anthropic’s work often involves designing scalable, safety-critical infrastructure for large models, so these discussions test both technical design and strategic foresight. You might be asked to outline a system, explain its architecture, and justify decisions based on safety, reliability, or efficiency. Interviewers want to see if you can balance innovation with robustness, approaching design as both an engineer and a steward of responsible AI.

-

Explain your approach step by step as if you’re integrating sorted data streams in an ML pipeline. Highlight how your linear-time merge supports distributed ingestion without data loss. Discuss memory constraints, backpressure handling, and monitoring for out-of-order inputs. End by clearly stating your time and space complexity.
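A minimal sketch of the linear-time merge, assuming the underlying task is to combine two already-sorted lists into one. The function name `merge_sorted` is illustrative.

```python
from typing import List


def merge_sorted(left: List[int], right: List[int]) -> List[int]:
    """Merge two ascending lists in O(n + m) time and O(n + m) extra space."""
    merged: List[int] = []
    i = j = 0
    # Walk both lists once, always taking the smaller head element.
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:  # "<=" keeps equal items in left-first order
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    # At most one of these still has items; append the remainder as-is.
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged


print(merge_sorted([1, 4, 9], [2, 3, 10, 11]))
```

For true streams, the same two-pointer idea works with iterators, so only the current head of each stream needs to be held in memory.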

-

Begin by identifying key business processes such as orders and returns. Sketch a star schema with facts and conformed dimensions, then explain how you’d enforce data lineage and privacy. In an Anthropic context, describe how you’d build traceable data flows that guarantee reproducibility for training and evaluation pipelines.

-

Create an address history table with effective start and end timestamps. Explain how you’d deduplicate and normalize addresses while preserving historical context. Discuss how you’d build “current address” queries efficiently. Relate this to Anthropic’s need for version control and consistent state tracking across experiments or models.
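The history-table idea can be sketched as follows with Python's `sqlite3` module. The table, column, and function names are hypothetical, and a `NULL` end timestamp is assumed to mean "still current".

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE address_history (
    id              INTEGER PRIMARY KEY,
    customer_id     INTEGER NOT NULL,
    address         TEXT NOT NULL,
    effective_start TEXT NOT NULL,
    effective_end   TEXT            -- NULL means the row is still current
);
-- Partial unique index: at most one current row per customer, and
-- "current address" lookups only touch the open rows.
CREATE UNIQUE INDEX one_current_address
    ON address_history (customer_id) WHERE effective_end IS NULL;
""")


def move(conn, customer_id, new_address, moved_at):
    """Close the open row (if any) and insert the new one in one transaction."""
    with conn:
        conn.execute(
            "UPDATE address_history SET effective_end = ? "
            "WHERE customer_id = ? AND effective_end IS NULL",
            (moved_at, customer_id),
        )
        conn.execute(
            "INSERT INTO address_history (customer_id, address, effective_start) "
            "VALUES (?, ?, ?)",
            (customer_id, new_address, moved_at),
        )


def current_address(conn, customer_id):
    row = conn.execute(
        "SELECT address FROM address_history "
        "WHERE customer_id = ? AND effective_end IS NULL",
        (customer_id,),
    ).fetchone()
    return row[0] if row else None


move(conn, 1, "12 Elm St", "2024-01-01")
move(conn, 1, "9 Oak Ave", "2025-06-01")
print(current_address(conn, 1))
```

Old rows are never overwritten, so the full history stays queryable by date range.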

-

Describe tables for riders, drivers, vehicles, and rides, including ride states and timestamps. Add indexes for hot queries like “active rides near a location.” Explain how you’d partition by geography and time. For Anthropic, discuss reliability—how you’d prevent data corruption, ensure transactional integrity, and design for safe concurrent updates.

-

Define tables for tenants, applications, and API keys with rotation history and encryption. Explain how you’d enforce access control, auditing, and rate limits. At Anthropic, emphasize how this mirrors designing systems that protect sensitive model data and ensure secure access for collaborators and researchers.

-

Describe a linear-time approach using prefix sums. After presenting the algorithm, discuss how this principle extends to load balancing and partitioning in distributed systems. At Anthropic, relate it to fair resource allocation and deterministic computation across shards to maintain reproducibility.
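The question itself is not shown here, so the sketch below assumes a common variant: find the index at which the sum of the elements to the left equals the sum of the elements to the right. The function name `balance_index` is illustrative, and the running total stands in for a stored prefix-sum array.

```python
from typing import List, Optional


def balance_index(values: List[int]) -> Optional[int]:
    """Return the first index whose left sum equals its right sum, else None.

    One pass for the total and one pass for the scan: O(n) time, O(1) extra space.
    """
    total = sum(values)
    left = 0  # running prefix sum of everything before index i
    for i, v in enumerate(values):
        right = total - left - v  # everything after index i
        if left == right:
            return i
        left += v
    return None


print(balance_index([1, 7, 3, 6, 5, 6]))  # left 1+7+3 = 11, right 5+6 = 11
```

The same running-total idea is one way to pick split points when dividing work into shards of roughly equal weight.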

Tip: Draw the happy path first. Mark hotspots, queues, caches, and storage classes. Add retries with jitter, idempotency keys, timeouts, and a simple circuit breaker. Name your shard key and explain why you chose it.
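As a small illustration of "retries with jitter", the sketch below uses capped exponential backoff with a random delay up to the cap (often called full jitter). The helper name `retry_with_jitter` and its parameters are hypothetical. It retries on any exception for brevity; real code would retry only errors known to be transient and pair the retries with an idempotency key so repeated calls are safe.

```python
import random
import time


def retry_with_jitter(op, attempts=5, base=0.1, cap=2.0, sleep=time.sleep, rng=None):
    """Call op() until it succeeds or attempts run out.

    The delay before retry k is drawn uniformly from [0, min(cap, base * 2**k)].
    """
    rng = rng or random.Random()
    for attempt in range(attempts):
        try:
            return op()
        except Exception:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error
            delay = rng.uniform(0, min(cap, base * 2 ** attempt))
            sleep(delay)


# Usage: an operation that fails twice, then succeeds.
calls = {"n": 0}


def flaky():
    calls["n"] += 1
    if calls["n"] < 3:
        raise RuntimeError("transient failure")
    return "ok"


delays = []  # record delays instead of actually sleeping
result = retry_with_jitter(flaky, sleep=delays.append)
print(result, len(delays))
```

Randomizing the delay spreads out retries from many clients so they do not hit a recovering service at the same moment.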

Tips when preparing for an Anthropic interview

Preparing for Anthropic’s interview process requires both technical excellence and a genuine understanding of the company’s mission. Anthropic looks for individuals who can think deeply, code responsibly, and collaborate thoughtfully. The following steps will help you build a preparation plan that mirrors the company’s expectations and values.

Study Anthropic’s mission and research

Start by reading Anthropic’s official research papers and blog posts. Learn about concepts like model interpretability, Constitutional AI, and the Responsible Scaling Policy. Understanding how Anthropic connects research to real-world safety applications helps you speak credibly about its goals during interviews.

Tip: Summarize each research topic in one sentence, then explain how it relates to the product or system level. Being able to simplify complex ideas shows both comprehension and communication skill.

Review your core technical foundations

Anthropic’s interviews test your depth of understanding, not just memorization. Revisit key areas such as data structures, algorithms, probability, and optimization. If you’re in engineering, practice debugging and building small systems that demonstrate sound architectural thinking. For research roles, strengthen your command of ML fundamentals like loss functions, evaluation metrics, and gradient optimization.

Tip: While practicing problems, focus on narrating your reasoning and trade-offs. Anthropic values clarity in how you reach a solution, not just whether you get it right.

Strengthen system design and scalability thinking

System design interviews at Anthropic often involve building large-scale, safety-critical systems for AI applications. Study patterns in distributed architecture, fault tolerance, and monitoring. Understand how to reason about trade-offs between reliability, cost, and performance.

Tip: Practice walking through designs using a structured format: start with requirements, outline components, then discuss scalability and failure recovery. Always end with how you would test and monitor for responsible scaling.

Build familiarity with large language models and AI infrastructure

Anthropic’s work revolves around model development, deployment, and safety. Familiarity with concepts like training pipelines, inference optimization, and model evaluation will help you stand out. You do not need to be a researcher, but understanding how large models operate will allow you to engage thoughtfully in discussions.

Tip: Explore how models like Claude or GPT behave under different prompts. Observe how small adjustments affect tone or factuality; this insight can help you answer product or safety-related questions with confidence.

Develop strong written and verbal communication skills

Anthropic emphasizes written reasoning and clear communication across teams. Engineers and researchers often write design documents, post-mortems, or research summaries in place of long meetings. Practicing structured writing shows that you can document ideas, decisions, and trade-offs clearly.

Tip: Write one-page summaries of a technical topic or project you’ve worked on. Ask a non-technical peer to read it and give feedback—your goal is clarity, not complexity.

Practice clear and structured communication

During interviews, Anthropic pays close attention to how candidates explain their reasoning. You are evaluated on how well you structure your thought process, justify your choices, and summarize insights. Practicing concise explanations helps you show composure under time pressure.

Tip: When solving problems, follow a “think, speak, solve” rhythm. Take a few seconds to organize your approach, talk through the logic step by step, and end by summarizing what you learned.

Conduct mock interviews and seek feedback

Mock interviews replicate the pacing and stress of real conversations. Practice both technical and mission-alignment questions with peers or mentors. Tools like Interview Query’s mock interviews and the AI Interviewer can simulate timed Anthropic sessions and help you refine your responses.

Tip: Record yourself while practicing and review your clarity, filler words, and pacing. This self-feedback loop will make you more composed and confident on interview day.

Reflect on personal motivation and long-term impact

Anthropic’s interviewers value self-awareness and purpose. Reflect on how your past work contributes to building responsible technology and how that connects to Anthropic’s goals. Be prepared to discuss situations where you balanced innovation with accountability.

Tip: Write down two or three experiences where you made decisions that prioritized safety, transparency, or ethical responsibility. These examples make your behavioral answers more compelling and authentic.

Stay current with AI ethics and governance trends

Anthropic operates at the intersection of technology and policy. Understanding broader conversations about AI regulation, bias mitigation, and responsible development will strengthen your perspective during mission-alignment interviews.

Tip: Follow reputable AI ethics sources such as the Partnership on AI, the AI Now Institute, or Anthropic’s blog. Reflect briefly on the one ethical challenge you find most pressing today; this can lead to insightful conversations in the interview.

Build consistency in practice and reflection

Preparation is not just about repetition but about progress tracking. Set a weekly schedule that mixes technical drills, design exercises, and mission reflection. Balance problem-solving with learning and feedback to keep your practice meaningful.

Tip: After every study session, jot down one key improvement point. Tracking growth over time helps you stay focused and motivated throughout your prep journey.

FAQs

What is the average salary across roles at Anthropic?

Most core roles at Anthropic fall under Software Engineering, Research Science, and Product Management, each contributing to the company’s mission of building safe and aligned artificial intelligence systems. Compensation at Anthropic ranks among the highest in the AI industry, reflecting its emphasis on top-tier technical talent and research excellence.

Overall, Anthropic maintains a very high compensation benchmark compared with peer AI labs. Levels.fyi lists software, research, and policy positions with total compensation ranging from US$300,000 to US$635,000, with senior leadership roles occasionally surpassing US$700,000. These numbers reflect Anthropic’s focus on attracting top technical talent to build its next-generation Claude models and AI safety systems.

From recent data, Research Scientists and Senior Software Engineers command the highest pay, often exceeding US$500,000 per year when equity is included. Product Managers also earn strong six-figure packages, particularly those leading AI safety or consumer product initiatives. Below is a salary comparison across key Anthropic positions:

- Software Engineer
  - Base Salary: ~US$200K–US$300K per year
  - Total Compensation: US$255K–US$590K+ (Business Insider, Levels.fyi)
  - Structure: High base and significant equity, with senior engineers often earning over US$500K in total compensation. Packages reward expertise in AI infrastructure, safety systems, and high-impact model deployment.

- Research Scientist (Interpretability / Alignment)
  - Base Salary: ~US$250K–US$350K per year
  - Total Compensation: US$280K–US$600K+ (Chemistry Jobs, Levels.fyi)
  - Structure: Includes strong equity packages and potential bonuses. Senior scientists and research–engineering hybrids often exceed US$500K annually, reflecting Anthropic’s premium for alignment and interpretability research.

- Product Manager (Consumer Growth / Model Safety)
  - Base Salary: ~US$275K–US$305K per year (The Ladders)
  - Total Compensation: US$300K–US$450K+
  - Structure: Combination of high base pay and substantial equity grants. Senior PMs managing large-scale model initiatives or cross-functional product launches earn significantly higher total packages.

Anthropic’s compensation strategy is designed to attract world-class technical talent through a mix of high base salaries and substantial equity ownership. Roles that directly advance AI safety, research innovation, and infrastructure scalability receive the largest rewards, underscoring the company’s focus on long-term alignment and research-driven impact.

What is the interview process like at Anthropic?

Anthropic’s interview process combines technical depth with cultural and mission alignment. You can expect recruiter screenings, coding or system design interviews, and reflective discussions about AI safety and collaboration. Each stage is designed to evaluate how you think, communicate, and connect with the company’s purpose.

How hard is it to get a job at Anthropic?

It is highly competitive. Anthropic attracts candidates from top research institutions and technology companies, so strong technical foundations, clear reasoning, and alignment with its safety mission are essential. Consistent practice and familiarity with the company’s values can significantly improve your chances.

What kinds of behavioral questions does Anthropic ask?

Behavioral questions focus on teamwork, ethics, and problem-solving under uncertainty. Interviewers may ask how you handle disagreements, approach ambiguous decisions, or ensure responsibility in your work. The goal is to understand your values, not just your experience.

Does Anthropic ask about culture fit?

Yes. Culture fit is an important part of the process, often explored through open-ended questions about communication, learning, and accountability. Interviewers want to see if your working style aligns with Anthropic’s collaborative and research-driven environment.

What values does Anthropic look for in candidates?

Anthropic values integrity, humility, curiosity, and a commitment to building AI that benefits people. Candidates who demonstrate awareness of both the technical and ethical dimensions of their work tend to stand out. Showing thoughtfulness and a desire to learn continuously reflects the company’s core spirit.