Anthropic Software Engineer Interview Guide 2025

Introduction

Preparing for an Anthropic software engineer interview means stepping into a role where every line of code has impact. Anthropic has raised billions in funding and is recognized as one of the leading AI safety companies, building models like Claude that are used by millions worldwide. Software engineers here develop infrastructure, scale large models, and design systems that make AI safer and more reliable. It’s no surprise that many candidates begin by searching for Anthropic software engineer interview questions to understand the skills and expectations.

At Anthropic, software engineers do more than code. They contribute to research, support large-scale training systems, and work closely with cross-functional teams to ensure responsible scaling. The culture emphasizes collaboration, transparency, and mission-driven work. Engineers are expected to adopt rigorous practices like safety-first reviews, careful system design, and constant iteration. For this reason, candidates should prepare for values-alignment and culture-fit conversations, not just technical rounds.

This guide gives you a clear roadmap for the entire process so you know exactly what to expect and how to prepare. You will learn how the interview loop works from recruiter screen to live coding, online assessments, system design, and values conversations. We break down common question types, show the patterns Anthropic cares about, and explain how to think out loud, optimize, and make sound trade-offs. You will also get a focused prep plan that covers coding drills, design frameworks, and behavioral stories, along with practice resources you can use right away. By the end, you will have a structured approach that helps you walk into each round confident, organized, and ready to show strong engineering judgment that aligns with Anthropic’s mission.

Why this role at Anthropic?

Anthropic gives software engineers the chance to build core systems that power safe, reliable AI at scale. You will work on high-leverage problems such as distributed training and inference, data and tooling for evaluation, security and privacy controls, and developer platforms that make responsible AI practical for teams. The career path is wide and merit-based. Engineers grow from owning services and features to leading cross-team projects, driving platform roadmaps, and mentoring others. You can deepen as a staff or principal engineer across infrastructure or product-adjacent stacks, move into research engineering, or step into engineering management if you enjoy team building and delivery leadership. The work stays hands-on, the decisions are measurable, and the impact is visible across research and product. If you want to grow in an environment that values clarity, reliability, and responsible scaling, this role rewards strong builders who communicate well and think rigorously about safety.

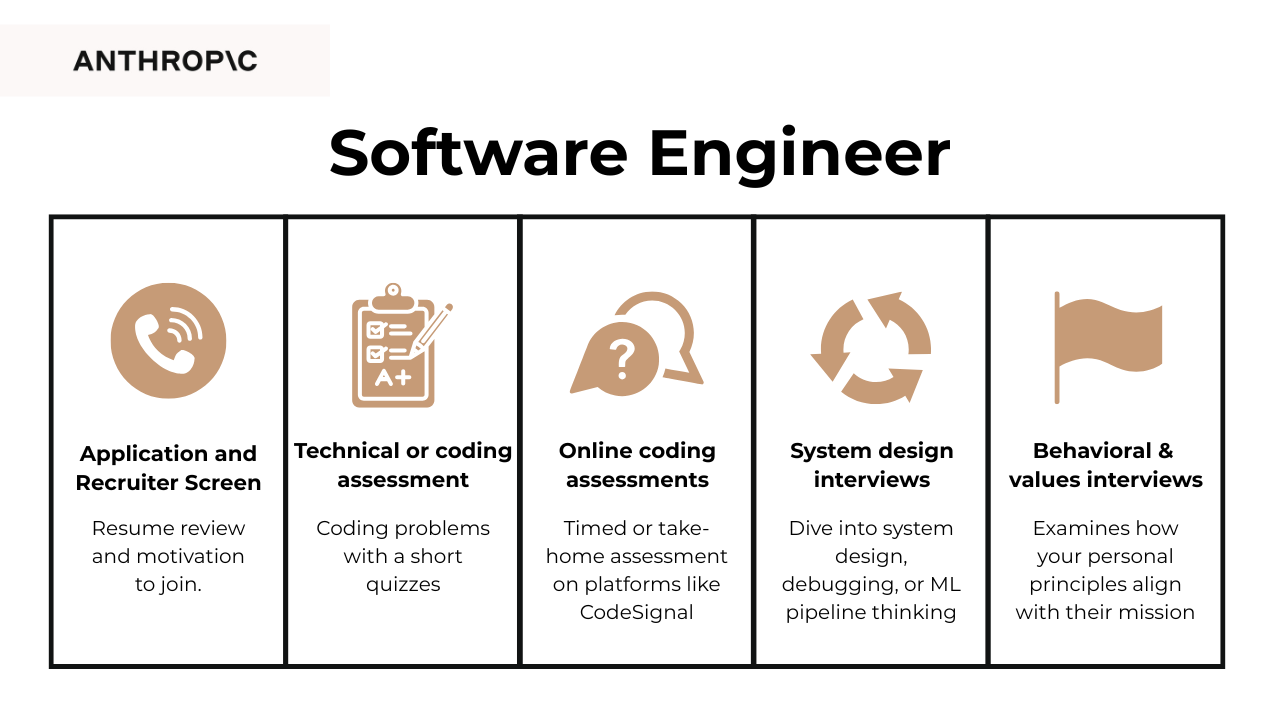

What is the interview process like for a software engineer role at Anthropic?

The interview process for a software engineer role at Anthropic is structured to test your technical depth, system thinking, and alignment with the company’s mission. You will move through several stages, beginning with a recruiter screen and advancing to technical interviews, design challenges, and cultural discussions. Each round helps the team understand not only how you solve problems but also how you collaborate and reason about safety in large-scale AI systems. Many candidates prepare by reviewing Anthropic coding interview questions so they can anticipate the structure and expectations of each stage.

Application & recruiter screen

The process usually begins with a short conversation with a recruiter. This stage focuses on your background, technical foundation, and motivation for joining Anthropic. Recruiters are evaluating whether your experience aligns with Anthropic’s engineering domains, such as infrastructure, model tooling, or safety systems. They also assess how clearly you can explain your work and how your goals connect with Anthropic’s mission.

Tip: Prepare a short “why Anthropic” story that connects your engineering experience to responsible AI development. Referencing Anthropic’s Responsible Scaling Policy shows that you understand what makes their work distinct.

Technical & coding rounds

These interviews are the core of the process and test your ability to write clean, efficient, and maintainable code. Interviewers are looking for problem-solving skills, algorithmic thinking, and clarity of communication as you reason through solutions. Anthropic values engineers who can write code that is both correct and scalable, with strong attention to performance and safety constraints.

Tip: When solving a problem, explain your reasoning as you go. Think aloud about trade-offs between readability and optimization to show that you can balance practicality with performance.

Online coding assessments

Before or alongside live coding rounds, you may receive a timed or take-home assessment on platforms like CodeSignal. These tests measure algorithmic speed, accuracy, and consistency under time pressure. Anthropic is evaluating whether you can produce reliable, well-structured code quickly without compromising on correctness. They also look for familiarity with core data structures and the ability to test your own logic before submission.

Tip: Practice on similar platforms using timed challenges. Aim to solve problems methodically rather than rushing, and verify edge cases before submitting your final code.

System design interviews

System design rounds assess your ability to think at scale. You might be asked to design scalable APIs, build storage for large datasets, or ensure reliability in distributed systems. Anthropic uses these rounds to evaluate how well you decompose complex problems, balance trade-offs, and reason about performance, cost, and safety. The ideal candidate can connect design choices to real-world production implications and communicate their thinking clearly.

Tip: Use frameworks such as “requirements → constraints → design → trade-offs → scalability → failure points.” Always mention how you would ensure reliability, safety, and interpretability in the system you design.

Behavioral & values interviews

The final step of the process examines how you align with Anthropic’s mission and engineering culture. Interviewers want to see how you collaborate, take ownership, and handle decisions under uncertainty. They are evaluating your ability to balance speed with reliability and to recognize when long-term safety should take priority over short-term delivery. The ideal candidate demonstrates integrity, humility, and curiosity about responsible AI.

Tip: Share stories that show how you have made thoughtful trade-offs between quality and delivery or how you’ve improved safety or reliability in past systems. Authentic examples resonate more than rehearsed answers.

What questions are asked in an Anthropic software engineer interview?

Anthropic’s interview loop is designed to test whether you can solve problems efficiently, design scalable systems, and collaborate in a mission-driven environment. The questions span several categories, each targeting a different skill set that matters for building safe and reliable AI.

Coding and technical questions

This category focuses on your ability to write clean, optimized solutions under time pressure. Expect themes around arrays, hashmaps, recursion, and dynamic programming, with some sessions done live on a whiteboard or pair-programming platform. Many candidates practice with Anthropic coding interview questions to build familiarity with the types of challenges that appear, and often turn to LeetCode-style problems, since the difficulty and style are comparable to what Anthropic emphasizes.

How would you sample a random row from a 100M+ row table without throttling the database?

Prefer an indexed-id jump (draw a random value between the minimum and maximum id, then read the first row at or above it) rather than `ORDER BY RANDOM()`, which must read every row and compute a random value for each. You should discuss fallbacks if the first range is empty, using a second pass starting from the minimum. On engines that support it, such as PostgreSQL, you can consider `TABLESAMPLE SYSTEM` with a small rate plus `LIMIT 1`. In an Anthropic software engineer interview, tie this to production safety by mentioning throttling controls, read replicas, and sampling that preserves privacy constraints.

How would you write a query to report total distance traveled by each user in descending order?

Join rides to users, sum ride distance per `user_id`, and order by the aggregate descending. You should guard against nulls and unit inconsistencies, and decide whether to exclude canceled rides. In a production context, pre-aggregate into a daily fact table to reduce query latency and support dashboards.
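The first two answers can be tried locally with Python's built-in `sqlite3` module. This is a minimal sketch, not a production implementation: the `users` and `rides` tables, their columns, and the `'canceled'` status value are hypothetical. `sample_random_ride` performs the indexed-id jump with two cheap primary-key lookups; note that rows following a large gap in ids are picked more often, so the draw is fast but not perfectly uniform. `TOTAL_DISTANCE_SQL` uses a `LEFT JOIN` so users with no completed rides still appear with a total of 0.

```python
import random
import sqlite3

def make_demo_db() -> sqlite3.Connection:
    """Build a tiny in-memory database (hypothetical schema and data)."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT);
        CREATE TABLE rides (
            id INTEGER PRIMARY KEY,
            user_id INTEGER REFERENCES users(id),
            distance REAL,          -- assumed already normalized to one unit
            status TEXT
        );
        INSERT INTO users VALUES (1, 'ana'), (2, 'bo'), (3, 'cy');
        INSERT INTO rides VALUES
            (10, 1, 5.0, 'completed'),
            (11, 1, 2.5, 'completed'),
            (15, 2, 9.0, 'completed'),
            (20, 2, NULL, 'completed'),  -- missing distance is ignored by SUM
            (21, 3, 4.0, 'canceled');
    """)
    return conn

def sample_random_ride(conn: sqlite3.Connection, rng=random):
    """Indexed-id jump: two primary-key lookups instead of ORDER BY RANDOM()."""
    lo, hi = conn.execute("SELECT MIN(id), MAX(id) FROM rides").fetchone()
    if lo is None:
        return None  # empty table
    target = rng.randint(lo, hi)
    row = conn.execute(
        "SELECT * FROM rides WHERE id >= ? ORDER BY id LIMIT 1", (target,)
    ).fetchone()
    if row is None:
        # Fallback second pass from the minimum; only reachable if rows at or
        # above `target` were deleted between the two queries.
        row = conn.execute("SELECT * FROM rides ORDER BY id LIMIT 1").fetchone()
    return row

TOTAL_DISTANCE_SQL = """
SELECT u.id AS user_id,
       u.name,
       COALESCE(SUM(r.distance), 0) AS total_distance
FROM users AS u
LEFT JOIN rides AS r
       ON r.user_id = u.id
      AND r.status <> 'canceled'    -- exclude canceled rides
GROUP BY u.id, u.name
ORDER BY total_distance DESC, u.id  -- id breaks ties deterministically
"""

conn = make_demo_db()
print(sample_random_ride(conn))
print(conn.execute(TOTAL_DISTANCE_SQL).fetchall())
# [(2, 'bo', 9.0), (1, 'ana', 7.5), (3, 'cy', 0)]
```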

How would you write a query to return the most recent record for each day?

Use a window like `ROW_NUMBER() OVER (PARTITION BY CAST(created_at AS DATE) ORDER BY created_at DESC)` and filter to row 1. You should be explicit about time zones and the day boundary to avoid off-by-one errors. In production, add an index on `(created_at DESC)` and consider materializing daily rollups.
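A minimal sketch of the window-function approach, run through Python's `sqlite3` (window functions need SQLite 3.25 or newer, which current Python builds bundle). The `transactions` table and its columns are hypothetical, and `DATE(created_at)` stands in for `CAST(created_at AS DATE)`, which SQLite does not treat as a date conversion. Timestamps are assumed to be stored as UTC ISO-8601 strings.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE transactions (id INTEGER PRIMARY KEY, amount REAL, created_at TEXT);
    CREATE INDEX idx_tx_created_at ON transactions (created_at DESC);
    INSERT INTO transactions VALUES
        (1, 10.0, '2024-01-01 09:00:00'),
        (2, 25.0, '2024-01-01 23:59:59'),
        (3, 40.0, '2024-01-02 00:00:00'),  -- just past midnight UTC: belongs to Jan 2
        (4,  5.0, '2024-01-02 12:30:00');
""")

LATEST_PER_DAY_SQL = """
WITH ranked AS (
    SELECT id, amount, created_at,
           ROW_NUMBER() OVER (
               PARTITION BY DATE(created_at)       -- truncate the timestamp to a day
               ORDER BY created_at DESC, id DESC   -- id makes ties deterministic
           ) AS rn
    FROM transactions
)
SELECT DATE(created_at) AS day, id, amount
FROM ranked
WHERE rn = 1
ORDER BY day
"""

for row in conn.execute(LATEST_PER_DAY_SQL):
    print(row)
# ('2024-01-01', 2, 25.0)
# ('2024-01-02', 4, 5.0)
```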

How would you find the departments with the highest share of employees earning over $100K, counting only departments with at least 10 employees?

Join employees to departments, group by department, compute `AVG(CASE WHEN salary > 100000 THEN 1 ELSE 0 END)` as the rate, and apply `HAVING COUNT(*) >= 10`. Order by the rate descending and break ties deterministically. Call out data-quality checks (currency normalization, duplicates) and access controls for sensitive pay data.
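A minimal sketch in Python's `sqlite3` with hypothetical `employees` and `departments` tables and made-up salaries. It uses `1.0`/`0.0` in the `CASE` so the average stays fractional on engines that average integers with integer arithmetic (SQL Server, for example); SQLite and PostgreSQL return a fractional average either way.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE departments (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute(
    "CREATE TABLE employees (id INTEGER PRIMARY KEY, department_id INTEGER, salary INTEGER)"
)
conn.executemany(
    "INSERT INTO departments VALUES (?, ?)",
    [(1, "Infra"), (2, "Research"), (3, "Tiny")],
)
# Hypothetical data: Infra 4/10 above 100K, Research 7/10, Tiny has only 2 people.
staff = (
    [(1, 150_000)] * 4 + [(1, 90_000)] * 6
    + [(2, 120_000)] * 7 + [(2, 80_000)] * 3
    + [(3, 200_000)] * 2
)
conn.executemany("INSERT INTO employees (department_id, salary) VALUES (?, ?)", staff)

RATE_SQL = """
SELECT d.name,
       AVG(CASE WHEN e.salary > 100000 THEN 1.0 ELSE 0.0 END) AS pct_over_100k,
       COUNT(*) AS headcount
FROM employees AS e
JOIN departments AS d ON d.id = e.department_id
GROUP BY d.id, d.name
HAVING COUNT(*) >= 10                  -- drop departments too small to compare
ORDER BY pct_over_100k DESC, d.name    -- name breaks ties deterministically
"""

for name, rate, headcount in conn.execute(RATE_SQL):
    print(f"{name}: {rate:.0%} of {headcount}")
# Research: 70% of 10
# Infra: 40% of 10
```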

How would you calculate a rolling three-day average of daily deposits?

Aggregate positive transaction values per calendar day, then apply a window `AVG(daily_deposits) OVER (ORDER BY day ROWS BETWEEN 2 PRECEDING AND CURRENT ROW)`. Decide how to treat missing days: because `ROWS` counts rows rather than days, fill gaps with zeros via a date spine if you need a true three-day window. In production, ensure idempotent backfills and verify that late events land in the correct day bucket.
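A minimal sketch of the date-spine approach in Python's `sqlite3`, with a hypothetical `transactions` table where deposits are the positive `amount` values. A recursive CTE generates every calendar day between the first and last transaction, so a day with no deposits contributes a 0 instead of silently stretching the window across four or more days. The first two rows average over fewer than three days; filter them out if you only want full windows.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE transactions (id INTEGER PRIMARY KEY, amount REAL, created_at TEXT);
    INSERT INTO transactions (amount, created_at) VALUES
        ( 100.0, '2024-03-01 10:00:00'),
        ( -40.0, '2024-03-01 11:00:00'),   -- withdrawal: not a deposit
        ( 200.0, '2024-03-02 09:00:00'),
        ( 300.0, '2024-03-04 15:00:00');   -- no deposits at all on 2024-03-03
""")

ROLLING_SQL = """
WITH RECURSIVE
bounds AS (
    SELECT MIN(DATE(created_at)) AS first_day, MAX(DATE(created_at)) AS last_day
    FROM transactions
),
spine(day) AS (                      -- one row per calendar day, gaps included
    SELECT first_day FROM bounds
    UNION ALL
    SELECT DATE(day, '+1 day') FROM spine, bounds WHERE day < last_day
),
daily AS (                           -- positive values only = deposits
    SELECT DATE(created_at) AS day, SUM(amount) AS deposits
    FROM transactions
    WHERE amount > 0
    GROUP BY DATE(created_at)
)
SELECT s.day,
       AVG(COALESCE(d.deposits, 0)) OVER (
           ORDER BY s.day ROWS BETWEEN 2 PRECEDING AND CURRENT ROW
       ) AS rolling_3d_avg
FROM spine AS s
LEFT JOIN daily AS d ON d.day = s.day
ORDER BY s.day
"""

for day, avg in conn.execute(ROLLING_SQL):
    print(day, round(avg, 2))
# 2024-03-01 100.0
# 2024-03-02 150.0
# 2024-03-03 100.0
# 2024-03-04 166.67
```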

How would you find the product pairs most frequently bought by the same users?

Self-join distinct user–product sets to form unique pairs, enforce ordering with `LEAST`/`GREATEST`, join to product names, then group and count. Order by count descending and break ties alphabetically on names. At Anthropic scale, discuss partitioning by `user_id`, approximate counting, and guardrails against test orders or synthetic traffic.
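A minimal sketch in Python's `sqlite3` with hypothetical `transactions` and `products` tables. Instead of `LEAST`/`GREATEST`, the join condition `a.product_id < b.product_id` produces the same canonical ordering: each unordered pair appears exactly once and self-pairs are excluded. Deduplicating to distinct user-product rows first means a user who buys the same item twice is still counted once per pair.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE transactions (id INTEGER PRIMARY KEY, user_id INTEGER, product_id INTEGER);
    INSERT INTO products VALUES (1, 'apple'), (2, 'banana'), (3, 'cherry');
    INSERT INTO transactions (user_id, product_id) VALUES
        (100, 1), (100, 2), (100, 2),   -- repeat purchase must not double count
        (200, 1), (200, 2), (200, 3),
        (300, 2), (300, 3);
""")

PAIRS_SQL = """
WITH user_products AS (              -- distinct (user, product) sets
    SELECT DISTINCT user_id, product_id FROM transactions
)
SELECT p1.name AS product_a,
       p2.name AS product_b,
       COUNT(*) AS users_who_bought_both
FROM user_products AS a
JOIN user_products AS b
  ON a.user_id = b.user_id
 AND a.product_id < b.product_id     -- canonical (smaller, larger) ordering
JOIN products AS p1 ON p1.id = a.product_id
JOIN products AS p2 ON p2.id = b.product_id
GROUP BY a.product_id, b.product_id, p1.name, p2.name
ORDER BY users_who_bought_both DESC, product_a, product_b
LIMIT 5
"""

for row in conn.execute(PAIRS_SQL):
    print(row)
# ('apple', 'banana', 2)
# ('banana', 'cherry', 2)
# ('apple', 'cherry', 1)
```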

How would you determine whether each user has overlapping subscriptions?

Self-join on `user_id` where both rows have an `end_date`, apply the canonical overlap predicate `a.start_date <= b.end_date AND b.start_date <= a.end_date`, and exclude self-matches. Reduce to a per-user boolean via `EXISTS` or `COUNT(*) > 0`. In production, index `(user_id, start_date, end_date)` and standardize time zones and inclusivity rules to avoid boundary bugs.
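A minimal sketch in Python's `sqlite3`, assuming a hypothetical `subscriptions` table with a surrogate `id` (used to exclude self-matches) and ISO-8601 date strings, which compare correctly as text. The predicate is inclusive on both ends, so a subscription that starts on the day another ends counts as overlapping; switch to `<` if your rules treat end dates as exclusive.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE subscriptions (
        id INTEGER PRIMARY KEY, user_id INTEGER, start_date TEXT, end_date TEXT
    );
    CREATE INDEX idx_sub_user_dates ON subscriptions (user_id, start_date, end_date);
    INSERT INTO subscriptions (user_id, start_date, end_date) VALUES
        (1, '2024-01-01', '2024-01-31'),
        (1, '2024-01-15', '2024-02-15'),   -- overlaps the row above
        (2, '2024-01-01', '2024-01-31'),
        (2, '2024-02-01', '2024-02-28'),   -- starts the next day: no overlap
        (3, '2024-01-01', '2024-03-31');
""")

OVERLAP_SQL = """
SELECT u.user_id,
       EXISTS (
           SELECT 1
           FROM subscriptions AS a
           JOIN subscriptions AS b
             ON a.user_id = b.user_id
            AND a.id <> b.id                 -- exclude self-matches
           WHERE a.user_id = u.user_id
             AND a.end_date IS NOT NULL
             AND b.end_date IS NOT NULL
             AND a.start_date <= b.end_date  -- canonical overlap predicate,
             AND b.start_date <= a.end_date  -- inclusive on both ends
       ) AS has_overlap
FROM (SELECT DISTINCT user_id FROM subscriptions) AS u
ORDER BY u.user_id
"""

print(conn.execute(OVERLAP_SQL).fetchall())
# [(1, 1), (2, 0), (3, 0)]
```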

Tip: Before coding, list data structure, complexity target, and test set. While coding, narrate invariants. After coding, prove correctness on a tricky case and suggest a small memory or time optimization you would try next.

System design questions

System design rounds shift the spotlight to architectural thinking. You may be asked to propose scalable backends for AI workloads, outline storage solutions for large datasets, or balance reliability against performance in distributed systems. These interviews check whether you can zoom out, break problems into components, and articulate trade-offs clearly. Both junior and senior engineers face them, but senior candidates should be ready for deeper explorations of scalability and leadership in design choices.

How would you merge two sorted lists into a single sorted list?

Frame this as a building block for streaming systems that merge sorted shards. Explain how you would implement a linear-time merge and extend it to k-way merges with a min-heap for large fan-in. Discuss memory constraints, backpressure, and out-of-order corrections in distributed pipelines. Close by stating the time and space complexity and how you would monitor correctness at scale.
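A minimal Python sketch of both pieces: a two-pointer merge for two sorted lists and a lazy k-way merge over a min-heap. The standard library's `heapq.merge` already implements the k-way case; the explicit version here shows the mechanics an interviewer is likely to probe. Because `merge_k` is a generator that reads from a shard only after emitting that shard's current head, it naturally applies backpressure to upstream producers.

```python
import heapq
from typing import Iterable, Iterator, List, Sequence, TypeVar

T = TypeVar("T")
_SENTINEL = object()  # marks an exhausted iterator

def merge_two(a: Sequence[T], b: Sequence[T]) -> List[T]:
    """Two-pointer merge: O(len(a) + len(b)) time and output space."""
    i = j = 0
    out: List[T] = []
    while i < len(a) and j < len(b):
        if a[i] <= b[j]:      # `<=` keeps the merge stable: ties come from `a` first
            out.append(a[i])
            i += 1
        else:
            out.append(b[j])
            j += 1
    out.extend(a[i:])         # at most one of these tails is non-empty
    out.extend(b[j:])
    return out

def merge_k(streams: Iterable[Iterable[T]]) -> Iterator[T]:
    """k-way merge: O(n log k) time, O(k) extra memory for the heap."""
    heap = []
    for idx, stream in enumerate(streams):
        it = iter(stream)
        head = next(it, _SENTINEL)
        if head is not _SENTINEL:
            # idx breaks ties so iterators themselves are never compared
            heap.append((head, idx, it))
    heapq.heapify(heap)
    while heap:
        value, idx, it = heap[0]
        yield value
        nxt = next(it, _SENTINEL)
        if nxt is _SENTINEL:
            heapq.heappop(heap)                      # shard exhausted
        else:
            heapq.heapreplace(heap, (nxt, idx, it))  # pop and push in one step

print(merge_two([1, 3, 5], [2, 3, 6]))                 # [1, 2, 3, 3, 5, 6]
print(list(merge_k([[1, 4, 7], [2, 5], [], [0, 9]])))  # [0, 1, 2, 4, 5, 7, 9]
```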

How would you design a data warehouse for a retail business?

Define business processes first, then propose facts for orders, inventory, and returns with conformed dimensions for customer, product, store, and date. Describe partitioning, slowly changing dimensions, and late-arriving facts. Explain how you would support privacy, lineage, and reproducible ML training sets. Outline batch vs incremental loads and the metrics layer that guarantees one definition of revenue.
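A minimal DDL sketch of such a star schema, run through Python's `sqlite3`. All table names, column names, and rows are hypothetical. `dim_customer` is a type 2 slowly changing dimension: each change to a customer creates a new row with its own surrogate key and validity window, and each fact row points at the version that was current when the order happened, so historical reports keep their original attribution.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Conformed dimensions shared by every fact table.
    CREATE TABLE dim_date (date_key INTEGER PRIMARY KEY, full_date TEXT, month TEXT);
    CREATE TABLE dim_product (product_key INTEGER PRIMARY KEY, sku TEXT, category TEXT);
    CREATE TABLE dim_store (store_key INTEGER PRIMARY KEY, store_code TEXT, region TEXT);
    -- SCD type 2: one row per version of a customer, with a validity window.
    CREATE TABLE dim_customer (
        customer_key INTEGER PRIMARY KEY,   -- surrogate key, one per version
        customer_id  TEXT NOT NULL,         -- natural (business) key
        city         TEXT,
        valid_from   TEXT NOT NULL,
        valid_to     TEXT,                  -- NULL = current version
        is_current   INTEGER NOT NULL
    );
    -- Order-line fact at the grain of one product per order.
    CREATE TABLE fact_order_line (
        order_id     TEXT,
        date_key     INTEGER REFERENCES dim_date(date_key),
        customer_key INTEGER REFERENCES dim_customer(customer_key),
        product_key  INTEGER REFERENCES dim_product(product_key),
        store_key    INTEGER REFERENCES dim_store(store_key),
        quantity     INTEGER,
        revenue      REAL
    );
    INSERT INTO dim_date VALUES (20240110, '2024-01-10', '2024-01'),
                                (20240220, '2024-02-20', '2024-02');
    INSERT INTO dim_product VALUES (1, 'SKU-1', 'books');
    INSERT INTO dim_store VALUES (1, 'WEB', 'online');
    -- Customer C1 moved from Austin to Denver on 2024-02-01: two versions.
    INSERT INTO dim_customer VALUES
        (1, 'C1', 'Austin', '2023-01-01', '2024-02-01', 0),
        (2, 'C1', 'Denver', '2024-02-01', NULL, 1);
    -- Each fact row references the customer version current at order time.
    INSERT INTO fact_order_line VALUES
        ('O1', 20240110, 1, 1, 1, 2, 30.0),
        ('O2', 20240220, 2, 1, 1, 1, 15.0);
""")

REVENUE_BY_CITY_SQL = """
SELECT c.city, SUM(f.revenue) AS revenue
FROM fact_order_line AS f
JOIN dim_customer AS c ON c.customer_key = f.customer_key
GROUP BY c.city
ORDER BY c.city
"""

print(conn.execute(REVENUE_BY_CITY_SQL).fetchall())
# [('Austin', 30.0), ('Denver', 15.0)]
```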

How would you design a database that tracks where people have lived over time?

Propose an address history table keyed by person and address with effective start and end timestamps. Explain how you would handle deduplication, normalization, and geocoding while preserving past occupants. Cover read patterns like “current address” queries with indexes on validity windows. Describe safeguards for compliance and deletion while maintaining referential integrity.
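A minimal sketch in Python's `sqlite3`, with hypothetical table and column names. Validity windows are half-open (`valid_from` inclusive, `valid_to` exclusive), so a move date belongs to exactly one address, and a `NULL` `valid_to` marks the current row. Dates are ISO-8601 strings, which compare correctly as text.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE addresses (
        id INTEGER PRIMARY KEY,
        normalized_text TEXT UNIQUE NOT NULL   -- one row per address after normalization
    );
    CREATE TABLE address_history (
        person_id  INTEGER NOT NULL,
        address_id INTEGER NOT NULL REFERENCES addresses(id),
        valid_from TEXT NOT NULL,              -- inclusive
        valid_to   TEXT,                       -- exclusive; NULL = current address
        PRIMARY KEY (person_id, valid_from)    -- serves per-person validity lookups
    );
    CREATE INDEX idx_hist_address ON address_history (address_id, valid_from);

    INSERT INTO addresses VALUES (1, '12 OAK ST, SPRINGFIELD'), (2, '9 ELM AVE, SPRINGFIELD');
    INSERT INTO address_history VALUES
        (7, 1, '2019-05-01', '2023-08-15'),
        (7, 2, '2023-08-15', NULL),
        (8, 1, '2023-09-01', NULL);            -- new occupant; person 7's tenure is kept
""")

AS_OF_SQL = """
SELECT a.normalized_text
FROM address_history AS h
JOIN addresses AS a ON a.id = h.address_id
WHERE h.person_id = :person
  AND h.valid_from <= :as_of
  AND (h.valid_to IS NULL OR :as_of < h.valid_to)
"""

def address_as_of(person_id: int, as_of: str):
    """Address a person lived at on a given date; pass today's date for 'current'."""
    row = conn.execute(AS_OF_SQL, {"person": person_id, "as_of": as_of}).fetchone()
    return row[0] if row else None

def occupants(address_id: int):
    """Everyone who has ever lived at an address, oldest tenure first."""
    return [pid for (pid,) in conn.execute(
        "SELECT person_id FROM address_history WHERE address_id = ? ORDER BY valid_from",
        (address_id,),
    )]

print(address_as_of(7, "2020-01-01"))  # 12 OAK ST, SPRINGFIELD
print(address_as_of(7, "2023-08-15"))  # 9 ELM AVE, SPRINGFIELD (move day)
print(occupants(1))                    # [7, 8]
```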

How would you design the database schema for a ride-sharing app?

Model riders, drivers, vehicles, and rides with states from requested to completed. Add geospatial points for pickup and drop-off and a pricing breakdown with promotions and fees. Explain indexes for hot paths like “active rides near a location” and how you would partition by city and time. Discuss consistency needs for payment capture and how you would audit disputes.
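A minimal sketch of the ride lifecycle as an explicit state machine in Python. The exact state names and allowed transitions are assumptions; the point is that invalid moves (for example, canceling a ride that has already completed) are rejected in one place, and an append-only history doubles as an audit trail for disputes. In the database, the same check is commonly enforced with a conditional update such as `UPDATE rides SET state = ? WHERE id = ? AND state = ?`, so two concurrent writers cannot both advance the same ride.

```python
from enum import Enum
from typing import Dict, List, Set

class RideState(Enum):
    REQUESTED = "requested"
    ACCEPTED = "accepted"
    IN_PROGRESS = "in_progress"
    COMPLETED = "completed"
    CANCELED = "canceled"

# Hypothetical transition table; terminal states allow no further moves.
TRANSITIONS: Dict[RideState, Set[RideState]] = {
    RideState.REQUESTED: {RideState.ACCEPTED, RideState.CANCELED},
    RideState.ACCEPTED: {RideState.IN_PROGRESS, RideState.CANCELED},
    RideState.IN_PROGRESS: {RideState.COMPLETED},
    RideState.COMPLETED: set(),
    RideState.CANCELED: set(),
}

class InvalidTransition(Exception):
    pass

def advance(history: List[RideState], target: RideState) -> None:
    """Validate and append a transition; history[-1] is the current state."""
    current = history[-1]
    if target not in TRANSITIONS[current]:
        raise InvalidTransition(f"{current.value} -> {target.value} is not allowed")
    history.append(target)

ride = [RideState.REQUESTED]
advance(ride, RideState.ACCEPTED)
advance(ride, RideState.IN_PROGRESS)
advance(ride, RideState.COMPLETED)
print([s.value for s in ride])  # ['requested', 'accepted', 'in_progress', 'completed']
```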

How would you design a schema for issuing and managing API keys on a multi-tenant platform?

Define tenants, applications, API keys, scopes, and key rotation history with strong encryption at rest. Explain rate limiting, revocation, and audit logs that support incident response. Describe how you would isolate noisy tenants and ensure idempotency for payment webhooks. Cover migration and rotation strategies that avoid downtime and key leakage.
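One piece of this design, per-tenant rate limiting, can be sketched as a token bucket in Python. This is an illustrative, single-process, non-thread-safe version with hypothetical names; a real deployment would keep bucket state in a shared store, and the sketch does not cover key rotation or revocation. Giving every tenant its own bucket is what isolates noisy tenants: one tenant exhausting its burst does not consume anyone else's budget.

```python
import time
from typing import Callable, Dict, Tuple

class TenantRateLimiter:
    """Token bucket per tenant: `capacity` sets the burst, `refill_per_sec` the sustained rate."""

    def __init__(self, capacity: float, refill_per_sec: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.refill_per_sec = refill_per_sec
        self.clock = clock  # injectable so tests can control time
        self._buckets: Dict[str, Tuple[float, float]] = {}  # tenant -> (tokens, last_refill)

    def allow(self, tenant_id: str, cost: float = 1.0) -> bool:
        now = self.clock()
        tokens, last = self._buckets.get(tenant_id, (self.capacity, now))
        # Refill in proportion to elapsed time, capped at the bucket size.
        tokens = min(self.capacity, tokens + (now - last) * self.refill_per_sec)
        allowed = tokens >= cost
        if allowed:
            tokens -= cost
        self._buckets[tenant_id] = (tokens, now)
        return allowed

fake_now = [0.0]
limiter = TenantRateLimiter(capacity=2, refill_per_sec=1.0, clock=lambda: fake_now[0])
print([limiter.allow("noisy") for _ in range(3)])  # [True, True, False]
print(limiter.allow("quiet"))                      # True: separate bucket
fake_now[0] = 1.0
print(limiter.allow("noisy"))                      # True: one token refilled
```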

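How would you find the index in a list where the sum of the elements to its left equals the sum of the elements to its right?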

Present a linear-time solution with a total sum scan and a running left sum. Explain how this primitive supports partitioning decisions in sharded services and load balancing. Discuss streaming variants for large inputs that do not fit in memory. State time and space complexity and how you would test edge cases like negatives or repeated values.
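A minimal Python sketch, assuming the question asks for the first index whose left-side sum equals its right-side sum, with the element itself excluded from both sides (the common "pivot index" formulation). The total is computed once; the right sum at each step is `total - left - x`, so no prefix-sum array is needed. For inputs too large for memory, the same two passes work over any re-readable stream, since only `total` and `left` are kept.

```python
from typing import Sequence

def pivot_index(nums: Sequence[int]) -> int:
    """First i with sum(nums[:i]) == sum(nums[i+1:]), or -1. O(n) time, O(1) extra space."""
    total = sum(nums)                 # pass 1: total sum
    left = 0
    for i, x in enumerate(nums):      # pass 2: running left sum
        if left == total - left - x:  # right sum = total minus left part minus nums[i]
            return i
        left += x
    return -1

print(pivot_index([1, 7, 3, 6, 5, 6]))  # 3  (1 + 7 + 3 == 5 + 6)
print(pivot_index([2, 1, -1]))          # 0  (0 == 1 + -1)
print(pivot_index([1, 2, 3]))           # -1
```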

Tip: Draw the request path first. Call out hotspots, backpressure points, and data TTLs. Add retries with jitter, idempotency keys, and circuit breakers. State your sharding key, partition strategy, and what you would page on.
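The retry and idempotency-key advice can be sketched in Python as exponential backoff with "full jitter" (each wait is drawn uniformly between zero and the current backoff cap). This is a minimal illustration, assuming a hypothetical `TransientError` for retryable failures and a callable that accepts an idempotency key; it does not implement a circuit breaker. Reusing one key across attempts is what lets the server deduplicate a write that succeeded but whose response was lost.

```python
import random
import time
import uuid
from typing import Callable, Optional, TypeVar

T = TypeVar("T")

class TransientError(Exception):
    """Hypothetical marker for failures that are safe to retry (timeouts, 503s)."""

def retry_with_jitter(
    call: Callable[[str], T],
    max_attempts: int = 5,
    base_delay: float = 0.1,
    max_delay: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
    rng: Optional[random.Random] = None,
) -> T:
    """Call `call(key)` until it succeeds, retrying TransientError with backoff."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    rng = rng or random.Random()
    key = str(uuid.uuid4())  # one idempotency key shared by every attempt
    for attempt in range(max_attempts):
        try:
            return call(key)
        except TransientError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            cap = min(max_delay, base_delay * 2 ** attempt)
            sleep(rng.uniform(0, cap))  # full jitter spreads out synchronized retries
    raise RuntimeError("unreachable")  # the loop always returns or raises

# Usage: a flaky call that fails twice, then succeeds.
seen_keys = []
def flaky(key: str) -> str:
    seen_keys.append(key)
    if len(seen_keys) < 3:
        raise TransientError("temporarily unavailable")
    return "ok"

print(retry_with_jitter(flaky, sleep=lambda s: None))  # ok
print(len(set(seen_keys)))                             # 1
```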

Behavioral and culture fit questions

Beyond technical expertise, Anthropic prioritizes collaboration and mission alignment. Behavioral questions probe how you’ve handled team dynamics, delivered under pressure, or made trade-offs between speed and reliability. They often touch on how you embody values like transparency, ownership, and long-term safety. Clear STAR-method answers show interviewers that you can contribute not just to codebases but also to a culture that values responsible AI.

Describe a software project you worked on. What were some of the biggest challenges you faced?

Pick a project that highlights your engineering rigor—perhaps building a backend service, optimizing data pipelines, or contributing to an ML infrastructure tool. Walk through the problem statement, technical stack, and design decisions you made. Discuss specific challenges such as scaling, concurrency, or data consistency, and explain how you overcame them. End by quantifying the impact on reliability, performance, or developer productivity.

Sample answer: In my previous role, I helped build a distributed data processing pipeline that handled millions of daily events. The main challenge was ensuring consistency between real-time and batch layers while keeping latency below one second. I worked with the data engineering team to refactor the message queue, introducing backpressure handling and a monitoring dashboard to detect lag early. This required deep debugging across multiple services and coordination between infrastructure and analytics teams. After deployment, data accuracy improved by 20% and alert response times dropped by half, which directly increased trust in our internal metrics.

How do you explain complex technical work to non-technical stakeholders?

Focus on clear documentation, intuitive dashboards, and reproducible demos. Explain how you simplify concepts by visualizing architecture or using real-world analogies. Mention how you collaborate with product, safety, or research teams to translate technical constraints into practical implications. Show that you value communication as much as code quality.

Sample answer: I believe the key is framing complexity through purpose rather than mechanics. On one project, I built an interactive dashboard that visualized API dependencies as simple flows between services, which made it easier for product managers to understand latency bottlenecks. Instead of diving into thread pools and RPC calls, I explained it in terms of “wait time” and “handoffs.” I also added scenario-based documentation so that anyone could reproduce test results without setup confusion. This approach made technical reviews faster and helped non-engineers feel comfortable contributing ideas about reliability and user impact.

What would your current manager say are your strengths and weaknesses?

Highlight strengths like writing maintainable code, designing scalable systems, and collaborating cross-functionally. Choose one real but manageable weakness, such as over-refactoring or underestimating documentation time, and describe how you’ve improved through structured reviews or clearer planning. Reflect on how your mindset aligns with Anthropic’s emphasis on rigor, clarity, and safety in engineering.

Sample answer: My manager would describe my engineering style as methodical and collaborative. My main strengths are writing clean, well-documented code, proactively mentoring teammates, and designing systems with long-term maintainability in mind. I also take initiative in proposing small design improvements before they turn into major refactors. My weakness used to be over-investing in optimization before validating user impact, but I’ve learned to apply data-driven prioritization during planning reviews. This balance between speed and rigor has made me more aligned with teams that value safety and thoughtful execution, like Anthropic.

Tell me about a time stakeholders misunderstood a technical trade-off. How did you build alignment?

Use an example where technical trade-offs were misunderstood—say, balancing model accuracy with latency or rollout risk. Explain how you reframed the issue in terms of measurable impact or user outcomes. Describe the steps you took to build alignment and what changed afterward. Emphasize how you value empathy and collaboration when resolving tension.

Sample answer: When I worked on an ML API integration, there was disagreement between the research and product teams about acceptable latency. Researchers wanted to keep complex models, while product wanted faster responses for users. I realized we were talking past each other—so I built a simple benchmark dashboard that plotted model accuracy against latency. Seeing the trade-offs visually helped everyone agree on an acceptable target and a staged rollout plan. That experience reinforced how structured communication and empathy can turn conflict into productive collaboration.

Why did you apply to Anthropic?

Anchor your answer on Anthropic’s mission of building safe, interpretable, and reliable AI systems. Discuss how your technical background—whether in distributed systems, ML tooling, or backend infrastructure—can help scale this mission. Show that you’re drawn to solving hard problems responsibly and contributing to systems that prioritize safety as a first-class design constraint.

Sample answer: I applied to Anthropic because it combines cutting-edge engineering with a clear sense of responsibility. I’ve worked on scalable infrastructure and ML tools before, but I’ve always been interested in how to make AI systems interpretable and safe in production. Anthropic’s work on Constitutional AI and model transparency aligns perfectly with that goal. I’m looking for a role where I can design reliable, high-performance systems while contributing to safety-first principles at scale. My mix of backend expertise, systems thinking, and collaborative mindset makes this a natural fit.

Tell me about a time you balanced rapid feature development with maintaining reliability and technical debt.

Describe a situation where speed and stability were in tension, such as shipping a feature under tight deadlines. Walk through how you assessed risk, defined guardrails (testing, rollback plans, monitoring), and made trade-offs explicit to stakeholders. End with how you kept quality high while still delivering impact.

Sample answer: At my last company, we had to launch a new data ingestion feature in less than two weeks for a client pilot. The initial approach risked skipping key validation checks, which could cause downstream data corruption. I proposed a lightweight schema validation service that ran asynchronously to flag issues without blocking ingestion. This allowed us to ship on time while containing risk through detailed logs and rollback triggers. The pilot succeeded, and the same service later became part of our permanent data quality framework—proving that speed and reliability can coexist when you design with guardrails.

Tip: Keep one story each for a reliability incident, a cross-team alignment effort, and a technical-debt paydown. For each, name the risk, the guardrail you added, the metric that moved, and the doc you left behind so the fix persisted.

How to prepare for a software engineer role at Anthropic

Anthropic expects software engineers to combine strong coding fundamentals, thoughtful system design, and alignment with its mission of safe, interpretable AI. Preparing well means building both technical mastery and the ability to reason through trade-offs that prioritize safety and reliability.

Study the role and culture

Start by learning how Anthropic defines responsible AI development. Reading Anthropic's Core Views on AI Safety and its Responsible Scaling Policy, and exploring the Anthropic research hub, will help you connect system design choices to safety principles. Understanding this philosophy is key to answering culture and values questions confidently.

Tip: Summarize each document in your own words and think about how you would reflect those principles in engineering trade-offs like latency vs. safety or performance vs. transparency.

Strengthen algorithms and data structures

Most technical interviews emphasize fundamental computer science knowledge. Expect problems involving arrays, hashmaps, recursion, and dynamic programming. Practicing Anthropic Leetcode interview questions helps you build the right mix of speed and clarity.

Tip: Focus on explaining why you’re choosing a specific data structure and how it impacts time and space complexity. Anthropic values clear reasoning as much as correctness.

Practice system design with an AI focus

System design questions test how you build scalable, reliable systems under real-world constraints. At Anthropic, this often includes designing architectures that support large models, distributed workloads, or evaluation pipelines.

Tip: In every design, explain how you would ensure reliability and safety at scale—mention fault tolerance, data validation, and monitoring for model performance or bias.

Use online coding platforms to simulate real conditions

Anthropic often uses timed assessments on platforms like CodeSignal. Reviewing Anthropic CodeSignal interview questions helps you practice accuracy and structure under time pressure.

Tip: Recreate test conditions by setting a timer and submitting your solutions without looking at references. Focus on writing readable, tested code rather than rushing to the final output.

Learn to think out loud and optimize

During live coding rounds, how you explain your approach is as important as the solution itself. Interviewers evaluate how clearly you can reason, debug, and improve your code.

Tip: Practice narrating your steps during problem-solving sessions. When you find a working solution, discuss optimizations out loud to show iterative thinking and awareness of trade-offs.

Build familiarity with large-scale AI systems

Even if you’re not a research engineer, knowing how large language models are trained and deployed gives you a huge advantage. Learn the basics of inference optimization, distributed training, data handling, and safety evaluation.

Tip: Read Anthropic’s research posts or watch technical talks about Claude’s evolution. Practice summarizing them for a non-technical audience to demonstrate understanding and communication skill.

Master written communication

Anthropic values written communication as a core engineering skill. You’ll often explain designs or propose improvements through documentation instead of meetings.

Tip: Write one-page design briefs or post-mortems summarizing technical choices and trade-offs. This habit mirrors Anthropic’s culture of clarity and evidence-based reasoning.

Prepare behavioral and collaboration stories

Behavioral interviews test how you work across teams, especially under ambiguity or high stakes. Prepare STAR stories that highlight how you improved reliability, solved scaling issues, or handled safety-critical bugs.

Tip: End each story with a metric, such as improved latency, reduced errors, or better collaboration outcomes, to show tangible impact.

Mock interviews and iterative feedback

Practicing with peers or mentors helps you build confidence and communication rhythm. You can simulate Anthropic-style sessions through Interview Query’s mock interviews or test yourself using the AI Interviewer.

Tip: Record yourself while practicing. Review your tone, pacing, and how clearly you describe trade-offs or reasoning steps.

Stay current on AI ethics and safety trends

Anthropic values awareness of broader discussions around AI regulation and responsible development. Following the work of organizations like OpenAI, DeepMind, and the Partnership on AI helps you speak fluently about industry shifts.

Tip: Read one research article or policy update weekly and jot down a short summary. Use these insights to enrich your answers about mission alignment or industry awareness.

Ready to Start Your Anthropic Engineering Prep?

Preparing for Anthropic software engineer interview questions means going beyond algorithms. The strongest candidates show mastery of coding fundamentals, clear system design thinking, and a thoughtful approach to collaboration and AI safety. Interviews are designed to see not only if you can solve problems quickly but also if you can reason about trade-offs and build responsibly at scale.

If you’re getting ready for this process, practice with resources tailored to engineers. The Anthropic company page on Interview Query offers role-specific insights, while the Interview Query question bank lets you drill into coding and design challenges. To sharpen your skills under pressure, schedule a mock interview or test yourself with the AI Interviewer. With consistent practice, you’ll be ready to handle both Anthropic coding interview questions and values-driven discussions with confidence.