Revolut Data Scientist Interview Guide (2025 Update)

Introduction

Joining as a Revolut data scientist, you’ll play a central role in shaping one of the world’s fastest-growing fintech platforms. You’ll be responsible for building robust experimentation pipelines to validate product decisions, forecasting financial or risk metrics that directly inform business strategy, and deploying scalable models into production. Collaboration is key—you’ll work closely with product managers, engineers, and fellow data scientists to deliver insights that drive measurable results. Whether you’re optimizing fraud detection algorithms or A/B testing new onboarding flows, your impact will be immediate and significant. With millions of daily users and vast real-time datasets, every model you ship at Revolut influences user behavior at scale.

Role Overview & Culture

As a data scientist Revolut, you’ll also find yourself immersed in a culture that prioritizes intellectual rigor and ownership. Revolut’s values—Think Deeper, Never Settle, and the belief in lean, autonomous squads—mean that you’re not just running queries or tweaking models. You’re expected to challenge assumptions, experiment with bold ideas, and fully own your roadmap. Squads move fast and act like mini-startups, giving you both the responsibility and the creative freedom to shape solutions end-to-end. In this flat and fast environment, learning is accelerated and performance is rewarded with influence. It’s a place where smart people move quickly—and meaningfully.

Why This Role at Revolut?

At Revolut, data scientists are expected to own projects from ideation to production, with real autonomy and access to massive data infrastructure. The scope isn’t limited to building models—you’ll define the problem, test your hypotheses, measure ROI, and deploy production-ready solutions. The business moves fast, and your insights can shape product direction in real time. Plus, with meaningful equity, your work doesn’t just generate value—it helps you capture it.

If you’re preparing for the Revolut data scientist interview, here’s exactly what you need to know—from the process to the questions to how you should structure your answers.

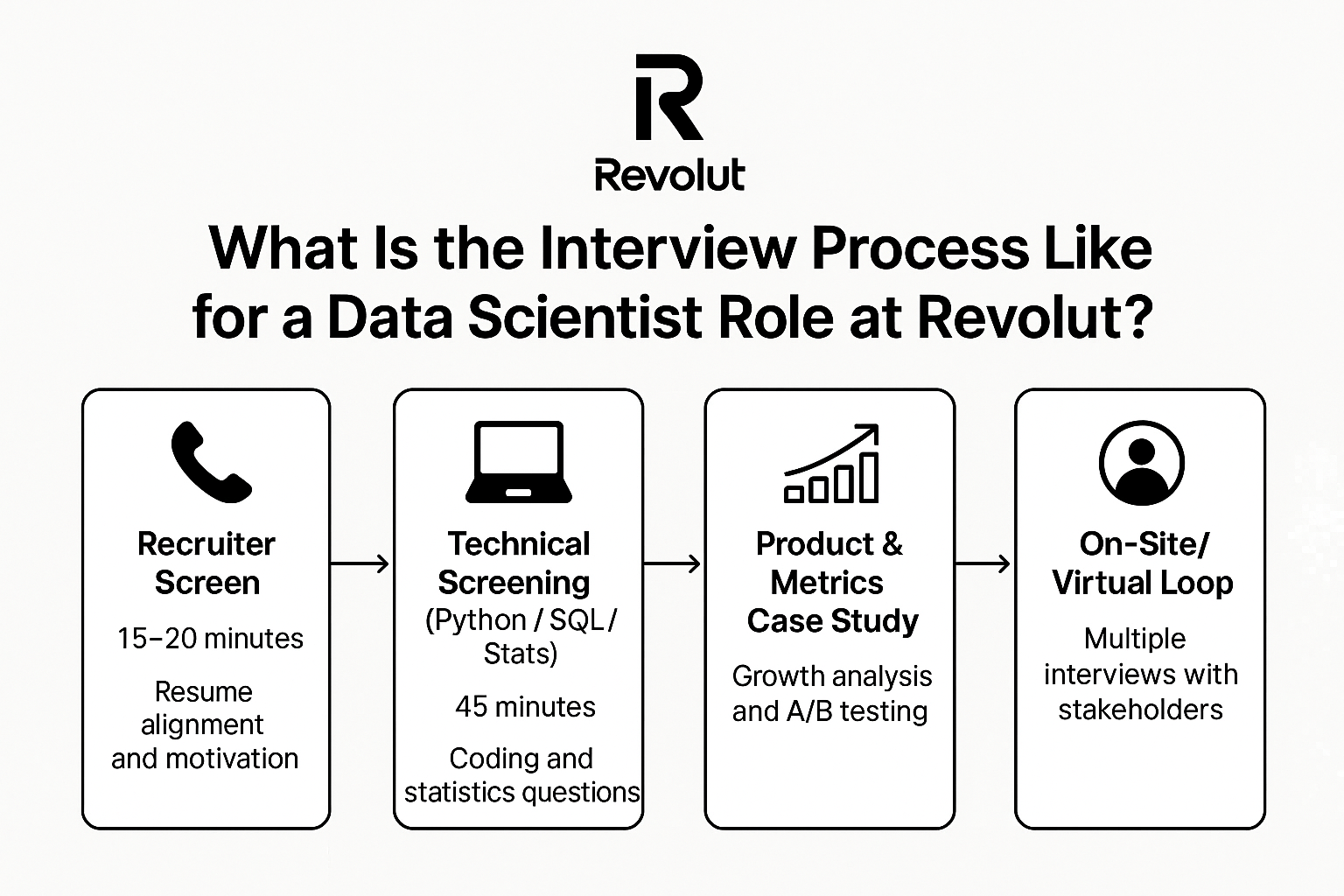

What Is the Interview Process Like for a Data Scientist Role at Revolut?

While the process can vary slightly by domain—risk, credit, computer vision—the Revolut data scientist interview typically follows a consistent and rigorous structure designed to assess both technical depth and product sense.

Recruiter Screen

he process kicks off with a 15–20 minute call focused on resume alignment, motivation for joining Revolut, and your availability. Expect light behavioral questions and a chance to discuss your interest in the role and the company’s mission.

Technical Screening (Python / SQL / Stats)

This 45-minute live session tests hands-on coding skills with real-world Pandas problems and SQL joins. You’ll also answer probability and statistics questions that simulate business scenarios. This is where the second use of revolut data scientist interview becomes especially relevant—your problem-solving style and communication matter as much as correctness.

Product & Metrics Case Study

You’ll walk through a growth funnel analysis or design an A/B test. The goal is to evaluate your ability to interpret product metrics, formulate hypotheses, and structure experiments that drive decisions.

On-Site / Virtual Loop

The final round includes multiple back-to-back interviews. You’ll dive deep into machine learning projects you’ve built, answer behavioral questions tied to Revolut’s values, and engage in a stakeholder round that simulates cross-functional collaboration. Here, you’ll again demonstrate your readiness for the Revolut data scientist role under pressure and ambiguity.

Revolut commits to giving feedback within 24 hours post-loop. Final decisions are subject to a hiring committee review and sign-off from senior leaders.

Candidates interviewing for senior roles should expect an additional panel on system design for ML infrastructure, plus scenario-based questions that assess team leadership, influence, and long-term vision.

What Questions Are Asked in a Revolut Data Scientist Interview?

The Revolut data scientist interview is known for testing both analytical depth and business intuition, often under tight time constraints. You can expect a mix of coding, statistics, experimentation, and product-sense questions that reflect the company’s fast-moving, data-first culture.

Coding & SQL Questions

Expect practical data manipulation tasks—from cohort churn analysis to tricky ETL edge cases involving NULLs, duplicates, or timezones. Window functions are a favorite, and Revolut values a brute-first, optimize-later approach that mirrors real-world engineering.

1. Write a query to get the current salary for each employee.

This query retrieves the most recent salary entry for each employee by selecting the row with the maximum id for each unique combination of first_name and last_name, assuming id increases with each new entry. It joins the original employees table with this subquery to return the latest salary for each employee.

Write a query to count users who made additional purchases after a main transaction. Start by joining the transaction table to itself using a time window condition. Then group by user and filter using HAVING COUNT(*) > 1. It’s similar to real-world churn analysis queries you’d face in fast-moving product teams.

3. Calculate the first touch attribution for each user_id that converted.

Calculate the first touch attribution channel for each customer journey. Use window functions to rank events by timestamp and filter for rank = 1. Always check for null or malformed timestamps. This question mirrors product analytics tracking challenges at Revolut.

Select the top 3 departments with at least ten employees by average salary. A classic grouping and filtering question that often requires subqueries or WITH clauses. You may also need to handle ties or apply rounding logic. This mirrors the kind of top-N cohort queries used in internal dashboards.

This query calculates the average number of right swipes for users in an A/B test (feed_change) who reached at least 10, 50, and 100 swipes. For each swipe threshold, it filters users who have swiped that many times, then computes the average number of right swipes among their first N swipes grouped by feed variant. This allows comparison of swipe behavior across different feed ranking algorithms at various user engagement levels.

Statistics & Experimentation Questions

You’ll be tested on your ability to run sound A/B tests, interpret p-values, and explain trade-offs between Frequentist and Bayesian methods.

6. Determine the mean and variance of 2X−Y for independent normal variables X∼N(3,4) and Y∼N(1,4).

Use linear transformation properties of normal distributions: mean and variance scale predictably. Calculate expected value and variance algebraically. Good test of probabilistic reasoning. Applies well to real-world model combination variance.

Derive using probability density functions or simulate numerically. Recognize the distribution of order statistics. Useful for understanding uncertainty in summary stats. Sharpens intuition for rare event bounds.

Determine if survey responses are random or biased using hypothesis testing. Walk through defining your null hypothesis, choosing the appropriate test (e.g. Chi-square or t-test), and checking assumptions. You should also interpret the p-value in context. This reflects the kind of validity checks Revolut expects when evaluating behavioral experiments.

9. Design an A/B test to measure the impact of a new pricing feature on conversion.

Start with hypotheses and define the metric (e.g., conversion rate). Calculate the required sample size using power analysis and determine test duration. Discuss common pitfalls: peeking, imbalance, multiple comparisons. Very reflective of Revolut’s experiment-heavy culture.

10. Compare the advantages of Bayesian vs. Frequentist A/B testing.

Cover key differences: priors, interpretation, handling low-sample results. Give real-world use cases where Bayesian is preferred (e.g., fast iteration, small sample sizes). Expect to discuss tradeoffs. Important for aligning with Revolut’s fast-decision environment.

Machine-Learning & Model Evaluation Questions

Revolut loves applied ML—think fraud detection features, time-based decay, and handling concept drift in production. Be ready to compare evaluation metrics (ROC, precision-recall) and explain model results to non-technical stakeholders in a way that drives decisions.

11. Build a model to bid on a new unseen keyword using a dataset of keywords and their bid prices.

Walk through data preprocessing, one-hot encoding, and regression techniques. Address sparse categories and potential leakage. Tie model outputs to business outcomes. Shows end-to-end ML deployment thinking.

12. Determine if 1 million Seattle ride trips suffice to build an accurate ETA prediction model.

Frame the problem using bias-variance tradeoff and expected feature diversity. Discuss sampling, label accuracy, and geographic coverage. Emphasize data quality over quantity. Tests scoping and evaluation reasoning.

13. Choose between ROC-AUC and precision-recall for fraud detection.

Explain class imbalance and its impact on metrics. Highlight PR curve relevance when false positives are costly. Discuss when to tune thresholds. Key question for Revolut’s fraud ML domain.

14. Explain concept drift and how to monitor it in a deployed model

Define drift types (covariate, prior, concept), and monitoring tools like KL divergence or PSI. Propose retraining strategies. Connect to Revolut’s dynamic product environment. Crucial for production ML stability.

15. How would you explain model output to a product manager or stakeholder?

Use tools like SHAP, partial dependence plots, or simple rule approximations. Avoid math jargon—focus on directionality and intuition. Add context around impact. Measures stakeholder empathy and communication.

Product & Business Impact Questions

These questions test whether you can tie your analysis to real-world outcomes—like quantifying the revenue uplift from a model’s lift or choosing a North-Star metric for a new feature. Clarity and prioritization matter more than jargon.

Break down metrics like active users, power users, engagement frequency. Consider confounders like seasonality or user type shifts. Form hypotheses and structure root-cause analysis. Strong case for metric literacy.

Look for sampling bias, experiment setup flaws, or confounding variables. Recommend data collection improvements. Common in transportation or marketplace product metrics. Tests skepticism and experiment fluency.

18. Define a North-Star metric for a new Revolut feature like crypto staking.

Choose from retention, user activity, or revenue impact depending on goal. Justify tradeoffs and data sources. Tests metric creativity. Important for aligning models with product direction.

19. Assess the success of a fraud detection model from a business standpoint.

Discuss not just accuracy but false positive cost, user experience, and fraud prevention value. Suggest metrics like avoided loss. Good fusion of ML and business framing. Essential for applied roles at Revolut.

20. Prioritize two competing product experiments—one with high variance, one with high MDE.

Frame risk, sample size, and business value. Suggest running A/B with control overlap if resources are limited. Measures decision-making under constraints. Sharpens intuition for tradeoff thinking.

Behavioral & Culture-Fit Questions

You’ll need crisp STAR-format stories that align with Revolut’s values: ownership, speed, and measurable impact. Focus on moments where you took initiative, made tradeoffs under pressure, or learned fast from failure.

21. Describe a time you took full ownership of a failing project and turned it around.

Use STAR format: set up the challenge, your ownership, how you executed, and the outcome. Highlight speed and stakeholder buy-in. Strong match for Revolut’s Never Settle value.

22. Tell me about a time you made a decision without perfect data.

Explain how you framed the risk, moved forward, and measured impact post-decision. Show comfort with ambiguity. Taps into Revolut’s bias toward action.

23. Give an example of a time you shipped something fast with high impact.

Detail time constraints, your approach, and what outcome you achieved. Show tradeoffs made to optimize for speed. Strong culture-fit test.

24. Talk about a time when you challenged a team’s direction with data.

Describe your analysis, how you presented it, and what changed. Emphasize clarity, not ego. Emphasize values of Think Deeper and Stronger Together.

25. Share a moment when you failed and what you learned.

Choose a real example, take accountability, and focus on what changed next time. Avoid vague platitudes. Demonstrates growth mindset.

How to Prepare for a Data Scientist Role at Revolut

Technical, behavioral, and analytical skills are critical in proving yourself as an efficient data scientist to Revolut. Here is a rough guideline on how to prepare for the role:

Master Timed SQL/Python Drills

Simulate 30–45 minute live sessions using real datasets—focus on joins, filtering logic, and Pandas manipulation. Speed matters, but so does clarity—write clean code and explain as you go.

Refresh Core Stats

Revisit A/B testing foundations: p-values, Type I/II errors, and confidence intervals. Understand common pitfalls like peeking and sample ratio mismatches, as these often come up in interviews.

Build Experiment Design Playbook

Prepare a structured approach to experiment design—cover hypothesis framing, MDE calculations, and tradeoffs between guardrail and primary metrics. Be ready to walk through example scenarios live.

Tune ML Storytelling

Choose 2–3 ML projects that highlight end-to-end thinking—problem framing, model selection, evaluation, and business outcomes. Revolut values measurable impact, so bring metrics.

Mock Interviews & Peer Feedback

Practice thinking aloud, especially when faced with open-ended case questions. Ask a peer or mentor to challenge your assumptions and probe your reasoning—it’s the best proxy for the real loop.

FAQs

What Is the Average Salary for a Data Scientist at Revolut?

Average Base Salary

Average Total Compensation

Where Can I Read First-Hand Interview Experiences?

Check out the company interview guide. You’ll find interview experiences and questions of top tech companies.

Are There Live Job Postings for Revolut Data Scientists?

Yes! The Jobs Boards hosts recent job postings for different roles at different companies, including the Data Scientist role at Revolut.

Conclusion

Preparing for the Revolut data scientist interview means going beyond technical skill—it’s about thinking like a builder. Nail the fundamentals: timed SQL/Python drills, strong command of statistics and experiment design, and impactful ML storytelling that shows business results. Just as important, reflect Revolut’s values—Think Deeper, Never Settle—and be ready to thrive in fast-moving, autonomous squads.

Need more practice or insider tips? Schedule a mock interview to test your readiness under pressure. Check out our blog for practice prompts, deep dives, and insider strategies to help you get hired.

Looking to apply as a Revolut data scientist? Explore more insights and role-specific prep by visiting our software engineer and product manager interview guides.