Why OpenAI Is Hiring Forward-Deployed AI Engineers Like Palantir

The Shift from AI Models to AI Management

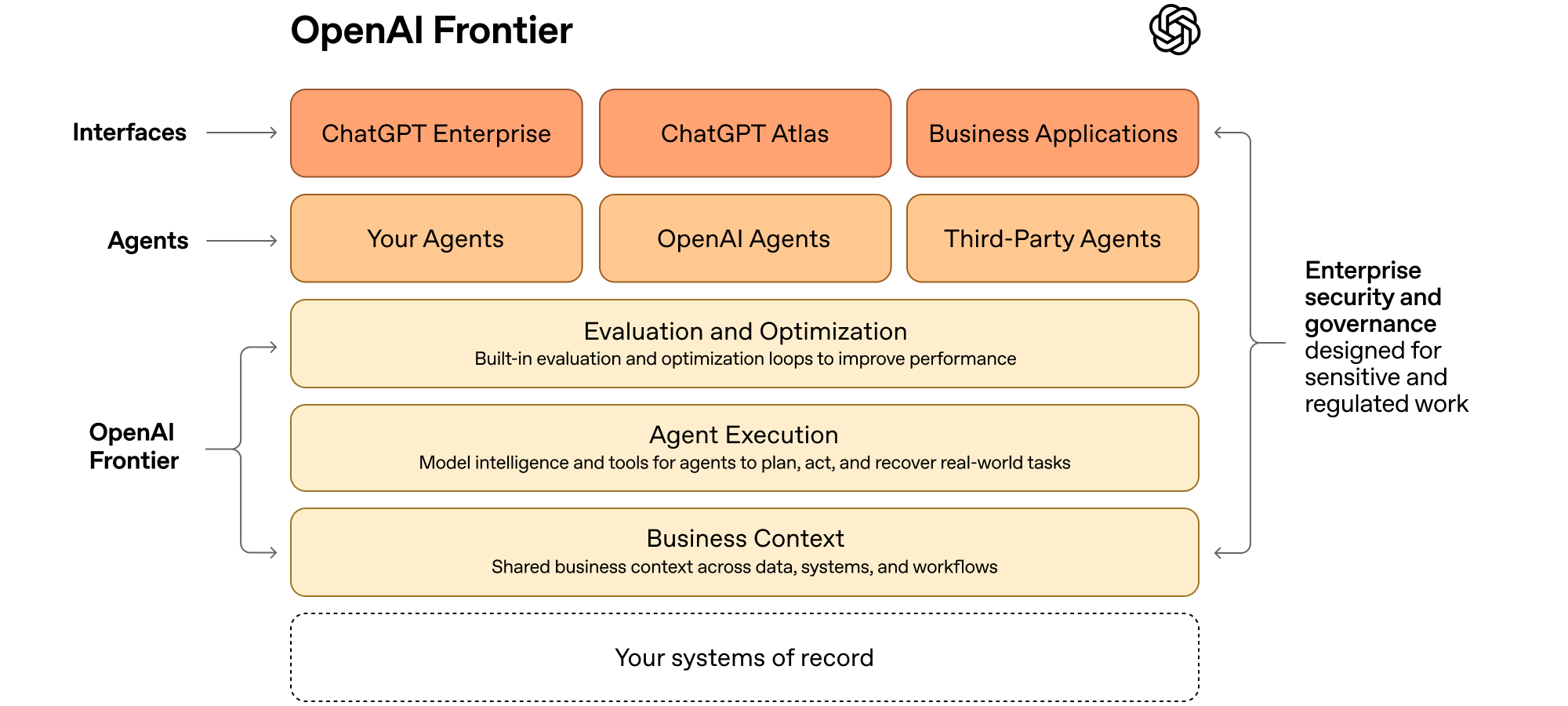

While OpenAI has made its name through artificial intelligence models, APIs, and chatbots, its approach is changing with the launch of OpenAI Frontier. Described as a framework for deploying AI agents, Frontier represents the company’s shift away from selling raw AI capabilities and toward managing AI agents like a workforce.

According to OpenAI’s product announcement, the company is building infrastructure where AI agents have defined roles, scoped permissions, persistent memory, and governance controls. Instead of just being stateless prompts firing off answers, these agents can operate across workflows, retain context, and be monitored much like employees inside an organization.

This is more than a feature update. It is a structural change with implications far beyond product strategy: for entry-level tech jobs, for how companies deploy AI at scale, and for why OpenAI itself is hiring engineers in a very different way than before.

What OpenAI Is Actually Building

At its core, Frontier is best understood as a control layer for AI agents. It’s described less like a chatbot interface and more like an orchestration system, one that allows companies to deploy multiple agents, assign them responsibilities, and manage how they interact with internal tools and data.

Source: OpenAI Frontier announcement

What’s new here is persistence and coordination, allowing teams to ‘move beyond isolated use cases to AI coworkers that work across the business,’ as per OpenAI’s announcement. Since agents can carry memory across tasks, access specific systems based on permissions, and collaborate with other agents inside real enterprise environments, they set themselves apart from single-turn prompts or copilots.
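To make the idea of a control layer concrete, here is a minimal Python sketch of an agent with a role, scoped permissions, and memory that persists across tasks, plus a governance log of every access attempt. This is purely illustrative and is not OpenAI's API: the names `Agent`, `ControlLayer`, `run`, and the tool names are all hypothetical, and the announcement does not describe how Frontier implements any of this.

```python
from dataclasses import dataclass, field


@dataclass
class Agent:
    """Hypothetical agent record: a role, scoped permissions, persistent memory."""
    name: str
    role: str
    permissions: frozenset[str]                       # tools this agent may use
    memory: list[str] = field(default_factory=list)   # context kept across tasks


@dataclass
class ControlLayer:
    """Hypothetical control layer that gates tool access and keeps an audit trail."""
    audit_log: list[tuple[str, str, bool]] = field(default_factory=list)

    def run(self, agent: Agent, tool: str, task: str) -> bool:
        allowed = tool in agent.permissions
        # Governance: every attempt is recorded, whether or not it is allowed.
        self.audit_log.append((agent.name, tool, allowed))
        if allowed:
            # Persistence: the agent carries context from one task to the next.
            agent.memory.append(f"{tool}: {task}")
        return allowed


layer = ControlLayer()
qa_agent = Agent("qa-1", "QA checker", frozenset({"test_runner"}))

first = layer.run(qa_agent, "test_runner", "run regression suite")
second = layer.run(qa_agent, "payroll_db", "read salaries")

print(first, second)    # True False
print(qa_agent.memory)  # ['test_runner: run regression suite']
print(layer.audit_log)  # both attempts are logged, including the denied one
```

The point of the sketch is the separation of concerns: the agent holds its role and memory, while a separate layer decides what it may touch and records what it tried to do, which is roughly what distinguishes a managed agent from a stateless prompt.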

These capabilities are what make AI behave less like a helpful assistant and more like a junior worker, at least in terms of scope: well-defined tasks, repeatable workflows, and ongoing responsibility. OpenAI’s own framing of AI agents emphasizes orchestration and management, which also hints at where enterprise value is actually being created.

Why Entry-Level Tech Jobs Are the First Pressure Point

If AI agents are starting to resemble junior workers, it’s not surprising where the pressure shows up first. Entry-level tech roles are disproportionately exposed because they’re built around exactly the kinds of tasks these agents now handle well, such as clearly scoped work, repetitive processes, and heavy reliance on documentation and rules.

In practice, this looks like AI agents performing QA checks, cleaning datasets, maintaining internal tools, or producing first-pass analyses that once belonged to junior analysts or engineers. In fact, large enterprises like HP, Oracle, and Uber are already experimenting with this kind of deployment, particularly in back-office and support-heavy functions.

Although this doesn’t mean entry-level jobs disappear overnight, it does raise the bar for what “entry-level” means. Moreover, the problem is less outright replacement and more a pipeline issue. As covered by a previous article on the risks of freezing entry-level tech hiring, there could be a long-term talent crisis characterized by fewer true starting roles, slower skill development, and harder career ladders. AI agents can intensify that dynamic by absorbing the very work that used to train new hires.

OpenAI Is Emulating Palantir’s Strategy

This is where OpenAI’s hiring strategy becomes crucial. The AI startup is increasingly adopting a Palantir-style model, bringing in forward-deployed engineers who work directly with customers rather than sitting behind a purely productized interface.

Palantir has long understood a hard truth of enterprise software, which is that complex systems don’t succeed through demos alone and require deep, often painful integration. OpenAI appears to be reaching the same conclusion.

Similar to Palantir’s forward-deployed AI engineer roles, which Interview Query has previously reported on, these highly in-demand positions blend technical expertise with customer-facing execution, essentially embedding engineers inside organizations to make AI actually work.

What OpenAI’s On-Site Engineers Signify

Hiring engineers to work on-site with customers is a strong signal about how OpenAI sees the future of AI adoption. Trust and compliance must be prioritized when AI agents are granted access to internal systems and operate across sensitive data found even in unstructured documents and sources like emails and reports.

While cybersecurity vendors like Palo Alto Networks are already working with enterprises to authenticate and restrict AI agents, OpenAI’s on-site AI engineers address the same need from inside the organization, where they can account for each customer’s specific context. They translate abstract agent capabilities into custom workflows that fit real companies, with all their legacy systems and political constraints. Since enterprise AI adoption struggles less with capability and more with implementation, OpenAI’s strategy reflects that reality.

The broader implication is that AI is shifting toward a services-plus-platform model, not just software licenses. Organizations that can afford this level of deployment will move faster. Those that can’t may find themselves stuck with underutilized tools and unmet expectations.

The Structural Shift in AI Strategy

Stepping back, the pattern is clear. OpenAI is no longer just selling AI; it is operationalizing labor. The shift runs across multiple dimensions: from general-purpose models to enterprise-focused agents, from SaaS-style interfaces to deployment and management layers, and from traditional software engineers to AI engineers with customer-facing skills.

For tech workers, new grads, and career switchers, the key point is that value is moving away from writing isolated code and toward designing, deploying, and managing systems that include AI as an active participant. As this shift is expected to broaden over the next few years, the people who stay relevant and employable will be those who can treat AI not as a mere tool, but as a coworker that helps solve problems.