Microsoft Machine Learning Engineer & Applied Scientist Interview Guide (2025)

Introduction

Landing a Microsoft applied scientist interview or a Microsoft machine learning engineer role opens the door to solving some of the most complex AI challenges at scale. These positions empower you to develop cutting-edge machine learning models that impact millions of users worldwide. Whether you’re passionate about advancing search algorithms in Bing or optimizing ad targeting through data science, Microsoft offers a unique environment to innovate and grow.

Role Overview & Culture

As a Microsoft Applied Scientist or Machine Learning Engineer, your daily responsibilities include owning the lifecycle of ML models powering products like Bing Search and Microsoft Ads. You’ll design and implement online experimentation frameworks and deploy scalable pipelines on Azure ML. Collaboration is key, as you work closely with product managers, data scientists, and engineers to translate business needs into impactful AI solutions. Microsoft fosters a culture grounded in a Growth Mindset and “One Microsoft,” encouraging autonomy while expecting data-driven decision making and cross-team alignment.

Why This Role at Microsoft?

This role offers the opportunity to work on products that serve billions of queries daily, leveraging Microsoft’s bespoke AI hardware accelerators and cloud infrastructure. Microsoft provides a fast track for individual contributors, with clear career progression from IC to Principal roles, alongside competitive compensation packages including generous RSU grants. To succeed, you’ll need to demonstrate both technical excellence and strategic thinking during the rigorous Microsoft applied scientist interview process, which tests your mastery of machine learning theory, coding, and problem-solving.

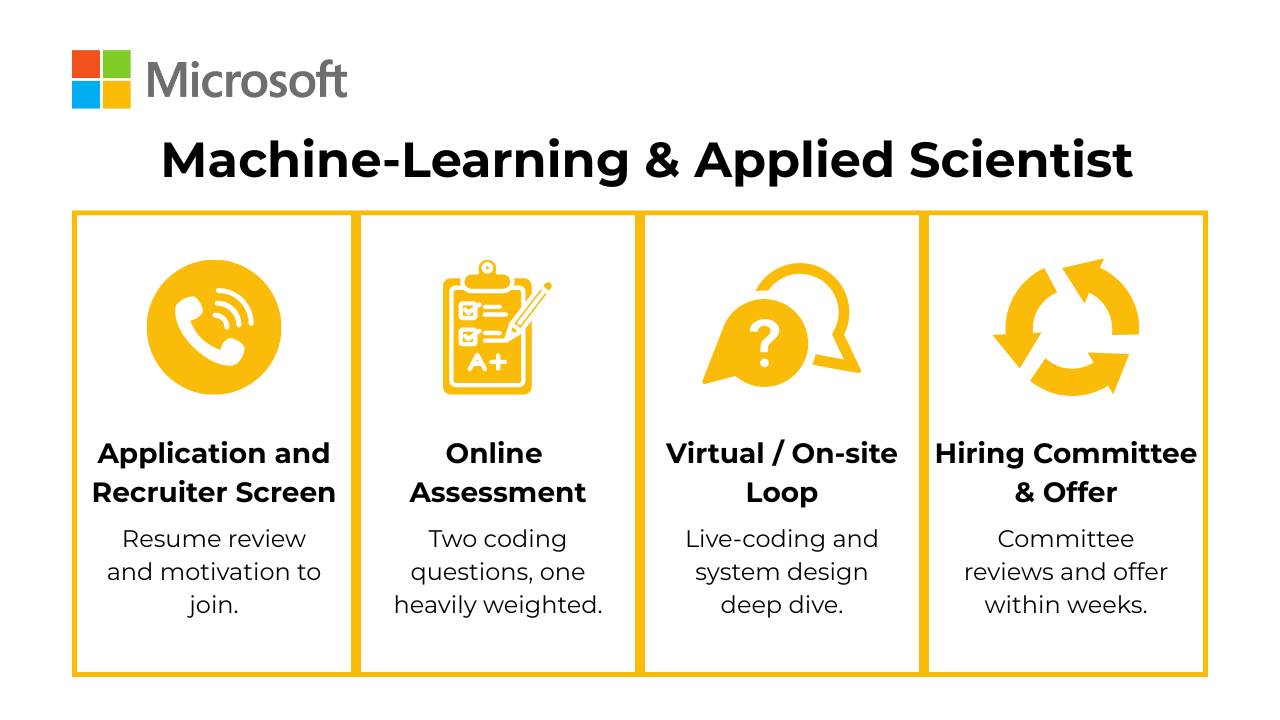

What Is the Interview Process Like for a Machine-Learning / Applied Scientist Role at Microsoft?

The Microsoft applied scientist interview process is designed to rigorously evaluate your coding skills, machine learning knowledge, research capabilities, and cultural fit. It typically starts with an initial recruiter screen focused on résumé fit and your motivation for joining Microsoft. Candidates then proceed to an online coding and ML quiz that tests Python proficiency, algorithms, and foundational ML concepts. Successful candidates move on to a comprehensive virtual or on-site interview loop consisting of multiple rounds that assess data structures and algorithms, deep ML theory, product sense, research depth, and behavioral fit.

Application & Recruiter Screen

This initial stage involves a recruiter reviewing your résumé to determine alignment with Microsoft’s technical requirements and organizational needs. Expect a conversation that explores your background, motivations for applying, and how your experience connects to the role. The recruiter also provides an overview of the interview process and assesses your communication skills and cultural fit, setting the tone for subsequent stages.

Online Coding & ML Quiz

Candidates who pass the recruiter screen proceed to a timed, 60-minute online assessment that tests fundamental skills in Python programming, data structures, algorithms, and basic machine learning concepts. This round gauges your problem-solving efficiency and foundational ML knowledge, ensuring you can handle the core technical demands before advancing to deeper evaluations.

Virtual / On-site Loop

This is the core interview phase, typically comprising five rounds, each focusing on different competencies:

- Data Structures & Algorithms: Tests your ability to write clean, efficient code and solve complex algorithmic problems.

- Machine Learning Concepts: Assesses your understanding of ML theory, model design, and statistical principles.

- Product Sense: Evaluates how you apply ML solutions to real-world product challenges, balancing user needs and business impact.

- Research Deep-Dive: Focuses on your past research or technical projects, probing your analytical thinking and innovation skills.

- Behavioral: Measures cultural fit, collaboration, and leadership potential aligned with Microsoft’s values.

These interviews are conducted virtually or on-site depending on circumstances, involving Microsoft engineers, data scientists, and PMs.

Hiring Committee & Offer

Once interviews conclude, a cross-functional hiring committee reviews all feedback and evaluates your performance holistically. The committee calibrates your level—commonly between L60 and L64—and decides on offer details, including compensation and career progression paths. This step ensures consistency in hiring standards and alignment with Microsoft’s talent strategy.

Differences by Level

Interview emphasis shifts based on seniority: L60 and L61 roles focus heavily on data structures and algorithmic skills, while candidates at L63 and above face additional rounds like machine learning system design to test architectural thinking. Principal-level candidates are assessed on strategic vision and their ability to influence cross-organizational initiatives, reflecting broader leadership responsibilities.

What Questions Are Asked in a Microsoft Machine-Learning / Applied Scientist Interview?

When preparing for Microsoft applied scientist interview questions, expect a rigorous assessment spanning coding, machine learning theory, experimentation, system design, and behavioral fit. Microsoft’s AI teams look for deep technical expertise as well as the ability to apply machine learning effectively within product contexts, especially on platforms like Azure ML. Practicing Azure Machine Learning and broader Microsoft AI interview questions helps you prepare for the wide range of challenges this role presents.

Coding / Algorithm Questions

This category tests your proficiency with data structures, algorithms, and efficient coding—foundational skills crucial for implementing scalable machine learning pipelines. Expect problems involving heaps, trees, and recursion, often reflecting real-time or streaming data scenarios encountered in Microsoft products. Demonstrating clean, optimal solutions and solid coding practices is essential to progress. Many Microsoft machine learning interview questions emphasize writing production-quality code under time constraints.

-

To solve this, you need to join the employees and departments tables, filter departments with at least ten employees, and calculate the percentage of employees earning over 100K. Then rank these departments by the calculated percentage and select the top three.

Given a slow-running query on a 100-million-row table in Redshift, how would you diagnose and optimize it?

This question evaluates your ability to troubleshoot performance at scale in high-volume data environments. Focus on techniques like analyzing the query plan (EXPLAIN), checking for proper distribution and sort keys, filtering early, and avoiding cross joins or nested loops. Mention compression encoding, the use of DISTSTYLE, and Redshift’s limitations with large scans. Interviewers expect queries that are not only correct but also efficient enough to scale with growing data.

Write a query to get the current salary for each employee after an ETL error.

To solve this, you need to identify the most recent salary entry for each employee, which can be done by selecting the maximum id for each unique combination of first_name and last_name. This ensures that you retrieve the latest salary record for each employee.

Implement a Type 2 Slowly Changing Dimension (SCD II) for Customer Profiles that preserves historical changes such as address and subscription-tier status.

Design a dedicated dimension table in Azure Synapse Analytics (or Azure SQL DB) with a surrogate customer_dim_id, effective_start_dt, effective_end_dt, and a current_flag. An Azure Data Factory (or Databricks) pipeline performs incremental loads: it detects changed source records via checksum/hash comparison, inserts a new versioned row, and updates the previous version’s effective_end_dt while flipping current_flag to 0. A back-fill procedure—triggered by historical snapshots landing in ADLS Gen2—replays changes without downtime, ensuring temporal joins always pull the correct version.
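A minimal Python sketch of the incremental-load step follows. It assumes an in-memory list of dicts stands in for the dimension table and a SHA-256 hash over the tracked attributes plays the role of the checksum. The column names follow the answer above. The function names, the TRACKED attribute list, and the 9999-12-31 open-end sentinel are illustrative assumptions. A production pipeline would more likely express this as a MERGE statement in Synapse or as a Databricks job.

```python
import hashlib
from datetime import date

TRACKED = ("address", "tier")   # hypothetical attributes whose history we keep
OPEN_END = date(9999, 12, 31)   # assumed sentinel end date for the current version


def row_hash(rec):
    """Checksum over the tracked attributes, used to detect changed records."""
    payload = "|".join(str(rec[col]) for col in TRACKED)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def apply_scd2(dim, source, load_date):
    """Apply one incremental load to an SCD Type 2 dimension held as a list of dicts."""
    current = {r["customer_id"]: r for r in dim if r["current_flag"] == 1}
    next_id = max((r["customer_dim_id"] for r in dim), default=0) + 1
    for rec in source:
        old = current.get(rec["customer_id"])
        new_hash = row_hash(rec)
        if old is not None and old["row_hash"] == new_hash:
            continue  # unchanged record: no new version needed
        if old is not None:
            # Close the previous version (some teams use load_date minus one day instead).
            old["effective_end_dt"] = load_date
            old["current_flag"] = 0
        new_row = {
            "customer_dim_id": next_id,  # surrogate key
            "customer_id": rec["customer_id"],
            **{col: rec[col] for col in TRACKED},
            "row_hash": new_hash,
            "effective_start_dt": load_date,
            "effective_end_dt": OPEN_END,
            "current_flag": 1,
        }
        dim.append(new_row)
        current[rec["customer_id"]] = new_row  # handle repeated keys within one batch
        next_id += 1
    return dim


dim = []
apply_scd2(dim, [{"customer_id": 7, "address": "Redmond", "tier": "basic"}], date(2024, 1, 1))
apply_scd2(dim, [{"customer_id": 7, "address": "Seattle", "tier": "premium"}], date(2024, 6, 1))
for row in dim:
    print(row["customer_dim_id"], row["address"], row["tier"], row["current_flag"])
```

Because old versions are closed rather than overwritten, a temporal join on effective_start_dt and effective_end_dt can recover whichever version was valid at any point in time.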

-

This problem tests your ability to implement recursive backtracking or dynamic programming approaches to combinatorial problems—skills essential for MLEs building complex feature engineering or optimization pipelines at Microsoft. Efficiently exploring combinations while managing state and pruning invalid paths mirrors challenges faced in hyperparameter tuning or search space exploration.
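The original prompt for this question is not reproduced in this guide. Purely as an illustration of recursive backtracking with pruning, here is a sketch of a hypothetical combination-sum problem. The task is to list every combination of positive candidates, with each candidate reusable, that adds up to a target.

```python
def combination_sum(candidates, target):
    """Return all unique combinations (candidates reusable) that sum to target.

    Assumes all candidates are positive integers.
    """
    candidates = sorted(set(candidates))
    results = []

    def backtrack(start, remaining, path):
        if remaining == 0:
            results.append(list(path))  # record a copy of the valid combination
            return
        for i in range(start, len(candidates)):
            c = candidates[i]
            if c > remaining:
                break  # prune: input is sorted, so every later candidate is too large
            path.append(c)
            backtrack(i, remaining - c, path)  # pass i, not i + 1, because reuse is allowed
            path.pop()  # undo the choice before trying the next candidate

    backtrack(0, target, [])
    return results


print(combination_sum([2, 3, 6, 7], 7))
```

Starting each recursive call at index i, rather than 0, keeps combinations in non-decreasing order, so the same multiset is never generated twice.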

-

This classic reservoir sampling problem evaluates your understanding of streaming algorithms and memory-efficient computations—core competencies for Microsoft MLEs dealing with real-time data streams from products like Azure Event Hubs or telemetry services. Implementing uniform random selection without knowing stream size tests your grasp of probabilistic algorithms and online learning, both vital for building adaptive ML models and data pipelines in scalable environments.
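Below is a sketch of the standard reservoir sampling approach (Algorithm R) in Python. It keeps k items and overwrites a random slot with decreasing probability, and with k = 1 it returns a single uniformly chosen element. The function name and the injectable rng parameter are illustrative choices.

```python
import random


def reservoir_sample(stream, k, rng=random):
    """Uniformly sample k items from an iterable of unknown length (Algorithm R)."""
    reservoir = []
    for i, item in enumerate(stream):
        if i < k:
            reservoir.append(item)  # fill the reservoir with the first k items
        else:
            j = rng.randint(0, i)  # inclusive bounds: i + 1 equally likely positions
            if j < k:
                reservoir[j] = item  # item i is kept with probability k / (i + 1)
    return reservoir


print(reservoir_sample(range(1_000_000), 3, rng=random.Random(42)))
```

Each element ends up in the sample with probability k / n while using only O(k) memory. This is why the technique suits unbounded telemetry streams whose length is unknown in advance.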

-

This question probes your ability to model probabilistic systems and implement weighted random sampling—techniques frequently applied in recommendation systems, anomaly detection, or synthetic data generation at Microsoft. Handling uneven distributions and maintaining correct sampling probabilities demonstrate your practical skills in implementing ML algorithms that reflect real-world stochastic processes.
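The prompt itself is not shown here, so this sketch assumes the common form of the question: draw an item with probability proportional to its weight. It builds prefix sums once and binary-searches them on each draw. Python’s built-in random.choices(items, weights=weights) covers the same need. The hand-rolled version shows the mechanism interviewers usually ask about, and the item names are hypothetical.

```python
import bisect
import itertools
import random


def make_weighted_sampler(items, weights, rng=random):
    """Return a function that draws items[i] with probability weights[i] / sum(weights)."""
    if not items or len(items) != len(weights):
        raise ValueError("items and weights must be non-empty and equal in length")
    if any(w < 0 for w in weights) or sum(weights) <= 0:
        raise ValueError("weights must be non-negative with a positive total")
    cumulative = list(itertools.accumulate(weights))  # prefix sums, built once in O(n)
    total = cumulative[-1]

    def sample():
        r = rng.random() * total                   # uniform point in [0, total)
        idx = bisect.bisect_right(cumulative, r)   # first prefix sum > r, O(log n)
        return items[min(idx, len(items) - 1)]     # guard against float round-up at the edge

    return sample


draw = make_weighted_sampler(["ad_a", "ad_b", "ad_c"], [0.2, 0.3, 0.5])
print([draw() for _ in range(5)])
```

Using bisect_right means a zero-weight item, whose prefix sum equals its predecessor’s, can never be selected.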

Machine-Learning Concept Questions

These questions evaluate your grasp of core machine learning theory, including model training, optimization, regularization, and trade-offs like bias and variance. Candidates should be comfortable deriving update rules for common algorithms and explaining key concepts clearly. Given Microsoft’s cloud AI ecosystem, expect questions tied to deployment on Azure ML, reflecting the integration of ML models into scalable production environments. Mastery of these topics underpins your ability to contribute meaningfully to product development and research.

How would you interpret coefficients of logistic regression for categorical and boolean variables?

At Microsoft, translating model outputs into actionable insights for diverse stakeholders is essential. This question tests your ability to communicate logistic regression results clearly—explaining odds ratios, interpreting coefficients relative to baselines for categorical variables, and how boolean features impact log-odds. Microsoft Applied Scientists often collaborate with product and business teams who require intuitive explanations, so articulating dummy variable encoding and interaction effects using familiar analogies (e.g., feature impact on click-through rates or user conversion) is valuable.
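With a single boolean feature and an intercept, the maximum-likelihood logistic regression fit has a closed form. The intercept is the log-odds in the baseline (False) group, and the coefficient is the log of the odds ratio between the two groups. The sketch below computes both from hypothetical click counts, which makes the "exponentiate the coefficient to get an odds ratio" interpretation concrete. With a dummy-encoded categorical variable and no other predictors, each category’s coefficient is likewise a log odds ratio relative to the omitted baseline category.

```python
import math

# Hypothetical click counts split by a boolean feature (saw_banner).
clicks_false, no_clicks_false = 50, 950   # baseline group: saw_banner = False
clicks_true, no_clicks_true = 90, 910     # saw_banner = True

# With one boolean predictor plus an intercept, the MLE fit reproduces the group log-odds.
intercept = math.log(clicks_false / no_clicks_false)        # log-odds when saw_banner = False
beta = math.log(clicks_true / no_clicks_true) - intercept   # change in log-odds when True
odds_ratio = math.exp(beta)                                 # multiplicative change in the odds

rate_false = clicks_false / (clicks_false + no_clicks_false)
rate_true = clicks_true / (clicks_true + no_clicks_true)

print(f"intercept = {intercept:.3f}, beta = {beta:.3f}, odds ratio = {odds_ratio:.2f}")
print(f"click rate {rate_false:.1%} -> {rate_true:.1%} (ratio {rate_true / rate_false:.2f})")
```

In this example the click rate moves from 5% to 9%, a 1.8x ratio of probabilities, while the odds ratio is about 1.88. Keeping odds and probabilities distinct is a frequent point of confusion when presenting these results to stakeholders.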

How would you explain the concept of linear regression to three different audiences?

Microsoft’s collaborative environment requires you to adapt your communication style based on your audience, whether they are engineers, product managers, or business leaders. This question evaluates your ability to distill complex concepts: from a simple metaphor for novices (“drawing the best-fit line to predict outcomes”), to technical intuition for data scientists, and deeper discussions on assumptions and optimization for expert colleagues. Demonstrating this flexibility ensures your insights drive informed decisions across multidisciplinary teams.
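For the more technical audiences, it can help to show that the "best-fit line" is simply the line that minimizes squared error. For one feature, that line has a closed form. Below is a minimal plain-Python sketch, in which the function name is illustrative.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y ≈ intercept + slope * x with a single feature."""
    n = len(xs)
    if n < 2:
        raise ValueError("need at least two points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx                    # minimizes the sum of squared vertical errors
    intercept = mean_y - slope * mean_x  # the fitted line passes through the means
    return intercept, slope


print(fit_line([1, 2, 3, 4, 5], [2.1, 3.9, 6.2, 7.8, 10.1]))
```

For expert colleagues, the same result generalizes to the normal equations with multiple features. This naturally opens the discussion of assumptions such as linearity and homoscedastic errors.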

What’s the difference between Lasso and Ridge Regression?

Regularization techniques like Lasso and Ridge are key tools in Microsoft’s scalable ML solutions for handling high-dimensional data and preventing overfitting. This question tests your understanding of their mathematical differences. Lasso’s L1 penalty can shrink some coefficients exactly to zero, effectively performing feature selection, whereas Ridge’s L2 penalty shrinks all coefficients toward zero without eliminating any. Emphasize how these methods balance model interpretability and predictive performance, which is crucial for Microsoft’s diverse applications ranging from cloud analytics to personalized recommendations.
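One way to make the difference concrete is the special case where the design matrix has orthonormal columns (XᵀX = I). There, both estimators have closed forms in terms of the ordinary least-squares coefficients. The sketch assumes the objectives ½‖y − Xβ‖² + λ‖β‖₁ for Lasso and ½‖y − Xβ‖² + (λ/2)‖β‖² for Ridge; other texts scale λ differently. Under these conventions, Lasso soft-thresholds each coefficient while Ridge rescales it.

```python
def ridge_orthonormal(beta_ols, lam):
    """Ridge under an orthonormal design: every coefficient is scaled by 1 / (1 + lam)."""
    return [b / (1.0 + lam) for b in beta_ols]


def lasso_orthonormal(beta_ols, lam):
    """Lasso under an orthonormal design: soft-thresholding at lam."""
    out = []
    for b in beta_ols:
        if abs(b) <= lam:
            out.append(0.0)        # small coefficients are set exactly to zero
        elif b > 0:
            out.append(b - lam)    # large positive coefficients move toward zero by lam
        else:
            out.append(b + lam)    # large negative coefficients move toward zero by lam
    return out


beta_ols = [3.0, -0.4, 0.2, -1.5]
print("ridge:", ridge_orthonormal(beta_ols, 1.0))  # all shrunk, none exactly zero
print("lasso:", lasso_orthonormal(beta_ols, 0.5))  # the two small coefficients become 0.0
```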

-

Microsoft Applied Scientists must choose models not only based on accuracy but also on interpretability, scalability, and business context. This question assesses your ability to compare model assumptions: linear regression assumes linear relationships, while random forests can capture complex, non-linear patterns. Discuss trade-offs such as model explainability (important for stakeholder trust) versus predictive power, data volume considerations, and robustness to outliers—factors critical when deploying ML models on Azure or other Microsoft platforms.

-

This question probes your awareness of optimization edge cases, which is vital when building robust ML systems at Microsoft. On perfectly separable data, logistic regression may fail to converge: the likelihood keeps increasing as the weights grow without bound, so no finite maximum-likelihood estimate exists. Discuss how regularization, such as an L2 penalty, keeps the weights finite so that training converges, trading a small amount of bias for lower variance and better generalization. Such insights are important for production-level model training, especially in scenarios like anomaly detection or quality assurance in Microsoft’s AI products.
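A tiny experiment makes this failure mode visible. The sketch trains a single-weight logistic regression, with no intercept, by plain gradient descent on a hypothetical four-point separable dataset. The unregularized weight keeps creeping upward as training continues, while an L2-penalized weight settles at a finite value. The learning rate, penalty strength, and step counts are arbitrary illustrative choices.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_weight(xs, ys, lam, steps, lr=0.5):
    """Gradient descent on mean logistic loss + (lam / 2) * w**2 for one weight (no intercept)."""
    w = 0.0
    for _ in range(steps):
        grad = sum((sigmoid(w * x) - y) * x for x, y in zip(xs, ys)) / len(xs)
        grad += lam * w  # gradient of the L2 penalty
        w -= lr * grad
    return w


# Perfectly separable toy data: negative x -> label 0, positive x -> label 1.
xs = [-2.0, -1.0, 1.0, 2.0]
ys = [0, 0, 1, 1]

for steps in (100, 1_000, 10_000):
    w_plain = train_weight(xs, ys, lam=0.0, steps=steps)  # keeps growing with more steps
    w_l2 = train_weight(xs, ys, lam=0.1, steps=steps)     # converges to a finite value
    print(f"steps={steps:>6}  unregularized w={w_plain:.2f}  L2 w={w_l2:.2f}")
```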

-

This question tests your understanding of model selection trade-offs in real-world applications, a key skill at Microsoft where resource efficiency and scalability matter. While deep learning excels in complex data like images or speech, SVMs can be preferable for smaller datasets, lower computational cost, and better interpretability. You should discuss scenarios where SVMs offer advantages—such as structured data or when training resources are limited—and contrast these with the flexibility and scalability of deep learning models, demonstrating balanced judgment critical for deploying ML solutions on Azure and other Microsoft platforms.

Experimentation & Product Insight Questions

Data and applied scientists at Microsoft play a pivotal role in designing experiments that validate machine learning innovations and quantify product impact. You will be asked to outline online A/B tests for features such as speech transcription or search ranking, and estimate business or user experience lifts from latency improvements or accuracy gains. These questions test your ability to connect technical metrics with customer outcomes and business objectives, reinforcing Microsoft’s customer-obsessed culture.

How would you design and prioritize experiments to improve user onboarding conversion rates?

This question evaluates your ability to identify friction points in the onboarding flow and formulate testable hypotheses that drive user activation. You might apply a prioritization framework such as ICE (Impact, Confidence, Ease) to weigh potential gains against the resources required, so that your experiments align with broader retention and lifetime value goals. Discussing scalable experiment designs and clear success metrics highlights your proficiency in driving product growth through systematic testing.
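Choosing a clear success metric also means knowing how much traffic an onboarding test needs. A common back-of-the-envelope check is the normal-approximation sample size for comparing two conversion rates. The sketch below assumes a two-sided test at significance alpha with the given power, using hypothetical baseline and target completion rates.

```python
import math
from statistics import NormalDist


def samples_per_arm(p_control, p_treatment, alpha=0.05, power=0.8):
    """Approximate users per arm for a two-sided two-proportion z-test."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    variance = p_control * (1 - p_control) + p_treatment * (1 - p_treatment)
    effect = p_treatment - p_control
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


# Hypothetical: onboarding completion is 20% today; we want to detect a lift to 22%.
print(samples_per_arm(0.20, 0.22))
```

Halving the detectable lift roughly quadruples the required sample, which is often what decides whether an experiment is worth prioritizing at all.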

How would you prioritize and structure multiple simultaneous growth experiments when resources are limited?

Microsoft values strategic resource allocation and maximizing ROI. This question tests your ability to organize and prioritize a portfolio of experiments using frameworks such as RICE or PIE. Demonstrating how you manage engineering bandwidth, avoid experiment overlap, and focus on compounding learnings aligns with Microsoft’s efficient, results-driven culture.
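RICE is usually computed as reach × impact × confidence ÷ effort. The sketch below ranks a hypothetical backlog with that formula. The experiment names, reach figures, and the impact scale (0.25 for minimal up to 3 for massive) are illustrative assumptions, and teams often calibrate the scales differently.

```python
from dataclasses import dataclass


@dataclass
class Experiment:
    name: str
    reach: float       # users touched per quarter (hypothetical estimate)
    impact: float      # e.g. 0.25 = minimal, 1 = medium, 3 = massive
    confidence: float  # 0.0 to 1.0
    effort: float      # person-months

    @property
    def rice(self) -> float:
        """RICE score = reach * impact * confidence / effort."""
        return self.reach * self.impact * self.confidence / self.effort


backlog = [
    Experiment("Shorter sign-up form", reach=40_000, impact=1.0, confidence=0.8, effort=2),
    Experiment("Personalized welcome tour", reach=25_000, impact=2.0, confidence=0.5, effort=6),
    Experiment("Pricing page copy test", reach=60_000, impact=0.5, confidence=0.9, effort=1),
]

for exp in sorted(backlog, key=lambda e: e.rice, reverse=True):
    print(f"{exp.name:<28} RICE = {exp.rice:,.0f}")
```

Cheap, broad tests float to the top under this formula. That is one reason to pair the score with a check that high-impact but expensive bets are not permanently starved.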

How would you structure an experiment to test a value proposition across different customer segments?

Segmenting users effectively and tailoring hypotheses is crucial when optimizing diverse products at Microsoft. This question assesses your approach to designing A/B tests that capture segment-specific responses, selecting relevant success metrics, and iterating based on data. Demonstrating deep user empathy and alignment with business goals reflects Microsoft’s customer-centric innovation.
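To read segment-specific responses, one simple option is a two-proportion z-test run separately in each segment. The sketch uses hypothetical conversion counts for two made-up segments and a pooled standard error.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference in conversion rates (pooled standard error)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return p_b - p_a, z, p_value


# Hypothetical: (control conversions, control users, treatment conversions, treatment users)
segments = {
    "small_business": (480, 4000, 560, 4000),
    "enterprise": (300, 2500, 310, 2500),
}

for name, counts in segments.items():
    lift, z, p = two_proportion_z_test(*counts)
    print(f"{name:<15} lift={lift:+.3f} z={z:.2f} p={p:.4f}")
```

Testing many segments inflates the chance of a false positive. Pre-registering the segments of interest or applying a correction such as Bonferroni keeps the conclusions honest.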

How would you measure the impact of reducing latency in a real-time AI-driven feature?

Latency improvements can significantly affect user satisfaction in Microsoft products like Azure Cognitive Services. This question evaluates your ability to define appropriate KPIs—such as engagement, retention, or error rates—and design experiments to quantify the causal impact of performance optimizations. Your answer should highlight methods to isolate effects and manage confounding variables.

Describe how you would validate and monitor a machine learning model deployed in production.

This question tests your understanding of continuous evaluation and monitoring frameworks critical to Microsoft’s AI product reliability. You should discuss metrics for model accuracy, drift detection, and alerting mechanisms, as well as strategies for iterative model improvement informed by experimental data and user feedback.
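One widely used drift signal is the Population Stability Index (PSI), which compares binned feature distributions between a baseline, such as training data, and live traffic. Below is a minimal sketch assuming fixed bin edges and a small floor on empty bins. The often-quoted thresholds (below 0.1 stable, above 0.25 a significant shift) are rules of thumb rather than a standard.

```python
import bisect
import math


def psi(expected, actual, edges, eps=1e-4):
    """Population Stability Index between a baseline sample and a live sample.

    edges: sorted interior bin boundaries shared by both samples.
    eps: floor on bin proportions so empty bins do not produce log(0).
    """
    def proportions(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            counts[bisect.bisect_right(edges, v)] += 1
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [i / 100 for i in range(100)]    # hypothetical training-time feature values
live = [0.5 + i / 200 for i in range(100)]  # hypothetical live values, shifted upward
print(f"PSI = {psi(baseline, live, edges=[0.25, 0.5, 0.75]):.2f}")
```

In a deployed system, a score like this would be computed on a schedule per feature and wired to an alert or a retraining trigger.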

How would you approach optimizing feature rollout strategies to balance risk and learning speed?

Microsoft often employs staged rollouts for AI features to mitigate risks. This question probes your ability to design gradual feature releases, select appropriate user cohorts, and monitor early indicators to inform rollout decisions. It evaluates your strategic thinking in balancing experiment velocity with product stability.
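A common building block for staged rollouts is deterministic hash-based bucketing. Each user maps to a stable bucket, so raising the rollout percentage only adds users and never reshuffles existing cohorts. The sketch below is an assumption-level illustration: the feature name, bucket count, and ramp schedule are hypothetical.

```python
import hashlib


def rollout_bucket(user_id: str, feature: str, buckets: int = 10_000) -> int:
    """Map a user to a stable bucket in [0, buckets) for a given feature."""
    key = f"{feature}:{user_id}".encode("utf-8")  # salt with feature name so features ramp independently
    digest = hashlib.sha256(key).digest()
    return int.from_bytes(digest[:8], "big") % buckets


def is_enabled(user_id: str, feature: str, percent: float) -> bool:
    """True if the user falls inside the current rollout percentage (0-100)."""
    return rollout_bucket(user_id, feature) < percent * 100  # 10,000 buckets = 0.01% steps


# Hypothetical ramp: 1% -> 5% -> 25% -> 100%, advancing only if guardrail metrics hold.
users = [f"user{i}" for i in range(20_000)]
for pct in (1, 5, 25, 100):
    enabled = sum(is_enabled(u, "new_ranker", pct) for u in users)
    print(f"{pct:>3}% target -> {enabled / len(users):.2%} of users enabled")
```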

System / Pipeline Design Questions

System design interviews assess your understanding of end-to-end machine learning infrastructure, including real-time feature stores, data ingestion, and streaming analytics using tools like Kafka and Azure Stream Analytics. Expect scenarios involving AI-powered solutions such as copilot for data engineering, which automatically generates features or optimizes workflows. These questions evaluate your architectural thinking and your ability to build robust, scalable ML systems within Microsoft’s AI-powered platforms.

How would you design a feature store to support both online and offline model training and inference?

Microsoft Applied Scientists build AI systems requiring consistent, reproducible features across training and serving. Your design should leverage Azure Cosmos DB or Redis for low-latency online access, alongside batch processing with Azure Synapse Analytics or Data Lake for offline computations. Discussing feature versioning, latency guarantees, and synchronization mechanisms highlights your ability to deliver high-quality ML pipelines that integrate seamlessly with Microsoft’s AI platforms.
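The core contract of such a feature store can be sketched in a few lines. A single write path feeds two read paths: a "latest value" lookup for online serving and a point-in-time ("as of") lookup for building training sets without leaking future values. The class and method names below are hypothetical. Real feature stores expose their own APIs, none of which are used here.

```python
import bisect
from collections import defaultdict


class ToyFeatureStore:
    """In-memory stand-in for a feature store with one write path and two read paths."""

    def __init__(self):
        # (entity_id, feature_name) -> parallel lists of timestamps and values, sorted by time
        self._times = defaultdict(list)
        self._values = defaultdict(list)

    def write(self, entity_id, feature, ts, value):
        key = (entity_id, feature)
        i = bisect.bisect_right(self._times[key], ts)  # keep history sorted even for late writes
        self._times[key].insert(i, ts)
        self._values[key].insert(i, value)

    def get_online(self, entity_id, feature):
        """Serving path: latest value (a low-latency key-value store in practice)."""
        values = self._values.get((entity_id, feature))
        return values[-1] if values else None

    def get_as_of(self, entity_id, feature, ts):
        """Training path: value known at time ts, so training never sees future data."""
        key = (entity_id, feature)
        i = bisect.bisect_right(self._times.get(key, []), ts)
        return self._values[key][i - 1] if i else None


store = ToyFeatureStore()
store.write("user42", "clicks_7d", ts=100, value=3)
store.write("user42", "clicks_7d", ts=200, value=8)
print(store.get_online("user42", "clicks_7d"))          # latest value: 8
print(store.get_as_of("user42", "clicks_7d", ts=150))   # value known at t=150: 3
```

Serving both paths from the same write history is what keeps training and serving features consistent.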

What architecture would you use to serve real-time ML predictions at low latency under high load?

This question probes your ability to engineer scalable, fault-tolerant prediction services on Azure, critical for Microsoft products with strict latency demands like Bing search ranking or Teams features. Consider managed Azure ML endpoints, microservice architectures with Azure Functions and API Management, autoscaling policies, caching layers with Azure Cache for Redis, and robust monitoring via Azure Monitor. Balancing cost, performance, and reliability embodies Microsoft’s engineering excellence.

How would you build a model to detect if a post on a marketplace is talking about selling a gun?

At Microsoft, responsible AI and compliance are paramount. This question tests your ability to define problem boundaries clearly and to engineer robust text features using Azure Cognitive Services or custom NLP pipelines. It also tests whether you can select models such as gradient boosted trees and combine them with techniques like class weighting or resampling to cope with heavy class imbalance. Balancing false positives against false negatives aligns with Microsoft’s commitment to ethical AI deployment, ensuring models respect user privacy and legal frameworks.
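Blocking a legitimate listing and missing a gun sale carry different costs, so the decision threshold is usually tuned separately from model training. Below is a minimal sketch assuming you already have model scores and labels on a held-out set; the numbers are made up. It picks the lowest threshold that meets a target precision, which maximizes recall subject to that constraint because recall can only fall as the threshold rises.

```python
def precision_recall_at(scores, labels, threshold):
    """Precision and recall when flagging every item with score >= threshold."""
    tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y == 1)
    precision = tp / (tp + fp) if tp + fp else 1.0  # convention: no flags means no false alarms
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def pick_threshold(scores, labels, min_precision):
    """Lowest candidate threshold whose precision meets the target (maximizes recall)."""
    for t in sorted(set(scores)):
        precision, recall = precision_recall_at(scores, labels, t)
        if precision >= min_precision:
            return t, precision, recall
    return None


# Hypothetical toy held-out set: 1 = gun-sale post, 0 = benign post.
scores = [0.95, 0.91, 0.85, 0.80, 0.72, 0.60, 0.40, 0.30, 0.20, 0.10]
labels = [1, 1, 0, 1, 0, 0, 1, 0, 0, 0]
print(pick_threshold(scores, labels, min_precision=0.75))
```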

Design a system to minimize wrong orders

This scenario evaluates your skill in integrating machine learning with operational workflows, a common need in Microsoft’s e-commerce and supply chain applications. By leveraging Azure Data Factory for data ingestion and Azure ML for predictive modeling, you can flag risky orders preemptively. Incorporating real-time feedback loops from customer support exemplifies Microsoft’s data-driven culture and continuous improvement philosophy aimed at enhancing user satisfaction.

How would you structure a retraining pipeline that adapts models as new data arrives?

Continuous model improvement is a cornerstone of Microsoft’s AI strategy. Use Azure ML Pipelines or Kubernetes workflows to orchestrate retraining triggered by performance decay, data drift, or scheduled intervals. Emphasize CI/CD integration with automated validation and seamless deployment using Azure DevOps. Model registry versioning ensures traceability and rollback capability, supporting Microsoft’s commitment to operational robustness.

Let’s say your model’s performance drops in production. What diagnostics and steps would you take?

MLE and Applied Scientists at Microsoft are expected to proactively monitor and troubleshoot live models. Discuss identifying causes such as feature distribution shifts, label changes, or serving inconsistencies using Azure Monitor and Application Insights. Propose steps like retraining, rollback to previous versions via Azure ML Model Registry, and enhanced monitoring alerts. This approach reflects Microsoft’s principle of “Delivering Success” through operational vigilance.

How would you handle model versioning and rollback in a live ML system?

Robust version control and rollback strategies are essential in Microsoft’s enterprise AI deployments. Outline approaches like blue-green and canary releases managed via Azure DevOps pipelines, semantic versioning standards, and automated performance checks pre-rollout. Emphasize traceability and rapid rollback to mitigate risks, ensuring Microsoft’s AI systems maintain high availability and user trust.
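The rollback mechanics can be illustrated with a toy registry that keeps immutable versions, a pointer to the live version, and a history of previous promotions. This is a hypothetical sketch and does not use the Azure ML model registry API. Canary traffic splitting, which would sit in front of promote, is left out.

```python
from dataclasses import dataclass, field


@dataclass
class ModelRegistry:
    """Toy registry: immutable versions, a live pointer, and a promotion history."""
    versions: dict = field(default_factory=dict)   # version string -> artifact URI
    live: str = ""                                  # version currently serving traffic
    history: list = field(default_factory=list)    # previously live versions, newest last

    def register(self, version: str, artifact_uri: str) -> None:
        if version in self.versions:
            raise ValueError(f"version {version} already registered; versions are immutable")
        self.versions[version] = artifact_uri

    def promote(self, version: str) -> None:
        """Point live traffic at a registered version (e.g. after canary checks pass)."""
        if version not in self.versions:
            raise KeyError(version)
        if self.live:
            self.history.append(self.live)
        self.live = version

    def rollback(self) -> str:
        """Return live traffic to the previously promoted version."""
        if not self.history:
            raise RuntimeError("no earlier version to roll back to")
        self.live = self.history.pop()
        return self.live


registry = ModelRegistry()
registry.register("1.0.0", "models/ranker/1.0.0")  # hypothetical artifact paths
registry.register("1.1.0", "models/ranker/1.1.0")
registry.promote("1.0.0")
registry.promote("1.1.0")
print(registry.live, "->", registry.rollback())
```

Because registered versions are never overwritten, rolling back is just a pointer change. This is what makes rapid rollback cheap and traceable.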

How would you monitor and alert on feature drift and prediction performance in a real-time ML model deployed via Azure ML?

Effective monitoring safeguards Microsoft’s AI product quality. Describe setting up dashboards and alerts for input feature distributions, prediction latency, and accuracy using Azure Monitor, Prometheus, or custom tools integrated with Azure ML endpoints. Discuss root cause analysis workflows and automatic retraining triggers, demonstrating a proactive mindset aligned with Microsoft’s emphasis on scalable, self-healing AI systems.

Behavioral & Culture-Fit Questions

Behavioral interviews gauge your alignment with Microsoft’s Growth Mindset and collaborative culture. You’ll be asked to share stories illustrating how you solved customer pain points using machine learning insights, demonstrating ownership, learning agility, and impact. Structuring answers with the STAR method helps convey your problem-solving process and leadership qualities effectively, showing that you are a strong cultural and technical fit for Microsoft’s AI teams.

Tell me about a time when you exceeded expectations during a project. What did you do, and how did you accomplish it?

Microsoft values individuals who go beyond baseline goals by identifying new opportunities and driving exceptional outcomes. Focus on how you expanded project scope, uncovered unmet user needs, or delivered solutions that had significant impact. Highlight your initiative, ownership, and how your actions helped clarify goals or accelerate progress, embodying Microsoft’s drive to deliver success.

Tell me about a time you received tough feedback. What was it, and what did you do afterward?

This question assesses your growth mindset and resilience—core to Microsoft’s culture. Choose an instance where feedback prompted you to change your approach, such as improving collaboration, simplifying complex solutions, or communicating more clearly to create alignment. Emphasize your ability to reflect, adapt, and generate energy in the team through learning.

-

Microsoft expects you to create clarity around competing priorities and navigate trade-offs effectively. Describe how you managed technical complexity alongside stakeholder urgency, using frameworks or tools to align expectations and maintain focus on strategic goals. Show how you generated energy in the team to meet deadlines without compromising quality.

How have you demonstrated Microsoft’s leadership principles in your previous roles?

Anchor your answer in Microsoft’s principles of creating clarity, generating energy, and delivering success. Discuss concrete examples where you created clarity by setting clear objectives, generated energy by motivating cross-functional teams, or delivered success by driving measurable impact. Highlight actions rooted in empathy, data-driven decision-making, and collaboration across diverse groups.

-

Frame your strengths around navigating ambiguity—whether through designing experiments with limited data, diving deep into complex problems, or rallying teams around incomplete requirements. Microsoft values builders who thrive in dynamic environments. For growth, share how you’ve learned to better align with stakeholders or scale your solutions responsibly.

Describe a time you led a team through a complex challenge involving ML deployment or data infrastructure. How did you maintain alignment and momentum?

This question probes your leadership and project management skills, essential for Microsoft Applied Scientists who often collaborate across engineering, data, and product teams. Explain how you created clarity by defining shared goals, generated energy by fostering open communication, and ensured delivery through effective coordination and problem-solving.

How to Prepare for a Machine-Learning / Applied Scientist Role at Microsoft

Preparing for a Machine Learning or Applied Scientist role at Microsoft requires a balanced approach that combines technical expertise, problem-solving skills, and cultural alignment. Success depends not only on your mastery of machine learning concepts and coding but also on how well you communicate your thinking and collaborate with interviewers. Below are key strategies to help you prepare effectively and stand out during the Microsoft applied scientist interview process.

Study the Role & Culture

Understanding Microsoft’s leadership principles is essential for interview success. Focus on how your past research or product wins demonstrate your ability to Create Clarity by setting clear goals and communicating effectively, Generate Energy by inspiring collaboration and driving momentum, and Deliver Success through measurable impact. Emphasize your capability to innovate autonomously while working as part of a unified team. Aligning with these principles shows that you are a strong cultural fit for Microsoft’s data science and applied AI teams.

Practice Mix

Microsoft interviews typically cover a blend of topics: around 40% focus on data structures and algorithms, 30% on machine learning concepts, 20% on experimental design and product insight, and 10% on behavioral questions. Allocate your study time accordingly to build a well-rounded skill set that meets the role’s demands. Practicing across these areas ensures you’re prepared for the diverse challenges of the interview.

Think Out Loud & Clarify

Interviewers appreciate candidates who verbalize their thought process clearly and ask clarifying questions. This approach fosters collaboration, ensures alignment, and allows interviewers to understand your reasoning. Practice articulating each step of your problem-solving and validating assumptions before diving into solutions.

Brute Force → Optimize

Start your coding or algorithm answers with a straightforward, brute-force approach to demonstrate problem understanding. Then, narrate how you would optimize it through vectorization, parallelization, or more efficient algorithms. This shows depth in problem solving and your ability to improve solutions iteratively, which is highly valued at Microsoft.

Mock Interviews & Feedback

Engage in mock interviews, ideally with former Microsoft applied scientists or knowledgeable peers. Recording and reviewing these sessions helps identify areas for improvement in content, communication, and pacing. Incorporate feedback continuously to refine your answers and build confidence before the real interview.

FAQs

What Is the Average Salary for an Applied Scientist / ML Engineer at Microsoft?

[Charts: Average Base Salary; Average Total Compensation]

How Long Does the Microsoft ML Interview Process Take?

The Microsoft Machine Learning and Applied Scientist interview process typically spans 3 to 5 weeks. For senior-level candidates, interview loops may extend to 6 or 7 weeks due to additional rounds and deeper assessments.

Are There Job Postings for Microsoft ML Roles on Interview Query?

Yes! Browse current Microsoft machine learning and applied scientist roles and unlock insider interview reports to prepare effectively. Explore the latest openings and exclusive resources to boost your chances.

Conclusion

Mastering these Microsoft AI interview questions and thoroughly understanding the interview process position you strongly for offer day. Success in this role goes beyond technical skill—it requires structured problem-solving, clear communication, and strategic thinking that aligns with Microsoft’s AI-driven mission.

Enhance your preparation with our comprehensive Modeling and Machine Learning Interview Learning Path to sharpen your core competencies. For a deeper dive into real success stories, explore how Jerry Khong navigated the Microsoft interview process and achieved his career goals. Ready to take the next step? Schedule a mock interview or subscribe to receive weekly machine learning question sets designed to boost your confidence and performance. For broader preparation, check out our related guides for Microsoft Data Scientist and Microsoft Software Engineer roles to round out your skills.