Capital One Data Engineer Interview Guide | Process, Questions, Tips

Introduction

Capital One is a leading financial services company recognized for its innovative use of technology and data to transform banking. With a cloud-first approach and a deep investment in modern data infrastructure, Capital One has become a pioneer in using data to drive everything from customer personalization to fraud prevention. As a Data Engineer here, you’ll be part of a dynamic team that designs and builds scalable data pipelines, ensures the reliability of ETL processes, and supports downstream analytics and machine learning systems. If you’re searching for an opportunity where your data engineering skills can directly shape real-time financial experiences for millions of users, this guide to the Capital One data engineer interview process will help you prepare with confidence.

Role Overview & Culture

At Capital One, Data Engineers are critical to enabling a data-driven culture across the company. You’ll work on cloud-native infrastructure (primarily AWS), develop real-time and batch data pipelines, and collaborate with analysts, data scientists, and product teams to ensure data is accurate, accessible, and actionable. The team is empowered to use tools like Apache Spark, Airflow, and Snowflake, and there’s a strong emphasis on automation, testing, and CI/CD best practices.

The engineering culture is deeply collaborative and agile. Teams encourage experimentation, learning, and cross-functional teamwork. Engineers are expected to think creatively and own their solutions end-to-end. Capital One fosters a high-trust environment where feedback is frequent, mentorship is available, and continuous improvement is part of the daily workflow. This culture directly supports the work of data engineers, who are often the glue connecting raw data to business insights and customer-facing applications.

Why This Role at Capital One?

There are several reasons why Capital One stands out for aspiring data engineers. First, the scale and variety of data is immense, ranging from transaction and credit data to real-time mobile interactions, providing rich opportunities to solve complex, high-impact problems. Second, Capital One’s continued investment in AI and machine learning creates a strong demand for robust, well-structured data pipelines, giving engineers a vital role in enabling advanced analytics. And finally, the company supports career growth through a mix of formal training, internal mobility, and exposure to cutting-edge tools and frameworks.

In the next sections, we’ll break down what to expect in the Capital One data engineer interview and how to stand out.

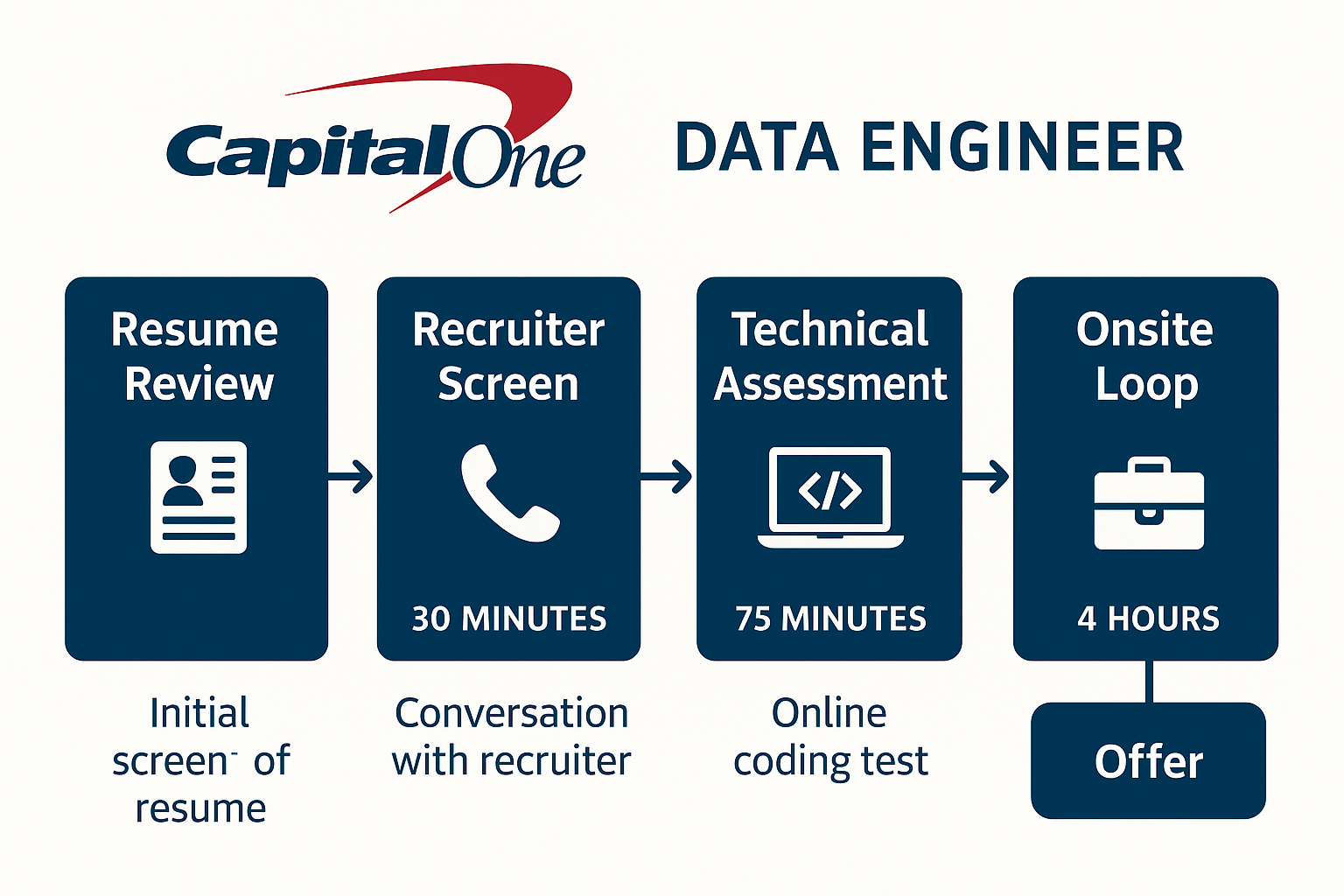

What Is the Interview Process Like for a Data Engineer Role at Capital One?

The Capital One data engineer interview process is known for being well-structured, transparent, and focused on both technical expertise and cultural fit. Whether you’re a recent grad or a seasoned engineer, the hiring process is designed to assess your ability to work in a cloud-native, fast-paced data environment. From SQL challenges to system design interviews, each step evaluates how well you can translate raw data into scalable, reliable pipelines that support analytics and decision-making across the organization. Here’s what you can expect at each stage of the journey.

Application & recruiter phone screen

The process typically begins with submitting your application through the Capital One careers portal or via a recruiter on LinkedIn. If your experience aligns with the role, a recruiter will reach out for a brief phone screen, usually lasting 20–30 minutes. During this call, you’ll discuss your background, the role’s expectations, and your interest in Capital One. The recruiter may ask about your familiarity with cloud tools (especially AWS), your experience with data pipelines, and high-level questions about past projects. This stage is also a chance for you to ask questions about team structure, tech stack, and the timeline of the process.

Online technical assessment (SQL, Python, or Spark)

If you pass the recruiter screen, you’ll receive a take-home or online coding assessment. The test usually covers SQL (joins, window functions, CTEs), Python (data manipulation using pandas or basic scripting), and sometimes Spark or PySpark for mid-to-senior roles. Candidates often describe the difficulty level as moderate to challenging, with some problems requiring multi-step logic and data transformation tasks. Completing this assessment within the time limit (typically 60–90 minutes) demonstrates your readiness to handle Capital One’s real-world data challenges.

Virtual onsite interviews

During the virtual onsite of the Capital One data engineer interview, candidates typically participate in three to four sessions scheduled over half a day. One of the key components is the SQL or coding interview, which emphasizes writing clean, efficient queries to solve moderately complex problems. You can expect questions involving multiple table joins, window functions, and aggregations, often in a live coding environment using a collaborative IDE.

Another critical part of the onsite is the system design interview. Here, candidates are asked to architect data pipelines or platforms to address specific business scenarios, such as ingesting streaming data, ensuring real-time data quality, or building fault-tolerant ETL workflows. Interviewers look for thoughtful decisions regarding tool selection (e.g., Kafka, Airflow, Redshift), scalability, and data integrity.

The behavioral interview focuses on how you’ve handled challenges in past roles. Using the STAR method to structure your responses is recommended. Questions often explore your experience resolving data quality issues, collaborating across teams, or working under tight deadlines. Capital One places strong emphasis on clear communication, initiative, and alignment with its core principles, making this session just as important as the technical ones.

Debrief and decision by the hiring team

After your virtual onsite interviews, each interviewer submits feedback using Capital One’s structured evaluation rubric, typically within 24 to 48 hours. The hiring team then meets to review all assessments and calibrate scores across candidates. Feedback is often categorized into buckets such as “Strong Hire,” “Hire,” “Lean Hire,” “No Hire,” and “Strong No Hire.” While a “Strong Hire” across multiple rounds puts you in a favorable position, even a mix of “Hire” and “Lean Hire” ratings can still result in an offer, especially if you showed clear growth potential and strong alignment with Capital One’s culture.

That said, a single weaker round doesn’t automatically disqualify you—hiring managers look for consistency and overall trajectory. For example, if you received a “Lean Hire” in the system design round but “Hire” or “Strong Hire” in coding and behavioral interviews, the team may still view your performance positively. Recruiters generally don’t disclose exact ratings after the interview, but if you’re a borderline candidate, they may indicate that you’re “under consideration” or offer brief context. Final decisions are often shared within a week, and while Capital One is known for being courteous and responsive, detailed feedback isn’t always provided.

Behind the Scenes

Capital One’s interview process is structured and thoughtfully designed to reduce bias. Interviewers are trained to complete their feedback forms immediately after each session while the conversation is still fresh, which helps ensure more accurate and objective evaluations. The debrief process focuses not only on technical correctness but also on how well candidates communicate, collaborate, and problem-solve under pressure. This reflects the company’s emphasis on building well-rounded teams.

Additionally, the company values alignment with its mission and principles, so interviewers often consider behavioral traits like curiosity, ownership, and a growth mindset. Candidates frequently mention that the process moves at a reasonable pace, with most hearing back within a few days. While it’s not common to receive detailed post-interview feedback, you can expect a clear yes or no, and in some cases, a chance to reapply in the future if you were close to making the cut.

Differences by Level

The Capital One data engineer interview process differs notably depending on the level of the role. For junior candidates or early-career applicants, the focus is primarily on foundational skills, such as writing efficient SQL queries, understanding basic ETL concepts, and demonstrating proficiency in scripting languages like Python. System design questions at this level tend to be more guided and focus on designing small-scale batch data workflows rather than complex architectures. Interviewers are often evaluating a candidate’s learning potential, clarity of thought, and grasp of core data engineering principles.

In contrast, interviews for mid-level or senior data engineer roles are more rigorous and expansive. Candidates are expected to demonstrate deep technical knowledge and architectural thinking. For example, they may be asked to design end-to-end data platforms, explain how to scale real-time streaming systems, or reason through performance trade-offs in distributed data storage. There’s often a stronger emphasis on cloud infrastructure, data governance, and the ability to mentor junior engineers. Communication with cross-functional teams, stakeholder alignment, and leadership in technical decision-making are also evaluated more closely at this level. Overall, while junior roles focus on execution and potential, senior roles assess strategic thinking and impact at scale.

What Questions Are Asked in a Capital One Data Engineer Interview?

Before stepping into your Capital One data engineer interview, it is important to understand the types of questions you might face—from technical SQL challenges to system design problems and behavioral scenarios that test your communication and judgment.

Coding / Technical Questions

Technical interviews at Capital One place a strong emphasis on your ability to manipulate and transform data efficiently using SQL, Python, and cloud tools. In one round of the Capital One data engineer interview, you might be asked to write a SQL query that calculates rolling averages with window functions or to build an ETL function using Python. These questions test not just syntax familiarity, but your ability to think critically, handle edge cases, and structure logic in a way that supports real-world business decisions. You’re expected to explain your approach clearly, justify your assumptions, and, where possible, test your solution using dummy data or examples. This is a key round where solid fundamentals and clear communication can truly set you apart.
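As a minimal sketch of the rolling-average pattern mentioned above, the snippet below runs the SQL against an in-memory SQLite database from Python. The `daily_spend` table and its values are hypothetical, and window functions require SQLite 3.25 or newer.

```python
import sqlite3

# Hypothetical daily spend table, one row per day.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE daily_spend (day TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO daily_spend VALUES (?, ?)",
    [("2024-01-01", 10.0), ("2024-01-02", 20.0), ("2024-01-03", 30.0), ("2024-01-04", 40.0)],
)

# Rolling 3-row average: the current row plus the two rows before it, ordered by day.
ROLLING_AVG_SQL = """
SELECT
    day,
    amount,
    AVG(amount) OVER (
        ORDER BY day
        ROWS BETWEEN 2 PRECEDING AND CURRENT ROW
    ) AS rolling_avg_3
FROM daily_spend
ORDER BY day
"""

rows = conn.execute(ROLLING_AVG_SQL).fetchall()
for row in rows:
    print(row)
```

Because the frame is defined in rows, a missing day would silently stretch the window. If the data can have gaps, join against a calendar table first, or use a date-based RANGE frame where the engine supports it.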

1. Select the top 3 departments by average salary among departments with at least ten employees

Start by grouping employee records by department and keeping only departments with at least ten employees (HAVING COUNT(*) >= 10). Use the AVG() aggregate function to compute average salaries, then sort the results by average salary in descending order and apply LIMIT 3. This tests your SQL aggregation, filtering, and sorting skills, which are key for data engineering roles.
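A minimal sketch of this query, run through Python's built-in `sqlite3` module. It assumes a single hypothetical `employees` table that already carries a `department` column; if departments live in a separate table, join to it before grouping.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (id INTEGER PRIMARY KEY, department TEXT, salary REAL)")

# Hypothetical data: (department, headcount, lowest salary in $k); salaries step up by 1 per person.
rows = []
for dept, headcount, base in [("eng", 12, 150), ("data", 10, 140), ("ops", 15, 90), ("legal", 5, 200)]:
    rows.extend((dept, base + i) for i in range(headcount))
conn.executemany("INSERT INTO employees (department, salary) VALUES (?, ?)", rows)

TOP_DEPTS_SQL = """
SELECT department, AVG(salary) AS avg_salary, COUNT(*) AS headcount
FROM employees
GROUP BY department
HAVING COUNT(*) >= 10   -- drop departments with fewer than ten employees
ORDER BY avg_salary DESC
LIMIT 3
"""
result = conn.execute(TOP_DEPTS_SQL).fetchall()
print(result)
```

Note that "legal" has the highest average salary in this sample but only five employees, so the HAVING clause removes it before sorting. That filter-before-rank order is the edge case interviewers usually probe.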

2. Calculate the first touch attribution channel per user and count conversions by channel

Use ROW_NUMBER() or MIN() to identify the first interaction per user. Join with conversion data and group by the first channel to count conversions. Handle nulls or users without conversions appropriately. Attribution modeling is critical in product analytics and marketing data pipelines.
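The sketch below assumes a hypothetical `sessions` table with one row per user interaction (`user_id`, `session_ts`, `channel`, `converted`). It ranks each user's sessions with ROW_NUMBER(), keeps the earliest one, and counts converting users by that first-touch channel.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE sessions (user_id TEXT, session_ts TEXT, channel TEXT, converted INTEGER)"
)
conn.executemany(
    "INSERT INTO sessions VALUES (?, ?, ?, ?)",
    [
        ("u1", "2024-01-01 09:00:00", "email", 0),
        ("u1", "2024-01-03 09:00:00", "search", 1),
        ("u2", "2024-01-02 09:00:00", "search", 0),
        ("u2", "2024-01-05 09:00:00", "social", 1),
        ("u3", "2024-01-01 10:00:00", "email", 0),  # never converts
        ("u4", "2024-01-04 11:00:00", "email", 1),
    ],
)

FIRST_TOUCH_SQL = """
WITH ranked AS (
    SELECT
        user_id,
        channel,
        ROW_NUMBER() OVER (PARTITION BY user_id ORDER BY session_ts) AS rn
    FROM sessions
),
converters AS (
    SELECT DISTINCT user_id FROM sessions WHERE converted = 1
)
SELECT r.channel AS first_touch_channel, COUNT(*) AS conversions
FROM ranked AS r
JOIN converters AS c ON c.user_id = r.user_id
WHERE r.rn = 1              -- keep only each user's first interaction
GROUP BY r.channel
ORDER BY conversions DESC, first_touch_channel
"""
result = conn.execute(FIRST_TOUCH_SQL).fetchall()
print(result)
```

If two sessions share the same timestamp, ROW_NUMBER() picks one arbitrarily, so in practice you would add a tiebreaker (such as a session ID) to the ORDER BY.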

3. Write a query to count users who made additional purchases in the same category

Self-join transaction data to find follow-up purchases in the same category. Use COUNT(DISTINCT user_id) to get the number of unique users with upsell activity. Be mindful of date logic and filtering conditions. This evaluates your ability to track customer lifecycle and behaviors.
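A possible version of the self-join, assuming a hypothetical `transactions` table. A user counts toward the result if any purchase is followed by a strictly later purchase in the same category.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE transactions (id INTEGER, user_id TEXT, category TEXT, purchased_at TEXT)"
)
conn.executemany(
    "INSERT INTO transactions VALUES (?, ?, ?, ?)",
    [
        (1, "u1", "books", "2024-01-01 10:00:00"),
        (2, "u1", "books", "2024-01-05 10:00:00"),  # repeat purchase in the same category
        (3, "u2", "books", "2024-01-01 10:00:00"),
        (4, "u2", "toys", "2024-01-02 10:00:00"),   # different category: not counted
        (5, "u3", "toys", "2024-01-03 10:00:00"),
        (6, "u3", "toys", "2024-01-10 10:00:00"),
        (7, "u4", "games", "2024-01-01 10:00:00"),
    ],
)

UPSELL_SQL = """
SELECT COUNT(DISTINCT t1.user_id) AS upsell_users
FROM transactions AS t1
JOIN transactions AS t2
  ON  t2.user_id = t1.user_id
  AND t2.category = t1.category
  AND t2.purchased_at > t1.purchased_at  -- a strictly later purchase
"""
(upsell_users,) = conn.execute(UPSELL_SQL).fetchone()
print(upsell_users)
```

The strict `>` means two purchases with the same timestamp do not count as an upsell; whether they should is a good clarifying question to raise with the interviewer.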

4. Write a SQL query to calculate the average number of swipes per user per week

Parse timestamps to weekly buckets and group by user and week. Then use nested queries or window functions to get per-user swipe averages. Handle users with gaps in weekly activity. Time series and behavioral metric calculations are essential in user engagement pipelines.
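One way to write this in SQLite, assuming a hypothetical `swipes` table with text timestamps. Each swipe is mapped to the Monday that starts its week, which avoids the year-boundary splits you can get from week-number formats.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE swipes (user_id TEXT, swiped_at TEXT)")
conn.executemany(
    "INSERT INTO swipes VALUES (?, ?)",
    [
        ("u1", "2024-01-01 09:00:00"), ("u1", "2024-01-02 10:00:00"),
        ("u1", "2024-01-03 11:00:00"), ("u1", "2024-01-08 12:00:00"),
        ("u2", "2024-01-09 08:00:00"), ("u2", "2024-01-10 08:00:00"),
        ("u2", "2024-01-11 08:00:00"), ("u2", "2024-01-14 08:00:00"),
    ],
)

AVG_SWIPES_SQL = """
WITH weekly AS (
    SELECT
        user_id,
        -- Step back 6 days, then advance to the next Monday (or stay if already Monday):
        -- the result is the Monday that starts this swipe's week.
        date(swiped_at, '-6 days', 'weekday 1') AS week_start,
        COUNT(*) AS swipe_count
    FROM swipes
    GROUP BY user_id, week_start
)
SELECT user_id, AVG(swipe_count) AS avg_swipes_per_week
FROM weekly
GROUP BY user_id
ORDER BY user_id
"""
result = conn.execute(AVG_SWIPES_SQL).fetchall()
print(result)
```

This averages over active weeks only. To count inactive weeks as zero, you could generate a calendar of weeks per user and LEFT JOIN the weekly counts onto it before averaging.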

5. Write a function to group sequential timestamps into weekly buckets starting from the first one

In Python, iterate over a sorted list of timestamps and assign each one to a fixed 7-day bucket: the first bucket starts at the first timestamp, and each subsequent bucket starts seven days after the previous one. Use datetime.timedelta for window control. The output should be a list of sublists, one per week. This type of logic is common in log processing and usage aggregation tasks.
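A minimal Python sketch of the bucketing function. The function name is hypothetical, and it assumes that weeks containing no timestamps are skipped rather than returned as empty lists.

```python
from datetime import datetime, timedelta
from typing import List


def group_into_weeks(timestamps: List[datetime]) -> List[List[datetime]]:
    """Group timestamps into consecutive 7-day buckets anchored at the earliest timestamp.

    Weeks that contain no timestamps are skipped rather than returned as empty lists.
    """
    if not timestamps:
        return []
    ordered = sorted(timestamps)  # defensive: the bucketing below relies on sorted input
    start = ordered[0]
    week = timedelta(days=7)
    buckets: List[List[datetime]] = []
    current_week = -1
    for ts in ordered:
        week_index = (ts - start) // week  # timedelta // timedelta gives an int
        if week_index != current_week:
            buckets.append([])
            current_week = week_index
        buckets[-1].append(ts)
    return buckets


days = [datetime(2024, 1, d) for d in (1, 3, 8, 20, 29)]
print([[ts.day for ts in bucket] for bucket in group_into_weeks(days)])
```

Here January 29 falls in week index 4 relative to January 1, so the empty week 3 is skipped. If the interviewer wants empty weeks preserved, append empty lists for the missing indexes instead.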

System / Product Design Questions

The system design portion of the Capital One data engineer interview evaluates your ability to think like a systems architect. You’ll be challenged to build or improve scalable data pipelines, often within the context of real business scenarios such as detecting fraud in near-real time or ingesting user behavior logs efficiently across services. You might also be asked to design an end-to-end system that supports both batch and streaming data flows, all while balancing cost, latency, and reliability. Your response should begin at a high level (e.g., business goals and data sources), then progressively dive into the architecture, covering ingestion tools like Kafka, orchestration tools like Airflow, storage options, partitioning strategies, and monitoring. This session highlights your ability to build for scale and change.

6. Design a classifier to predict the optimal moment for a commercial break

Begin by framing the problem as a binary classification task based on video stream features. Consider input signals like engagement metrics, scene changes, or pauses. Discuss feature engineering, model selection, and deployment in real-time environments. This is relevant for data engineers who must support real-time prediction systems with robust data pipelines.

7. Describe the process of building a restaurant recommender system

Break down the approach into data collection, user modeling, and recommendation generation. Highlight the use of collaborative filtering vs. content-based techniques. Discuss system scaling, cold start problems, and feedback loops. This type of design helps test your understanding of data architecture and ML-driven user personalization.
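To make the collaborative-filtering idea concrete, here is a small item-based sketch in plain Python. The ratings and restaurant names are invented, and cosine similarity between item rating vectors is just one common choice; at scale, teams often move to matrix factorization or learned embeddings.

```python
import math
from collections import defaultdict
from typing import Dict, List

Ratings = Dict[str, Dict[str, float]]

# Hypothetical user -> {restaurant: rating} data.
ratings: Ratings = {
    "ana": {"taco_spot": 5, "sushi_bar": 4, "pizza_place": 1},
    "ben": {"taco_spot": 4, "sushi_bar": 5},
    "cam": {"pizza_place": 5, "burger_joint": 4},
    "dee": {"taco_spot": 5, "sushi_bar": 5, "ramen_house": 4},
}


def item_vectors(ratings: Ratings) -> Ratings:
    """Pivot user->item ratings into item->user rating vectors."""
    vectors: Dict[str, Dict[str, float]] = defaultdict(dict)
    for user, items in ratings.items():
        for item, rating in items.items():
            vectors[item][user] = rating
    return dict(vectors)


def cosine(a: Dict[str, float], b: Dict[str, float]) -> float:
    """Cosine similarity between two sparse vectors keyed by user."""
    dot = sum(a[u] * b[u] for u in a.keys() & b.keys())
    if dot == 0:
        return 0.0
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b)


def recommend(user: str, ratings: Ratings, k: int = 2) -> List[str]:
    """Score each unseen restaurant by similarity-weighted ratings of restaurants the user rated."""
    vectors = item_vectors(ratings)
    seen = ratings.get(user, {})
    scores = {
        candidate: sum(cosine(vec, vectors[item]) * rating for item, rating in seen.items())
        for candidate, vec in vectors.items()
        if candidate not in seen
    }
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [name for name, _ in ranked[:k]]


print(recommend("ben", ratings))
```

A brand-new user has no ratings, so every score is zero; that is exactly the cold start case where a popularity or content-based fallback would take over.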

8. Design a recommendation algorithm for Netflix’s type-ahead search

Emphasize latency, relevance, and ranking in the design of the search suggestion engine. Consider prefix trees (tries) or search indexes combined with real-time click feedback, and make sure the design scales across large catalogs. This question touches on search relevance systems, which rely on scalable logging and ingestion workflows to capture that click feedback.
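As a sketch of the trie approach, the class below caches the top-k titles (by a hypothetical popularity score, such as recent clicks) at every prefix node, so a lookup costs only the length of the typed prefix. Class names and scores are illustrative, and updating scores from live click feedback is not handled in this sketch.

```python
import heapq
from typing import Dict, List, Tuple


class TrieNode:
    def __init__(self) -> None:
        self.children: Dict[str, "TrieNode"] = {}
        self.top: List[Tuple[int, str]] = []  # cached (score, title), highest first


class TypeAheadIndex:
    """Prefix trie that caches the k highest-scoring titles at every node."""

    def __init__(self, k: int = 3) -> None:
        self.root = TrieNode()
        self.k = k

    def insert(self, title: str, score: int) -> None:
        node = self.root
        for ch in title.lower():
            node = node.children.setdefault(ch, TrieNode())
            # Keep only the k best candidates for this prefix.
            node.top = heapq.nlargest(self.k, node.top + [(score, title)])

    def suggest(self, prefix: str) -> List[str]:
        node = self.root
        for ch in prefix.lower():
            child = node.children.get(ch)
            if child is None:
                return []
            node = child
        return [title for _, title in node.top]


index = TypeAheadIndex(k=3)
for title, score in [("Stranger Things", 95), ("Star Trek", 80), ("Stargate", 60),
                     ("Star Wars", 90), ("Succession", 70)]:
    index.insert(title, score)
print(index.suggest("st"))    # ['Stranger Things', 'Star Wars', 'Star Trek']
print(index.suggest("star"))  # ['Star Wars', 'Star Trek', 'Stargate']
```

Caching results per node trades memory for predictable read latency, which is usually the right trade for type-ahead, where reads vastly outnumber catalog updates.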

9. Design a schema to represent client click data across devices

Think through table design that captures timestamps, device types, and user sessions efficiently. Normalize data where possible, while optimizing for common analytics queries. Partitioning and indexing strategies should be discussed. This is crucial for tracking user behavior in fraud detection and monitoring pipelines.
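One possible normalized layout, sketched as SQLite DDL from Python: devices map to (possibly anonymous) users, sessions belong to devices, and click events belong to sessions. Table and column names are hypothetical. `event_date` stands in for the partition column you would use in a warehouse; Redshift and Snowflake largely rely on sort keys or clustering rather than conventional indexes, so the index here only illustrates the access pattern.

```python
import sqlite3

SCHEMA = """
CREATE TABLE devices (
    device_id   TEXT PRIMARY KEY,
    user_id     TEXT,  -- nullable: a device may be seen before the user logs in
    device_type TEXT NOT NULL CHECK (device_type IN ('ios', 'android', 'web'))
);
CREATE TABLE sessions (
    session_id TEXT PRIMARY KEY,
    device_id  TEXT NOT NULL REFERENCES devices (device_id),
    started_at TEXT NOT NULL
);
CREATE TABLE click_events (
    event_id   TEXT PRIMARY KEY,
    session_id TEXT NOT NULL REFERENCES sessions (session_id),
    event_ts   TEXT NOT NULL,
    event_date TEXT NOT NULL,  -- would be the partition column in a warehouse
    page       TEXT,
    element    TEXT
);
CREATE INDEX idx_click_events_date_session ON click_events (event_date, session_id);
"""

conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.executemany("INSERT INTO devices VALUES (?, ?, ?)",
                 [("d1", "u1", "ios"), ("d2", "u1", "web"), ("d3", "u2", "android")])
conn.executemany("INSERT INTO sessions VALUES (?, ?, ?)",
                 [("s1", "d1", "2024-01-01 09:00:00"), ("s2", "d2", "2024-01-01 12:00:00"),
                  ("s3", "d3", "2024-01-01 18:00:00")])
conn.executemany("INSERT INTO click_events VALUES (?, ?, ?, ?, ?, ?)", [
    ("e1", "s1", "2024-01-01 09:01:00", "2024-01-01", "home", "offer_banner"),
    ("e2", "s1", "2024-01-01 09:02:00", "2024-01-01", "offers", "apply_button"),
    ("e3", "s2", "2024-01-01 12:05:00", "2024-01-01", "home", "login"),
    ("e4", "s3", "2024-01-01 18:10:00", "2024-01-01", "rewards", "redeem"),
])

# Typical analytics query: clicks per user and device type for one day.
CLICKS_BY_DEVICE_SQL = """
SELECT d.user_id, d.device_type, COUNT(*) AS clicks
FROM click_events AS c
JOIN sessions AS s ON s.session_id = c.session_id
JOIN devices AS d ON d.device_id = s.device_id
WHERE c.event_date = ?
GROUP BY d.user_id, d.device_type
ORDER BY d.user_id, d.device_type
"""
result = conn.execute(CLICKS_BY_DEVICE_SQL, ("2024-01-01",)).fetchall()
print(result)
```

Keeping `user_id` on the device rather than on every click lets a later login retroactively link earlier anonymous activity, at the cost of an extra join in cross-device queries.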

10. Add a column with data to a billion-row table without significant downtime

Discuss versioned schemas, shadow tables, or columnar databases as ways to reduce risk and latency. Explore techniques such as adding the column as nullable (a metadata-only change in many databases), backfilling asynchronously in batches, or applying lazy updates. Consider the impact on read/write performance and data consistency. This problem tests how you optimize batch operations for scale and cost, which is critical in enterprise-grade data systems.
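A minimal sketch of the add-then-backfill approach using SQLite: add the column as nullable, then backfill it in primary-key ranges with a commit per batch so no single transaction holds locks for long. The table and column names are hypothetical, and a real migration would also need application writes to populate the new column while the backfill runs.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE accounts (id INTEGER PRIMARY KEY, balance_cents INTEGER NOT NULL)")
conn.executemany(
    "INSERT INTO accounts (id, balance_cents) VALUES (?, ?)",
    [(i, i * 100) for i in range(1, 10_001)],  # small stand-in for the billion-row table
)
conn.commit()

# Step 1: add the column as nullable with no default, so existing rows are not rewritten up front.
conn.execute("ALTER TABLE accounts ADD COLUMN balance_dollars REAL")


def backfill(conn: sqlite3.Connection, batch_size: int = 1000) -> int:
    """Populate balance_dollars in primary-key ranges, committing after each batch.

    The IS NULL filter makes the job safe to stop and rerun from the start.
    """
    (max_id,) = conn.execute("SELECT COALESCE(MAX(id), 0) FROM accounts").fetchone()
    batches = 0
    for start in range(1, max_id + 1, batch_size):
        conn.execute(
            "UPDATE accounts SET balance_dollars = balance_cents / 100.0 "
            "WHERE id BETWEEN ? AND ? AND balance_dollars IS NULL",
            (start, start + batch_size - 1),
        )
        conn.commit()  # short transactions keep locks brief for concurrent readers and writers
        batches += 1
    return batches


# Step 2: run the backfill and confirm nothing was missed.
batches = backfill(conn)
remaining = conn.execute("SELECT COUNT(*) FROM accounts WHERE balance_dollars IS NULL").fetchone()[0]
print(batches, remaining)
```

Range batching assumes fairly dense primary keys; with very sparse IDs, paging by "next N rows after the last ID seen" avoids wasting iterations on empty ranges.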

Behavioral or “Culture Fit” Questions

Capital One takes cultural alignment and soft skills seriously, especially for technical roles that require cross-team collaboration. In this portion of the interview, you’ll answer situational questions that explore how you communicate under pressure, resolve conflicts, and demonstrate ownership. For example, you might be asked to recall a time you dealt with an unexpected pipeline failure or had to align priorities with a skeptical product manager. These questions are designed to understand how you work in teams, handle stress, and live up to Capital One’s values such as “Excellence” and “Do the right thing.” To succeed, use the STAR method, clearly outlining the Situation, Task, Action, and Result, and be specific about your personal impact and what you learned.

11. Tell me about a time you handled a failing pipeline under pressure

Use the STAR method to describe a specific incident where a pipeline broke—include context, actions you took to identify and fix the issue, and how you managed communication with stakeholders. Emphasize your ability to stay calm, troubleshoot effectively, and prevent recurrence. Highlight any monitoring or automation improvements implemented afterward. This demonstrates resilience and accountability, key traits at Capital One.

12. How do you prioritize data quality vs. delivery speed?

Frame your response around tradeoff analysis and stakeholder alignment. Describe a scenario where you had to balance accuracy with a deadline, and the reasoning behind your prioritization. Include any strategies for mitigating risks when leaning toward speed. This reveals your judgment and how you handle competing priorities in a high-stakes environment.

13. Describe a conflict with a data scientist or product team

Share a constructive example where there was misalignment on goals or data interpretations. Focus on how you listened, clarified needs, and reached a compromise through collaboration or data validation. Avoid blaming language; emphasize empathy and teamwork. Capital One values cross-functional communication, and this answer shows how well you navigate it.

14. How do you ensure your data pipelines are scalable and maintainable?

Talk about writing modular code, implementing CI/CD for pipeline deployments, and adding alerting and documentation. Share an example where these practices helped avoid downstream issues or onboarding challenges. Mention tradeoffs you considered between performance and maintainability. This shows you think long-term and engineer with care.

15. What steps do you take when onboarding to a new data team or project?

Explain how you ramp up: understanding existing architecture, reviewing documentation, meeting with stakeholders, and identifying quick wins. You can also mention how you handle legacy systems or undocumented codebases. Showing your adaptability and curiosity reassures the team you’ll integrate quickly and add value early.

How to Prepare for a Data Engineer Role at Capital One

Preparing for the Capital One data engineer interview means developing a balance of technical depth, clear communication, and system-level thinking. To succeed, it’s essential to not only brush up on common topics like joins and window functions but also to understand how your answers tie back to business use cases. In addition to coding practice, you should spend time learning about Capital One’s data infrastructure, cloud adoption, and product goals. Below are key strategies to help you build confidence and stand out throughout the interview process.

Study the Role & Culture

For data engineers, understanding the company’s tech ecosystem is essential. At Capital One, data engineers are expected to build systems that integrate seamlessly with real-time decision engines, scalable cloud infrastructure, and cross-functional teams that rely on data to drive product development. The company is known for its early and aggressive move to the cloud (especially AWS), its investment in building out real-time decision platforms, and its emphasis on enabling machine learning at scale. This makes it especially important for candidates to align their preparation with modern, cloud-native engineering principles.

Spend time reviewing Capital One’s tech blog, engineering talks, and open-source projects to understand the tools and frameworks they favor. Familiarity with technologies like Apache Airflow (for orchestration), Spark (for distributed data processing), Snowflake or Redshift (for cloud data warehousing), and Kafka (for stream processing) will help you connect your past experience with their environment. Beyond specific tools, it also helps to understand concepts like data observability, lineage, CI/CD for data pipelines, and data mesh principles; these are increasingly important across the industry and show that you think beyond execution to architecture and scalability. Being able to talk about not just what you’ve built, but why your approach fits within a cloud-native, ML-driven company like Capital One, can significantly elevate your interview performance.

Practice Common Question Types

When preparing for a data engineer role at Capital One, focus on how data moves, transforms, and scales rather than on modeling or statistics. While data science and data engineering roles both require SQL proficiency, data engineers are expected to think more about pipeline reliability, performance, and architectural decisions.

Essential question types for data engineers include:

- Writing advanced SQL queries that involve joins, CTEs, aggregations, and window functions

- Designing or debugging ETL/ELT pipelines for batch and streaming data

- Solving data modeling problems, such as how to normalize tables or handle slowly changing dimensions

- Addressing system design challenges, like building a real-time fraud detection pipeline or designing a scalable logging architecture

- Explaining data infrastructure trade-offs, such as choosing between Redshift and Snowflake or batch vs. stream processing
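For the slowly changing dimension item in the list above, here is a minimal sketch of Type 2 handling in plain Python: when a tracked attribute changes, the current row is expired and a new current row is appended, so history is preserved. The `CustomerDim` record and its fields are hypothetical; in a warehouse this logic is usually written as a MERGE or an UPDATE plus INSERT.

```python
from dataclasses import dataclass, replace
from datetime import date
from typing import List, Optional


@dataclass(frozen=True)
class CustomerDim:
    customer_id: str
    city: str                 # the tracked (Type 2) attribute
    valid_from: date
    valid_to: Optional[date]  # None while the row is current
    is_current: bool


def apply_scd2(dim: List[CustomerDim], customer_id: str, new_city: str, as_of: date) -> List[CustomerDim]:
    """Return a new dimension list with one incoming change applied as an SCD Type 2 update."""
    out: List[CustomerDim] = []
    needs_new_row = True
    for row in dim:
        if row.customer_id == customer_id and row.is_current:
            if row.city == new_city:
                needs_new_row = False  # nothing changed: keep the current row as-is
                out.append(row)
            else:
                out.append(replace(row, valid_to=as_of, is_current=False))  # expire old version
        else:
            out.append(row)
    if needs_new_row:
        out.append(CustomerDim(customer_id, new_city, as_of, None, True))  # new current version
    return out


dim = [CustomerDim("c1", "Richmond", date(2023, 1, 1), None, True)]
dim = apply_scd2(dim, "c1", "McLean", date(2024, 6, 1))
for row in dim:
    print(row)
```

Returning a new list instead of mutating in place keeps the function idempotent for a repeated identical change, which matters when a pipeline step is retried.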

To prepare, spend around 50% of your time on SQL and data modeling. Unlike data science interviews that might involve exploratory data analysis or hypothesis testing, your goal here is precision, scalability, and reliability. Practice writing efficient SQL and reasoning about how different schemas affect query performance. Use Interview Query, LeetCode, and other platforms for hands-on practice with business-oriented SQL problems.

Dedicate 30% of your time to system design. Many data engineer candidates underestimate this area, but it’s often a dealbreaker for mid-level and senior roles. Use resources such as data engineering YouTube channels (e.g., Data with Danny, Seattle Data Guy) or books like Designing Data-Intensive Applications by Martin Kleppmann to learn about message queues, distributed systems, data partitioning, and job orchestration tools like Airflow.

Reserve the last 20% for behavioral questions that assess your ability to prioritize, collaborate, and manage ambiguity. Data engineers frequently work with product managers, analysts, and data scientists, so expect questions about trade-offs between data quality and delivery speed, dealing with pipeline failures, or collaborating across departments.

Think Out Loud & Ask Clarifying Questions

Interviewers at Capital One value not just your technical correctness, but your ability to think like a systems-oriented engineer. This means approaching problems with clarity, structure, and an awareness of downstream impact—a hallmark of the data engineer mindset. In the interview, focus on communicating how you break down complex processes: start by clarifying the business goal, outline your assumptions, and explain how you would structure the data flow to serve both reliability and scalability.

For example, if you’re asked to write a query or build a pipeline, don’t just jump into syntax. Talk through your plan: What are the edge cases? What if the data volume spikes? How will you validate input/output? How often should the job run—and how would you monitor for failures? This shows that you’re not only technical, but pragmatic and focused on maintainability, which is crucial for real-world data engineering.

Also, be proactive in asking clarifying questions. In real life, data engineers rarely receive perfect requirements—they work through ambiguity, prioritize constraints, and often act as the translators between raw data and business value. Capital One’s culture favors this kind of thoughtful, collaborative problem-solver. For example, if an interviewer gives you a vague requirement (“build a pipeline for customer transactions”), you might ask: “Are we optimizing for latency or cost?”, “Do we need historical reprocessing?”, or “Is the downstream use case analytical or operational?”

Finally, during technical rounds, narrate your thought process out loud just as you would explain your work to a colleague or stakeholder. This helps the interviewer follow your logic, gives them opportunities to engage with you, and simulates how you would collaborate in a team setting. A strong data engineer doesn’t just write working code, they build solutions that are scalable, tested, monitored, and well-aligned with the business. Let that mindset shine through in every answer.

Brute Force, Then Optimize

One of the most common challenges in data engineering interviews is dealing with vague or underspecified technical prompts. This is intentional. It reflects the real world, where data engineers are often given only partial requirements and are expected to ask smart clarifying questions before jumping into a solution. When faced with an open-ended prompt like “Design a data pipeline for transaction data,” don’t freeze or guess; start by anchoring the problem in the business context. You can ask: “Is this pipeline batch or streaming?”, “What are the performance constraints: latency, cost, or volume?”, or “Who are the end users: analysts, ML models, or external systems?”

Once you’ve gathered just enough context to move forward, begin with a brute-force or simple solution. For example, if asked to filter invalid records and load data into a warehouse, you might describe a basic ETL pipeline using Python and a scheduler like Airflow, storing the results in S3 and querying through Redshift. Then, transition into how you’d optimize and scale: partitioning the data, applying schema evolution strategies, building retry logic, or migrating to a streaming model with Kafka and Spark if real-time needs arise.
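The brute-force pipeline described above can be sketched in plain Python: validate records, set aside the rejects, and load the valid batch with retries and exponential backoff. `FlakyWarehouse` is a hypothetical stand-in for a real warehouse loader that fails once to exercise the retry path; in production, the load step should be idempotent so a retried batch cannot be written twice.

```python
import time
from typing import Callable, Dict, List, Tuple, TypeVar

Record = Dict[str, object]
T = TypeVar("T")


def is_valid(rec: Record) -> bool:
    """A record is valid if it has a non-empty account_id and a positive numeric amount."""
    account_id = rec.get("account_id")
    amount = rec.get("amount")
    return (
        isinstance(account_id, str)
        and account_id != ""
        and isinstance(amount, (int, float))
        and not isinstance(amount, bool)
        and amount > 0
    )


def with_retries(fn: Callable[[], T], attempts: int = 3, base_delay: float = 0.01) -> T:
    """Call fn, retrying on ConnectionError with exponential backoff (base_delay, 2x, 4x, ...)."""
    for attempt in range(1, attempts + 1):
        try:
            return fn()
        except ConnectionError:
            if attempt == attempts:
                raise  # out of retries: surface the failure to the scheduler and alerting
            time.sleep(base_delay * 2 ** (attempt - 1))
    raise ValueError("attempts must be at least 1")


class FlakyWarehouse:
    """Hypothetical loader that raises ConnectionError for its first `failures` calls."""

    def __init__(self, failures: int = 1) -> None:
        self.failures_left = failures
        self.rows: List[Record] = []

    def load(self, batch: List[Record]) -> int:
        if self.failures_left > 0:
            self.failures_left -= 1
            raise ConnectionError("transient warehouse error")
        self.rows.extend(batch)
        return len(batch)


def run_pipeline(raw: List[Record], warehouse: FlakyWarehouse) -> Tuple[int, List[Record]]:
    """Validate, then load valid records with retries; return (rows loaded, rejected records)."""
    valid = [r for r in raw if is_valid(r)]
    rejected = [r for r in raw if not is_valid(r)]
    loaded = with_retries(lambda: warehouse.load(valid))
    return loaded, rejected


raw = [
    {"account_id": "a1", "amount": 10.0},
    {"account_id": "", "amount": 5},     # rejected: empty account_id
    {"account_id": "a2", "amount": -3},  # rejected: non-positive amount
    {"account_id": "a3", "amount": 7},
]
warehouse = FlakyWarehouse(failures=1)
loaded, rejected = run_pipeline(raw, warehouse)
print(loaded, len(rejected))
```

Rejected records are returned rather than dropped so they can be written to a quarantine table and monitored, which is usually the first "optimize" step after the brute-force version works.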

Interviewers want to see that you can go from messy ambiguity to concrete implementation, and then adapt that solution with engineering maturity. Capital One emphasizes structured thinking, maintainability, and cross-functional collaboration over “clever hacks.” So rather than jumping straight into code, lead with logic, communicate trade-offs, and show that your approach would hold up not just today, but as systems and scale evolve.

Mock Interviews & Feedback

Finally, one of the best ways to prepare is to simulate the real interview experience. Set up mock interviews with peers, mentors, or even through online platforms. Focus on timing, clarity, and getting feedback on your approach and communication. If possible, connect with someone who has recently gone through the Capital One interview process—they can help you understand what to expect, what topics are trending, and how to manage your time. These rehearsals build confidence and help you stay composed when the actual interview day arrives.

FAQs

What Is the Average Salary for a Data Engineer Role at Capital One?

[Charts: Average Base Salary; Average Total Compensation]

Where Can I Read More Discussion Posts on Capital One’s Data Engineering Roles?

If you’re preparing for a data engineering role at Capital One, it’s helpful to study not only the interview process but also the technical themes and tools that current and past engineers frequently discuss. Key topics to familiarize yourself with include streaming data infrastructure (e.g., Kafka, Kinesis), workflow orchestration with Airflow, data warehousing with Redshift or Snowflake, schema evolution and versioning, and real-time analytics systems. Understanding Capital One’s shift to a cloud-native architecture—primarily using AWS services like S3, Lambda, and Glue—will also give you an edge, especially in system design interviews.

Are There Job Postings for Capital One Data Engineering Roles on Interview Query?

Yes! You can browse current data engineering job postings from Capital One on the Interview Query Job Board or visit Capital One’s official careers page for the most up-to-date openings. Many roles include detailed descriptions of the tech stack, qualifications, and hiring team expectations, which can help you tailor your preparation effectively. Explore open roles and prepare using real interview insights to give yourself a strategic edge in the process.

Conclusion

The Capital One data engineer interview can be rigorous, covering everything from complex SQL queries to system design and behavioral evaluations, but with focused, structured preparation, it’s absolutely within reach. By understanding what the role demands, practicing real-world data problems, and communicating like a systems thinker, you can position yourself as a strong candidate ready to thrive in Capital One’s modern, cloud-first data environment.

If you’re looking to prepare even more thoroughly, check out our other interview guides for Capital One Software Engineers and Data Scientists to explore overlapping skill areas and company-specific prep tips. For additional support, Interview Query also offers 1:1 coaching sessions, resume reviews, and mock interviews tailored to your target role. With the right strategy and support, you’ll be ready to take the next step in your data engineering career.