Capital One Data Analyst Interview Guide: Questions, Process & Tips

Introduction

Capital One is known for its deep commitment to data—from powering customer insights to driving innovative products and smarter decision-making. As a result, the Capital One data analyst interview questions are designed to evaluate not just technical skills, but also your ability to think critically, collaborate cross-functionally, and make data meaningful for the business. If you’re aiming to break into a role where analytics directly influences millions of customer experiences, Capital One offers one of the most dynamic environments to grow as a data professional. In this guide, we’ll walk through the key elements of the role, what sets Capital One apart, and how to succeed in each stage of the interview process.

Role Overview & Culture

The Data Analyst role at Capital One sits at the core of business decision-making. Analysts are responsible for working with large, often complex datasets to extract meaningful insights. This includes writing and optimizing SQL queries, developing dashboards and data visualizations, and delivering clear reports to both technical and non-technical stakeholders. Analysts collaborate closely with teams in product, strategy, engineering, and operations to ensure data-backed decisions are made across the organization.

What makes this role stand out is Capital One’s data-forward culture. The company was an early adopter of cloud infrastructure and continues to invest heavily in advanced analytics, which means data is not just a support function—it’s foundational to how business gets done. Teams are empowered to run experiments, validate ideas with real-world customer behavior, and iterate quickly. Analysts are embedded in agile teams, working side by side with product managers, engineers, and designers, which fosters not just technical growth but a strong understanding of customer impact and product outcomes. Capital One also invests in data literacy across the organization, ensuring that everyone—from executives to interns—can engage meaningfully with data.

Why This Role at Capital One?

Capital One is a rare mix of tech-forward infrastructure and real-world financial data at scale. As a data analyst, you’ll have access to large and diverse datasets, ranging from credit card transactions to customer engagement metrics. This gives analysts a unique opportunity to work on impactful business problems and develop domain knowledge in finance, marketing, fraud detection, and more. The company also provides best-in-class tools, including AWS, Snowflake, Python, and Tableau, allowing analysts to go beyond basic reporting into predictive modeling and automation.

The role comes with a clearly defined growth path. Whether you’re aiming to become a Senior Business Analyst, Product Analyst, or move toward data science, Capital One supports that journey with mentorship, learning platforms, and rotational opportunities. For students and recent graduates, the Capital One data analyst intern program and Analyst Development Program provide an excellent foundation to explore different parts of the business while honing technical and analytical skills in a real-world setting.

Up next, we’ll break down the Capital One data analyst interview process so you can prepare effectively for every stage—from initial screening to final panel.

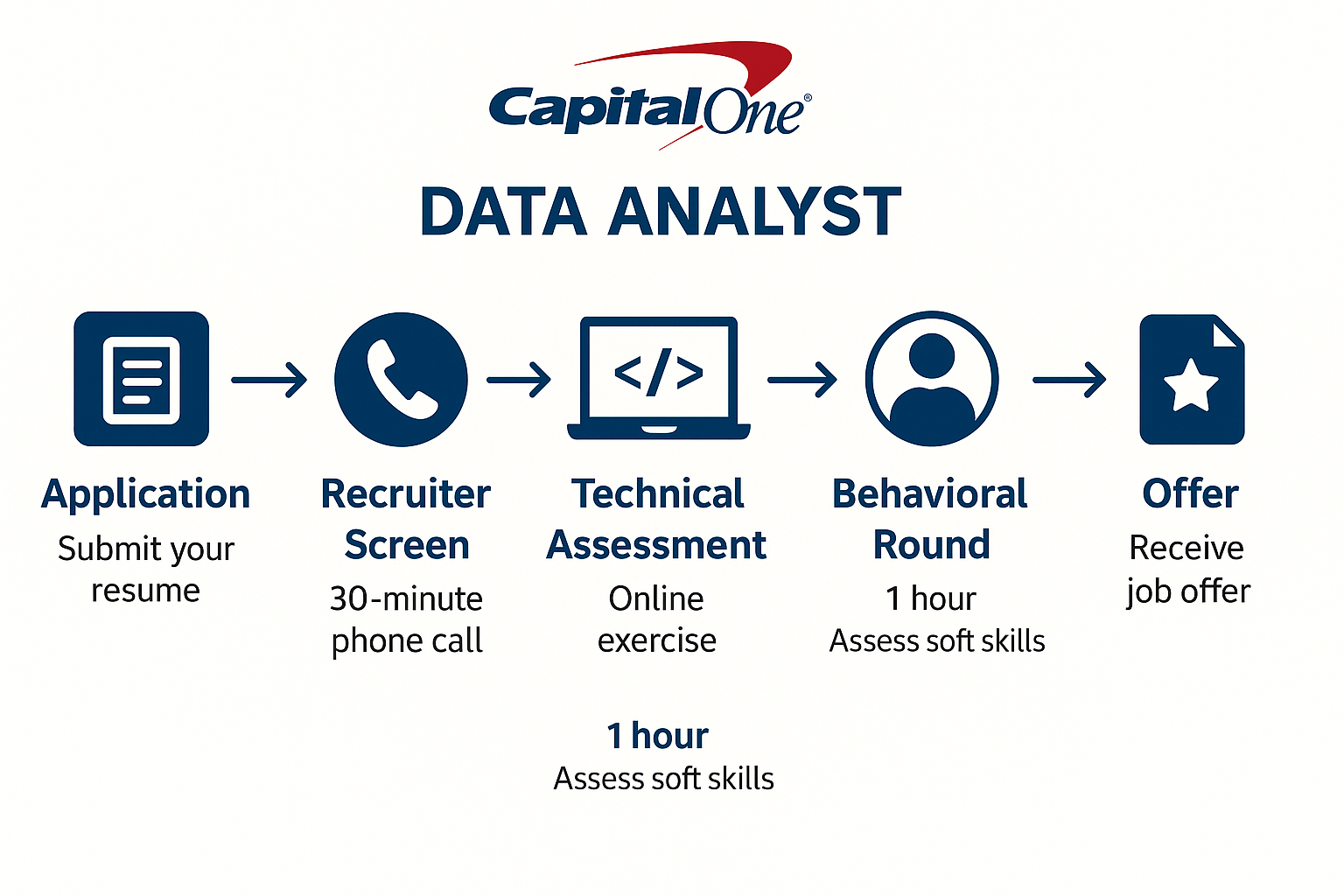

What Is the Interview Process Like for a Data Analyst Role at Capital One?

The Capital One data analyst interview process is known for being rigorous but fair, with a clear structure designed to assess both technical ability and business insight. Whether you’re applying for a full-time role or the Capital One data analyst intern program, the process typically unfolds in several distinct stages. Each step builds on the last, starting with resume screening and moving toward deeper analytical and strategic discussions. Below, we break down each phase so you know what to expect and how to prepare.

Application & Resume Review

It all begins with submitting your application through the Capital One careers portal or a campus recruiting event. Recruiters look for candidates who demonstrate strong analytical capabilities, particularly in SQL and Python or R, along with experience working with real-world datasets. Resumes that highlight clear impact, such as improving efficiency, reducing costs, or supporting product decisions, tend to stand out. Candidates who can show evidence of collaboration, business context, and strong communication skills are more likely to move forward. For intern candidates, academic projects and case competitions can carry significant weight.

Recruiter Call

If your resume is selected, you’ll be invited to a brief phone call with a recruiter, usually lasting 15 to 30 minutes. This conversation is focused on your background, interest in Capital One, and your familiarity with basic data analysis tools. The recruiter may ask why you want to work at Capital One, what types of data projects you’ve handled, and how comfortable you are with SQL or Python. This is also your chance to ask about the role, team structure, and hiring timeline. If you’re applying through the Capital One data analyst intern track, the recruiter might walk you through the internship program’s structure, timeline, and expectations.

Online SQL/Coding Assessment

Once you pass the recruiter screen, you’ll receive a link to complete an online assessment, often hosted on platforms like CodeSignal or HackerRank. This timed test focuses primarily on SQL, covering everything from basic joins and filtering to more advanced concepts like window functions and subqueries. Some versions may include simple Python coding or data interpretation problems, but SQL is consistently the main focus. Many candidates report that questions reflect real-world scenarios, such as analyzing sales trends or customer behavior using transactional data. While partial credit may be awarded, clean and efficient code tends to score higher.

Behavioral & Case Interviews

Candidates who perform well on the assessment are invited to one or two interviews that blend behavioral and case-based questions. These are typically held over video calls and led by analysts or managers from the team you’re applying to. Behavioral questions explore your experience working in teams, handling ambiguity, and communicating data insights. For instance, you might be asked about a time you had to explain technical findings to a non-technical audience, or how you approached a project with unclear goals.

The case portion often involves a business problem that requires data-driven thinking. You might be given a scenario like, “A new credit card feature was launched—how would you measure its success?” or “What metrics would you use to analyze customer churn?” You won’t always have to write code during these rounds, but you’ll need to talk through how you would structure your analysis, what data you’d need, and how you’d present your findings to stakeholders.

Final Panel Interview

The final round of the Capital One data analyst interview is typically a panel interview involving multiple stakeholders from different parts of the team. This round usually lasts 60 to 90 minutes, depending on the role level and the number of interviewers involved. The panel often includes a mix of senior analysts, team leads, managers, and occasionally product or business partners, especially if the role is cross-functional.

The format is structured but conversational, and may be broken into segments. For most candidates, the interview begins with behavioral questions that revisit earlier themes but in greater depth—such as how you handled a high-pressure deadline, how you managed competing stakeholder interests, or how you approached a difficult data-related decision. Interviewers are looking for clarity in communication, thoughtful prioritization, and examples of ownership or impact.

In the second half, you might receive a live case problem or scenario. Rather than coding, this is usually a whiteboard- or discussion-style walkthrough of a business problem. For example, you might be asked, “How would you assess whether a new feature increased customer engagement?” or “Which KPIs would you use to monitor the health of a credit portfolio?” You’re expected to talk through your approach, explain your logic, and describe what kind of data you’d use—highlighting both your business acumen and analytical thinking.

For more experienced candidates, including those applying for Senior Analyst or lateral transfer roles, part of the panel may involve a short presentation of a previous project. You’ll be asked to walk through your problem statement, methods, tools used (e.g., SQL, Python, Tableau), key findings, and business impact. Capital One values structure, so interviewers often appreciate a clear narrative that ties your technical work to decision-making and customer outcomes.

Overall, the final panel is designed to simulate real cross-functional collaboration—so your ability to listen, reason out loud, and articulate data-backed recommendations clearly is often just as important as your technical depth.

Behind the Scenes

Capital One’s recruiting process is built on structured rubrics that help ensure fairness and consistency. Each round has clearly defined evaluation criteria, and performance in one stage—particularly the technical assessment—can heavily influence whether you progress. For intern candidates or early-career applicants, timelines may be tied to university recruiting calendars, with many offers going out in the fall and spring through cohort-based cycles. Applying early can improve your chances of securing an interview slot.

Differences by Level

The complexity and emphasis of the interview process vary slightly depending on the role level. Junior candidates are typically assessed more on SQL, basic business intuition, and communication skills. Interviews may be more scenario-based, asking how you would use data to support a specific team or solve a straightforward problem. For senior analysts, the interviews tend to involve broader business strategy, cross-functional collaboration, and occasionally leadership-style questions. You may also be expected to discuss metrics design, stakeholder management, and the impact of your recommendations in past roles.

What Questions Are Asked in a Capital One Data Analyst Interview?

The Capital One data analyst interview includes a wide range of questions that test your technical skills, business thinking, and ability to collaborate within teams.

Coding / Technical Questions

One of the most important parts of the Capital One data analyst interview assesses your technical proficiency in SQL, data wrangling, and occasionally Python. Capital One wants to know whether you can extract meaningful insights from raw data with clarity and precision. The Capital One data analyst CodeSignal assessment emphasizes topics like joins, CTEs, and window functions. In some cases, you’ll also be asked about Excel-based cleaning tasks or basic Python usage. What matters most is not just getting the right answer but showing that you understand the business context, can write readable code, and know how to handle edge cases and real-world messiness in data.

1. Select the top 3 departments with at least ten employees and the highest average salary

Use a combination of GROUP BY, HAVING, and ORDER BY clauses in SQL to solve this. You’ll need to filter departments based on a minimum employee count and sort by the average salary. Limit the results to the top three. This question tests your aggregation and filtering logic—both critical for analyst roles.
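A minimal sketch of this pattern, run against an in-memory SQLite database from Python’s standard library so it executes end to end. The `employees` table, its columns, and the sample rows are hypothetical; the real assessment schema will differ.

```python
import sqlite3

# In-memory database with a hypothetical employees table.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE employees (id INTEGER PRIMARY KEY, department TEXT, salary INTEGER)"
)

# Toy data: 'legal' pays the most but has only 2 employees, so HAVING drops it.
data = (
    [("eng", 120_000)] * 10
    + [("ops", 90_000)] * 10
    + [("sales", 80_000)] * 12
    + [("hr", 70_000)] * 11
    + [("legal", 300_000)] * 2
)
conn.executemany("INSERT INTO employees (department, salary) VALUES (?, ?)", data)

query = """
SELECT department,
       AVG(salary) AS avg_salary,
       COUNT(*)    AS headcount
FROM employees
GROUP BY department
HAVING COUNT(*) >= 10          -- keep only departments with 10+ employees
ORDER BY avg_salary DESC       -- highest average salary first
LIMIT 3;
"""
top3 = conn.execute(query).fetchall()
print(top3)
```

The key detail is that the headcount filter sits in HAVING rather than WHERE, because it applies to groups after aggregation.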

2. Calculate the first touch attribution channel for each user

Identify each user’s first interaction channel using window functions like ROW_NUMBER(). Partition by user ID and order by event timestamp to find their first event. This question reflects real-world marketing analytics cases, which are common at Capital One.
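A minimal sketch of the ROW_NUMBER() approach using Python’s built-in `sqlite3` module (window functions need SQLite 3.25 or newer). The `events` table and its columns are hypothetical, and timestamps are stored as ISO-8601 strings so that text ordering matches chronological ordering.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (user_id INTEGER, channel TEXT, event_ts TEXT)")
conn.executemany(
    "INSERT INTO events VALUES (?, ?, ?)",
    [
        (1, "email",  "2024-01-03 10:00:00"),
        (1, "search", "2024-01-01 09:00:00"),  # user 1's first touch
        (2, "social", "2024-01-02 12:00:00"),  # user 2's first touch
        (2, "email",  "2024-01-05 08:00:00"),
    ],
)

query = """
WITH ranked AS (
    SELECT user_id,
           channel,
           ROW_NUMBER() OVER (PARTITION BY user_id ORDER BY event_ts) AS rn
    FROM events
)
SELECT user_id, channel
FROM ranked
WHERE rn = 1          -- earliest event per user
ORDER BY user_id;
"""
first_touch = conn.execute(query).fetchall()
print(first_touch)
```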

3. Write a query to count users who made additional purchases after signing up

Use JOINs or subqueries to identify users with sign-up events followed by purchases. You’ll need to filter on event type and use aggregation or window functions. This tests your ability to sequence user behaviors—an important skill in understanding product usage or churn.
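One way to express "a purchase that happens after sign-up" is a self-join on a single event log, sketched here with Python’s `sqlite3` module. The `events` table, the `'signup'`/`'purchase'` event types, and the rows are hypothetical; the toy data deliberately includes a purchase made before sign-up and a user with two qualifying purchases.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (user_id INTEGER, event_type TEXT, event_ts TEXT)")
conn.executemany(
    "INSERT INTO events VALUES (?, ?, ?)",
    [
        (1, "signup",   "2024-01-01 09:00:00"),
        (1, "purchase", "2024-01-02 10:00:00"),  # counts: purchase after signup
        (2, "signup",   "2024-01-03 08:00:00"),  # never purchased
        (3, "purchase", "2024-01-01 07:00:00"),  # purchase happened before signup
        (3, "signup",   "2024-01-05 07:00:00"),
        (4, "signup",   "2024-01-02 11:00:00"),
        (4, "purchase", "2024-01-03 12:00:00"),  # counts once, even with two purchases
        (4, "purchase", "2024-01-04 12:00:00"),
    ],
)

query = """
SELECT COUNT(DISTINCT s.user_id) AS users_with_later_purchase
FROM events AS s
JOIN events AS p
  ON p.user_id = s.user_id
 AND p.event_type = 'purchase'
 AND p.event_ts > s.event_ts      -- purchase must come after the signup
WHERE s.event_type = 'signup';
"""
(n_users,) = conn.execute(query).fetchone()
print(n_users)
```

COUNT(DISTINCT ...) matters here: without it, user 4 would be counted once per qualifying purchase.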

4. Write a SQL query to calculate the average number of swipes per user per day

Break down user activity by day and calculate averages across users. Use date formatting and grouping functions effectively. This is a good test of time-based analysis, which is relevant in digital banking product usage tracking.
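A two-step sketch: first count swipes per user per calendar day, then average those counts per day. It uses Python’s `sqlite3` module; the `swipes` table is hypothetical, and the query assumes the average should cover only users who swiped at least once that day (users with zero swipes would need a separate user-by-day table).

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE swipes (user_id INTEGER, swiped_at TEXT)")
rows = (
    [(1, "2024-01-01 08:00:00")] * 3
    + [(2, "2024-01-01 09:30:00")] * 1
    + [(1, "2024-01-02 10:00:00")] * 2
    + [(2, "2024-01-02 11:00:00")] * 4
)
conn.executemany("INSERT INTO swipes VALUES (?, ?)", rows)

query = """
WITH daily AS (                     -- step 1: swipes per user per calendar day
    SELECT user_id,
           DATE(swiped_at) AS swipe_date,
           COUNT(*)        AS n_swipes
    FROM swipes
    GROUP BY user_id, DATE(swiped_at)
)
SELECT swipe_date,                  -- step 2: average across users for each day
       AVG(n_swipes) AS avg_swipes_per_user
FROM daily
GROUP BY swipe_date
ORDER BY swipe_date;
"""
result = conn.execute(query).fetchall()
print(result)
```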

5. Write a query to retrieve the latest salary for each employee

Use window functions like RANK() or ROW_NUMBER() to rank salary records for each employee and extract the most recent one. Be mindful of duplicate timestamps or edge cases. It simulates data-cleaning scenarios often faced during ETL audits.
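A sketch using ROW_NUMBER() with an explicit tie-breaker, run through Python’s `sqlite3` module (SQLite 3.25+ for window functions). The `salaries` table is hypothetical, and treating the higher `id` as the most recent record when two rows share a date is an assumption worth stating aloud in an interview. With RANK() instead, both tied rows would come back with rank 1.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE salaries (id INTEGER PRIMARY KEY, employee_id INTEGER, "
    "salary INTEGER, effective_date TEXT)"
)
conn.executemany(
    "INSERT INTO salaries (id, employee_id, salary, effective_date) VALUES (?, ?, ?, ?)",
    [
        (1, 1, 50_000, "2023-01-01"),
        (2, 1, 55_000, "2024-01-01"),  # employee 1's latest
        (3, 2, 60_000, "2024-03-01"),
        (4, 2, 62_000, "2024-03-01"),  # same date as id 3; higher id wins the tie
    ],
)

query = """
WITH ranked AS (
    SELECT employee_id,
           salary,
           ROW_NUMBER() OVER (
               PARTITION BY employee_id
               ORDER BY effective_date DESC, id DESC  -- id breaks date ties
           ) AS rn
    FROM salaries
)
SELECT employee_id, salary
FROM ranked
WHERE rn = 1
ORDER BY employee_id;
"""
latest = conn.execute(query).fetchall()
print(latest)
```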

6. Write a query to find the third purchase of every customer

Leverage ROW_NUMBER() over a partition by user and order by purchase time to filter the third event. Make sure to only return users who actually have at least three purchases. It evaluates your skills with event sequencing and temporal logic.
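A minimal sketch with Python’s `sqlite3` module (SQLite 3.25+). The `purchases` table and rows are hypothetical. Filtering on `purchase_number = 3` automatically excludes users with fewer than three purchases, because their partitions never reach that row number.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE purchases (id INTEGER PRIMARY KEY, user_id INTEGER, "
    "purchased_at TEXT, amount INTEGER)"
)
conn.executemany(
    "INSERT INTO purchases VALUES (?, ?, ?, ?)",
    [
        (1, 1, "2024-01-01", 10), (2, 1, "2024-01-02", 20), (3, 1, "2024-01-03", 30),
        (4, 2, "2024-01-01", 5),  (5, 2, "2024-01-04", 7),   # only two purchases
        (6, 3, "2024-02-01", 1),  (7, 3, "2024-02-02", 2),
        (8, 3, "2024-02-03", 3),  (9, 3, "2024-02-04", 4),
    ],
)

query = """
WITH ordered AS (
    SELECT id, user_id, purchased_at, amount,
           ROW_NUMBER() OVER (
               PARTITION BY user_id
               ORDER BY purchased_at, id      -- id keeps same-time purchases deterministic
           ) AS purchase_number
    FROM purchases
)
SELECT user_id, id AS purchase_id, amount
FROM ordered
WHERE purchase_number = 3
ORDER BY user_id;
"""
third = conn.execute(query).fetchall()
print(third)
```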

7. Write a query to return neighborhoods with no registered users

This is a classic LEFT JOIN anti-join pattern. Identify neighborhoods not referenced by any user by checking for NULL values post-join. This pattern is very relevant in analyzing market expansion or untapped user segments.
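The anti-join can be sketched in a few lines with Python’s `sqlite3` module. Both tables and their rows are hypothetical. Checking `u.id IS NULL` on the users table’s primary key (rather than a nullable column) ensures a NULL means "no match" and not "matched a row with missing data".

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE neighborhoods (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, neighborhood_id INTEGER)")
conn.executemany(
    "INSERT INTO neighborhoods VALUES (?, ?)",
    [(1, "Downtown"), (2, "Midtown"), (3, "Uptown")],
)
conn.executemany(
    "INSERT INTO users VALUES (?, ?)",
    [(10, 1), (11, 1), (12, 3)],  # nobody lives in Midtown
)

query = """
SELECT n.name
FROM neighborhoods AS n
LEFT JOIN users AS u
  ON u.neighborhood_id = n.id
WHERE u.id IS NULL        -- no matching user row survived the join
ORDER BY n.name;
"""
empty = conn.execute(query).fetchall()
print(empty)
```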

Case Study & Business Questions

In nearly every Capital One data analyst interview, candidates are presented with a business case to test how well they reason through ambiguous problems using data. These questions reflect real scenarios an analyst might face on the job—such as analyzing why a product underperformed, designing an experiment, or deciding which metrics to monitor for fraud or retention. Capital One is looking for structured thinking, solid business intuition, and your ability to connect analysis to strategy. Whether you’re given a hypothetical or asked how you’d solve a real-world challenge, this part of the interview is your chance to demonstrate how you turn data into actionable decisions.

8. Calculate the average lifetime value for a SaaS product given churn and revenue retention

Use a formula involving customer lifetime and average revenue per user (ARPU) to estimate LTV. You can model churn as a retention rate and apply geometric series logic if needed. This question gauges your understanding of recurring revenue models, which are relevant to financial products at Capital One.
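Under the simplest assumptions (constant monthly churn rate c, a customer always pays for the first month, and revenue per active month equals ARPU), lifetime is geometric with mean 1/c months, and summing ARPU·(1 − c)^t over t = 0, 1, 2, … gives LTV = ARPU / c. The Python sketch below encodes that; the function names and the 25% churn figure are illustrative only.

```python
def expected_lifetime_months(monthly_churn: float) -> float:
    """Mean of a geometric lifetime with constant churn c: 1 / c months."""
    if not 0 < monthly_churn <= 1:
        raise ValueError("monthly_churn must be in (0, 1]")
    return 1 / monthly_churn


def ltv_closed_form(arpu: float, monthly_churn: float) -> float:
    """Sum of arpu * (1 - c)^t for t = 0, 1, 2, ... which equals arpu / c."""
    return arpu * expected_lifetime_months(monthly_churn)


def ltv_truncated(arpu: float, monthly_churn: float, months: int) -> float:
    """Same series cut off after `months` terms, handy for a sanity check."""
    return sum(arpu * (1 - monthly_churn) ** t for t in range(months))


print(expected_lifetime_months(0.25))  # 4.0
print(ltv_closed_form(100, 0.25))      # 400.0
print(ltv_truncated(100, 0.25, 24))    # approaches 400 as months grows
```

If churn differs by tenure (for example, heavy churn in month one and less afterward), the closed form no longer applies and the series has to be summed period by period.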

9. Evaluate the effectiveness of a 50% rider discount campaign on new Lyft users

Think through a before-and-after comparison using metrics like user retention, ride frequency, and customer acquisition cost (CAC). A/B testing principles may also help determine campaign lift. This mirrors real-world campaign evaluation skills needed in performance marketing analytics.
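If a randomized holdout exists, one common way to test whether the discount lifted retention is a two-proportion z-test. This Python sketch is a swapped-in illustration using only the standard `math` module; the function name and the retention counts are hypothetical. It measures lift only, so the result would still need to be weighed against discount spend and CAC.

```python
import math


def two_proportion_ztest(success_a: int, n_a: int, success_b: int, n_b: int):
    """Compare rates in group A (control) and B (treatment).

    Returns (absolute lift, z statistic, two-sided p-value) using a pooled
    standard error, a normal approximation suited to reasonably large samples.
    """
    p_a = success_a / n_a
    p_b = success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return p_b - p_a, z, p_value


# Hypothetical counts: new riders still active 30 days after signup.
lift, z, p = two_proportion_ztest(success_a=300, n_a=1000, success_b=360, n_b=1000)
print(f"lift={lift:.3f}, z={z:.2f}, p={p:.4f}")
```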

10. Differentiate between scrapers and real users on a platform

Identify behavioral patterns such as API usage frequency, access times, or request headers to flag non-human traffic. Discuss clustering or rule-based classification. It tests your ability to apply anomaly detection and user behavior segmentation.
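A minimal rule-based sketch in Python, assuming per-session features have already been aggregated from request logs. The `SessionStats` fields, both thresholds, and the two-flag rule are hypothetical and would need tuning against labeled traffic; a clustering approach would replace the hand-set thresholds with learned groupings.

```python
from dataclasses import dataclass


@dataclass
class SessionStats:
    user_id: str
    requests_per_minute: float
    night_share: float            # fraction of requests between 00:00 and 05:00 local time
    has_browser_user_agent: bool


# Hypothetical thresholds, for illustration only.
MAX_RPM = 60.0
MAX_NIGHT_SHARE = 0.8


def scraper_flags(s: SessionStats) -> list[str]:
    """Return the names of every rule this session trips."""
    flags = []
    if s.requests_per_minute > MAX_RPM:
        flags.append("high_request_rate")
    if s.night_share > MAX_NIGHT_SHARE:
        flags.append("unusual_hours")
    if not s.has_browser_user_agent:
        flags.append("non_browser_agent")
    return flags


def is_likely_scraper(s: SessionStats, min_flags: int = 2) -> bool:
    """Require several signals at once to limit false positives on heavy human users."""
    return len(scraper_flags(s)) >= min_flags


for s in [SessionStats("u1", 3.0, 0.10, True), SessionStats("b1", 400.0, 0.95, False)]:
    print(s.user_id, scraper_flags(s), is_likely_scraper(s))
```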

11. Analyze customer spending data to identify potential credit card targets

Focus on segmentation, lifetime value, and spending trends across merchant categories. Incorporate KPIs like total spend, frequency, and category preference. Capital One would value this insight when optimizing product targeting strategies.
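A sketch of the per-customer profile behind this kind of segmentation (total spend, transaction count, and top category), using Python’s `sqlite3` module (SQLite 3.25+). The `transactions` table and rows are hypothetical. A profile dominated by travel spend, for instance, might suggest a travel-oriented card offer, though that mapping is an illustrative assumption.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE transactions (customer_id INTEGER, category TEXT, amount INTEGER)")
conn.executemany(
    "INSERT INTO transactions VALUES (?, ?, ?)",
    [
        (1, "travel", 500), (1, "travel", 300), (1, "dining", 50),
        (2, "grocery", 100), (2, "grocery", 120), (2, "dining", 40), (2, "dining", 30),
    ],
)

query = """
WITH cat_spend AS (                 -- spend per customer per merchant category
    SELECT customer_id, category, SUM(amount) AS cat_total
    FROM transactions
    GROUP BY customer_id, category
),
ranked AS (                         -- rank categories within each customer
    SELECT customer_id, category,
           ROW_NUMBER() OVER (
               PARTITION BY customer_id ORDER BY cat_total DESC, category
           ) AS rn
    FROM cat_spend
),
totals AS (                         -- overall spend and frequency
    SELECT customer_id, SUM(amount) AS total_spend, COUNT(*) AS n_txns
    FROM transactions
    GROUP BY customer_id
)
SELECT t.customer_id, t.total_spend, t.n_txns, r.category AS top_category
FROM totals AS t
JOIN ranked AS r
  ON r.customer_id = t.customer_id AND r.rn = 1
ORDER BY t.total_spend DESC;
"""
profile = conn.execute(query).fetchall()
print(profile)
```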

12. Develop a new Lyft Line algorithm, test it, measure its success, and launch it

Walk through a product development lifecycle: requirement gathering, modeling logic, testing framework, and launch metrics. Suggest A/B testing and metrics like ride-matching rate or customer satisfaction. This reflects product strategy thinking crucial to data-driven decision-making.

13. Determine criteria for selecting Dashers for delivery in new markets

Create a scoring system based on availability, proximity, historical reliability, and demand patterns. Consider trade-offs between efficiency and fairness. Useful for roles analyzing gig economy logistics or operational efficiency.
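A weighted-sum scoring sketch in Python. The `Dasher` fields, the assumption that each criterion is pre-normalized to [0, 1], and the weights are all hypothetical. On the fairness side, one option would be to give new Dashers with little history a neutral reliability value instead of zero.

```python
from dataclasses import dataclass


@dataclass
class Dasher:
    name: str
    availability: float  # share of target hours the Dasher can work, 0 to 1
    proximity: float     # 1 = near the new market's demand center, 0 = far away
    reliability: float   # historical completion / on-time rate, 0 to 1
    demand_fit: float    # overlap between availability and forecast demand peaks, 0 to 1


# Hypothetical weights; they sum to 1, so scores stay in [0, 1].
WEIGHTS = {"availability": 0.30, "proximity": 0.25, "reliability": 0.30, "demand_fit": 0.15}


def score(d: Dasher) -> float:
    """Weighted sum of the normalized criteria."""
    return sum(weight * getattr(d, field) for field, weight in WEIGHTS.items())


def rank_dashers(dashers: list[Dasher]) -> list[Dasher]:
    """Highest score first."""
    return sorted(dashers, key=score, reverse=True)


pool = [
    Dasher("b", availability=0.5, proximity=0.9, reliability=0.6, demand_fit=0.5),
    Dasher("a", availability=0.9, proximity=0.8, reliability=0.95, demand_fit=0.7),
]
for d in rank_dashers(pool):
    print(d.name, round(score(d), 3))
```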

14. Project the lifetime and lifetime value of a new driver based on churn and revenue data

Use historical retention data to estimate how long new drivers remain active. Combine with revenue per active period to project LTV. Highly relevant for assessing partner strategy in gig-based services or card member engagement forecasting.
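One way to turn a cohort retention curve into an expected lifetime is to sum the survival shares across periods, since each period contributes the probability that the driver is still active. This Python sketch assumes constant revenue per active period and can optionally extend the observed curve with a geometric tail based on the last period-over-period retention ratio. The function names and cohort numbers are hypothetical.

```python
def expected_active_periods(survival: list[float], extrapolate_tail: bool = False) -> float:
    """Expected number of active periods for one new driver.

    survival[t] is the share of a starting cohort still active in period t,
    with survival[0] == 1.0. E[lifetime] = sum over t of P(active in period t).
    """
    total = sum(survival)
    if extrapolate_tail and len(survival) >= 2 and survival[-2] > 0:
        r = survival[-1] / survival[-2]  # last observed period-over-period retention
        if r < 1:
            # Assume retention stays at r: s_T*r + s_T*r^2 + ... = s_T*r / (1 - r)
            total += survival[-1] * r / (1 - r)
    return total


def projected_ltv(survival: list[float], revenue_per_active_period: float,
                  extrapolate_tail: bool = False) -> float:
    """LTV under the simplifying assumption of constant revenue per active period."""
    return revenue_per_active_period * expected_active_periods(survival, extrapolate_tail)


# Hypothetical weekly retention curve for a cohort of newly onboarded drivers.
cohort = [1.0, 0.60, 0.45, 0.40, 0.38]
print(expected_active_periods(cohort))                         # observed window only
print(expected_active_periods(cohort, extrapolate_tail=True))  # with geometric tail
print(projected_ltv(cohort, revenue_per_active_period=200.0, extrapolate_tail=True))
```

The truncated sum understates lifetime whenever drivers are still active at the end of the observed window; the tail extrapolation is one simple, assumption-heavy fix.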

Behavioral or Culture Fit Questions

Capital One places a strong emphasis on collaboration, communication, and cultural fit—so expect several behavioral questions throughout the process. These questions help interviewers understand how you’ve handled cross-functional projects, learned from failure, managed competing priorities, or driven results in challenging situations. Your answers give Capital One insight into how you operate as a teammate, how well you embody ownership and curiosity, and how you’d contribute to a data-literate and innovative workplace. Clear, structured storytelling—especially through the STAR (Situation, Task, Action, Result) format—can help make your experiences both memorable and meaningful.

15. Tell me about a time you had to resolve a conflict within a team

Begin by describing the context of the team and the nature of the conflict. Explain how you approached the situation—whether you facilitated communication, clarified expectations, or escalated appropriately. Focus on what you learned about interpersonal dynamics. This question reveals your ability to work constructively in cross-functional settings.

16. Describe a time when you received constructive feedback and how you responded

Set the scene with a project or task where feedback was offered. Share your initial reaction and what actions you took to improve based on the input. Highlight how this experience influenced your future work. Feedback agility is a key trait for high-performance teams.

17. Have you ever worked in a team where someone wasn’t contributing? What did you do?

Explain the situation without blaming others and focus on your problem-solving approach. Whether you stepped up responsibilities or had a conversation, emphasize collaboration and empathy. This assesses both initiative and respect for team cohesion.

18. Tell me about a time you had to work with a team very different from you

Clarify the differences—whether in background, skillset, or communication style—and explain how you bridged those gaps. Point out adjustments you made to collaborate more effectively. This demonstrates openness to diverse perspectives and adaptability.

19. Describe a project where aligning with company values helped your decision-making

Share a scenario where you faced ambiguity and used core values (such as integrity, customer-first mindset, or accountability) to guide your actions. Highlight the impact of those choices on the outcome. This helps assess culture fit and ethical judgment.

20. Give an example of how you handled a disagreement with your manager or senior stakeholder

Discuss a situation where perspectives differed and how you respectfully voiced your opinion. Emphasize the resolution process, focusing on compromise, clarity, or data-based persuasion. Conflict resolution is critical when collaborating across levels.

21. How do you prioritize competing deadlines or responsibilities under pressure?

Lay out a method, such as an impact/urgency matrix or stakeholder input, to set priorities. Mention tools or routines (e.g., calendars, checklists) you use to stay organized. Time and stress management are core skills for analysts balancing multiple stakeholders.

How to Prepare for a Data Analyst Role at Capital One

Preparing for the Capital One data analyst interview requires more than just brushing up on technical skills—it’s about aligning your mindset with the company’s data-driven, customer-focused culture. Capital One looks for analysts who can not only write clean SQL queries but also think strategically and communicate insights clearly. Whether you’re targeting an internship or a full-time position, focused preparation across technical, business, and behavioral areas can dramatically improve your chances of success.

Study the Role & Culture

Start by learning how Capital One uses data in real-world contexts, such as fraud detection, customer segmentation, and credit risk modeling. Understanding the company’s mission and recent innovations helps you position yourself as a candidate who speaks their language. When refining your resume or preparing talking points, highlight examples where you’ve driven measurable impact, shown innovation, or made ethical decisions—values that align directly with Capital One’s cultural pillars: impact, innovation, and integrity.

Practice Common Question Types

Technical and case interviews are the backbone of the Capital One data analyst interview, with a strong emphasis on SQL and data-driven business reasoning. Capital One expects analysts to go beyond just pulling data—they want people who can find patterns, think strategically, and communicate insights clearly.

SQL skills are especially important and often tested in the Capital One data analyst CodeSignal assessment. You can expect multi-step problems involving joins, window functions, filtering, and ranking—similar to real tasks analysts handle on the job. Instead of memorizing syntax, focus on solving business-style problems with clean, readable logic. Practicing SQL on Interview Query or LeetCode’s database section is a great way to simulate the challenge. Pay close attention to CTEs, subqueries, and handling edge cases—these are often included in the assessment to test your ability to think critically and code efficiently.

Business case questions test how you apply data to solve real-world problems. Capital One values structured thinking and product intuition, so practice walking through cases like feature adoption, churn analysis, or test design. Focus on defining success metrics, outlining a clear approach, and thinking through potential pitfalls. These questions reflect the analyst’s role as a strategic partner, so your reasoning matters just as much as your conclusions.

Finally, don’t overlook behavioral prep. Capital One cares about how well you collaborate, lead, and learn from challenges. Prepare a few strong examples where you’ve driven impact, handled ambiguity, or worked cross-functionally—stories that reflect the company’s values of curiosity, impact, and integrity.

Think Out Loud & Ask Clarifying Questions

During the Capital One data analyst interview, how you structure your thinking is just as important as your final answer. One helpful approach for case or business questions is the H-M-E-P framework: begin with a hypothesis (what you think might be happening), then outline the metrics you’d use to test it, describe how you’d explore the data (segmentation, timeframe, comparisons), and conclude with potential pitfalls or limitations. This shows you’re thinking like a product partner, not just a coder.

For behavioral questions, the STAR method (Situation, Task, Action, Result) is a reliable way to stay concise while highlighting your impact.

When solving SQL or technical problems, practice the habit of thinking out loud. Start by restating the goal in plain language, then walk through your approach step by step. If something is unclear, ask clarifying questions rather than guessing. Capital One interviewers appreciate candidates who are collaborative, thoughtful, and able to communicate their logic clearly, just as they would in real project discussions.

Brute Force, Then Optimize

Start with a simple query or solution, then walk through performance improvements. In technical rounds of the Capital One data analyst interview, a practical framework to follow is Baseline → Clarify → Optimize. The baseline is a clear, correct query that satisfies the business need, even if it’s not the most efficient. For example, you might first use a subquery or temporary table to join customer purchases with product categories. Once that’s working, clarify your assumptions out loud: mention if you’re assuming no duplicate records, or that NULLs should be excluded. This signals to the interviewer that you’re thinking critically about data quality and edge cases.

Then, move into optimization: explain how you’d refactor the query using Common Table Expressions (CTEs) for readability, or replace a subquery with a window function to improve performance. You might mention filtering earlier in the query to reduce rows before joins, or suggest indexing certain columns in production. At each step, check in with the interviewer—ask, “Would you like me to walk through how I’d tune this further?” or “Would it be helpful if I added handling for NULL values here?” This kind of interactive, step-by-step reasoning reflects how Capital One analysts debug real data pipelines and collaborate across teams.
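A small illustration of the Baseline → Optimize step, using Python’s `sqlite3` module (SQLite 3.25+) and a hypothetical `purchases` table: a correlated-subquery baseline for each customer’s latest purchase, then a CTE plus ROW_NUMBER() refactor that returns the same rows on this data. Actual performance differences depend on the database engine, data volume, and indexes, so the comments describe typical behavior rather than a guarantee.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE purchases (purchase_id INTEGER PRIMARY KEY, customer_id INTEGER, "
    "purchased_at TEXT, amount INTEGER)"
)
conn.executemany(
    "INSERT INTO purchases VALUES (?, ?, ?, ?)",
    [
        (1, 10, "2024-01-01", 50), (2, 10, "2024-02-01", 75),
        (3, 20, "2024-01-15", 20), (4, 20, "2024-01-10", 30),
        (5, 30, "2024-03-01", 99),
    ],
)

# Baseline: correct and readable, but depending on the engine the correlated
# subquery may be evaluated once per outer row. Assumes no two purchases share
# a timestamp for the same customer; otherwise every tied row is returned.
baseline_sql = """
SELECT p.customer_id, p.purchase_id, p.amount
FROM purchases AS p
WHERE p.purchased_at = (
    SELECT MAX(p2.purchased_at)
    FROM purchases AS p2
    WHERE p2.customer_id = p.customer_id
)
ORDER BY p.customer_id;
"""

# Refactor: a CTE plus ROW_NUMBER() avoids the per-row subquery and breaks ties explicitly.
optimized_sql = """
WITH ranked AS (
    SELECT customer_id, purchase_id, amount,
           ROW_NUMBER() OVER (
               PARTITION BY customer_id
               ORDER BY purchased_at DESC, purchase_id DESC
           ) AS rn
    FROM purchases
)
SELECT customer_id, purchase_id, amount
FROM ranked
WHERE rn = 1
ORDER BY customer_id;
"""

# In production, an index on the partition/order columns can help either version.
conn.execute("CREATE INDEX idx_purchases_customer_time ON purchases (customer_id, purchased_at)")

baseline = conn.execute(baseline_sql).fetchall()
optimized = conn.execute(optimized_sql).fetchall()
print(baseline)
print(optimized)
```

Confirming that the refactor matches the baseline on sample data before discussing speed is itself a good habit to narrate in the interview.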

Mock Interviews & Feedback

Practicing in real conditions can make a big difference. Try scheduling a mock interview with a peer, a mentor, or even a former Capital One analyst if you have access. Getting feedback on your communication, SQL fluency, and case logic will sharpen your delivery and boost your confidence. If possible, record yourself answering questions and review your clarity and pacing.

FAQs

What Is the Average Salary for a Data Analyst Role at Capital One?

[Chart: Average Base Salary and Average Total Compensation]

Where Can I Read More Discussion Posts on Capital One’s Data Analyst Role Here in IQ?

You can read more discussion posts and interview reports about Capital One’s Data Analyst role on Interview Query’s Discussion pages. These pages include real candidate experiences, community answers, and sample questions from actual interviews.

Are There Job Postings for Capital One Data Analyst Roles on Interview Query?

Yes! Interview Query regularly features listings for Capital One openings across data analyst, business analyst, and internship roles. You can explore postings for full-time jobs as well as the Capital One data analyst intern pipeline, which often opens each fall and spring for students interested in analytics, product, and finance tracks. These listings often include job descriptions, required skills, and even sample questions to help guide your prep.

See the latest openings and apply with real-world prep by visiting the Interview Query job board or checking Capital One’s official careers page.

Conclusion

The Capital One data analyst interview process is one of the more structured and skill-focused hiring experiences in the analytics space. From SQL assessments to business reasoning and behavioral questions, each round is designed to evaluate how well you understand and communicate data in a real-world setting. Success comes down to preparation—focus heavily on SQL problem-solving and structured case responses, while also preparing STAR-format stories that reflect Capital One’s values of impact, innovation, and integrity.

For more resources, explore our guides on data scientist and business analyst interviews, or consider signing up for mock interview coaching tailored to Capital One roles. Preparing thoughtfully will not only help you get the offer—it will help you start strong on day one.